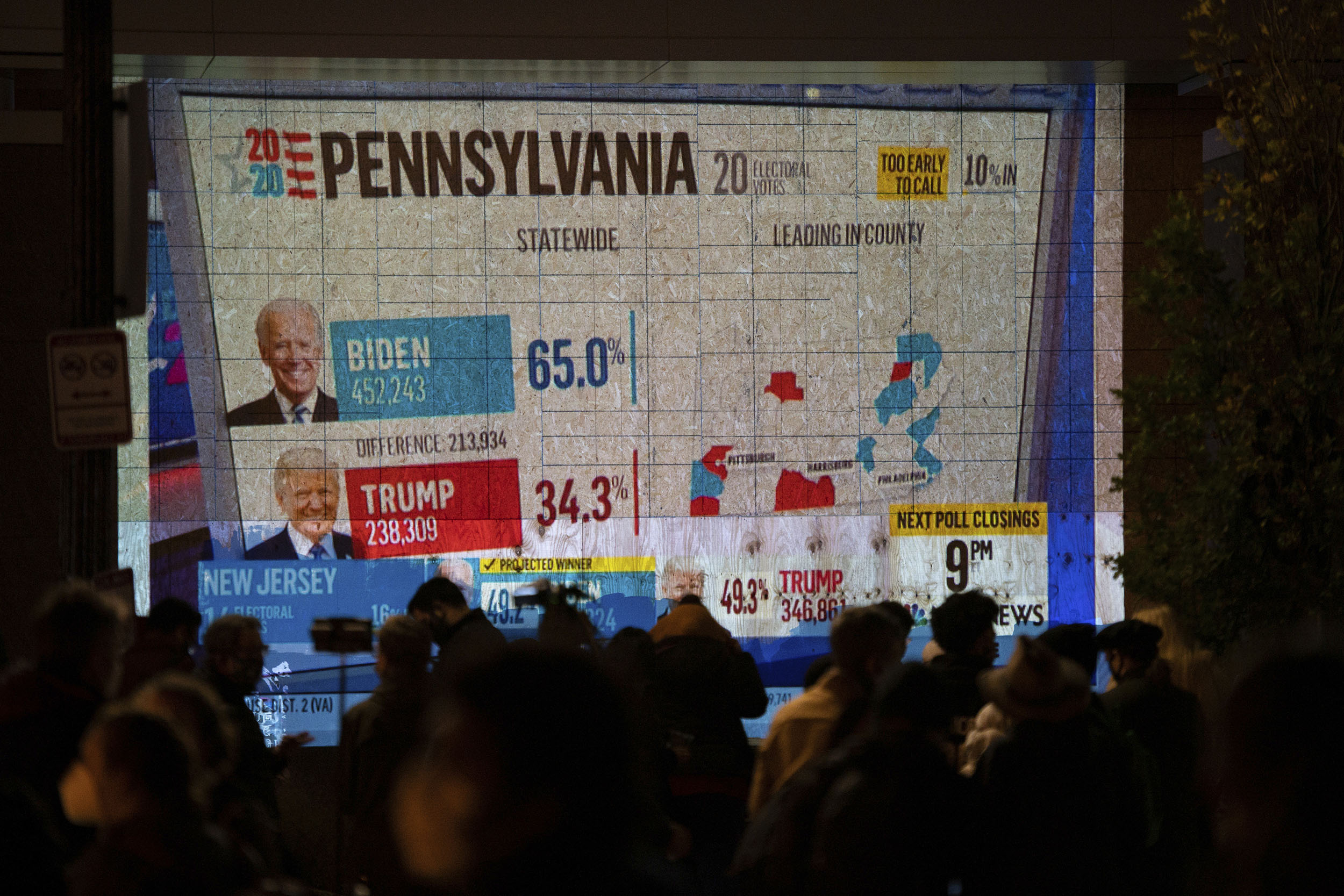

A screen in Black Lives Matter Plaza, near the White House, shows the results as they were updated on Election Day. Former Vice President Joe Biden headed into Election Day with a sizable national polling advantage over President Donald Trump.

Graeme Sloan/Sipa USA via AP Images

The problems (and promise) of polling

Harvard experts weigh the good and bad of political predictions

Did the polls hoodwink us again as they did in 2016? It’s complicated.

For the past 48 hours, voters have fumed over what they consider another round of flawed opinion research and forecasting that again gave a Democratic candidate (this time former Vice President Joe Biden instead of Hillary Clinton) a sizable lead heading into Tuesday. Based on that information, and fueled by accompanying hopes or fears, millions watched, increasingly bleary-eyed and incredulous, as yet another election night dragged into the next day with no clear winner in sight.

“As usual, the answer is somewhere in between,” said Ryan Enos, a government professor and faculty associate of the Institute for Quantitative Social Science. “It’s clear people are going to be exaggerating how much the polls got it wrong. But it’s also clear that they didn’t get it exactly right. And they certainly didn’t get it as right as we might have liked, given the enormity of the stakes right now.”

Both Republicans and Democrats insist their complaints are justified. They haven’t forgotten key polls that favored Clinton, and how Donald Trump decisively won the Electoral College with 304 votes to Clinton’s 227. (Clinton did win the popular vote with 2.8 million more ballots cast in her favor, pollsters are quick to note.)

Trump, who remains behind Biden in the ongoing electoral vote count, blasted the polls Wednesday, tweeting, “The ‘pollsters’ got it completely & historically wrong!” Twitter intervened, tagging his message with the line, “Some or all of the content shared in this Tweet is disputed and might be misleading about an election or other civic process.”

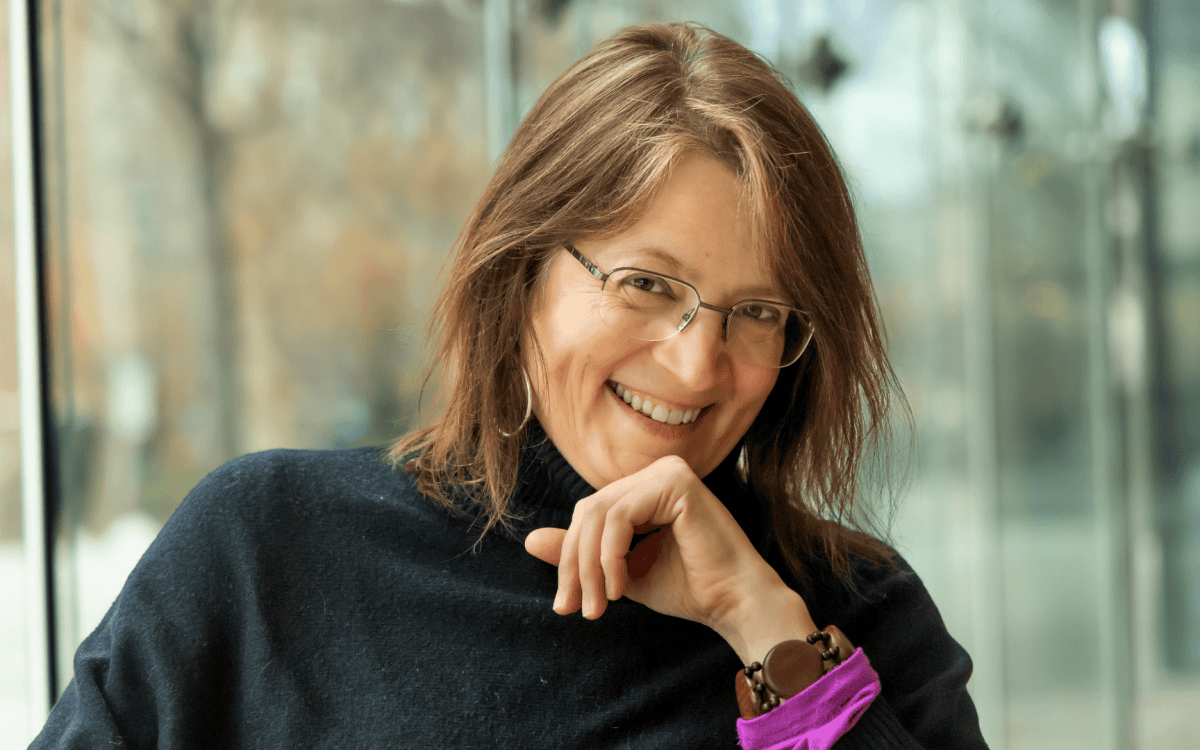

“… they certainly didn’t get it as right as we might have liked, given the enormity of the stakes right now,” says Ryan Enos.

Kris Snibbe/Harvard file photo

But while it may seem easy to again point fingers at poorly crafted surveys for the tighter-than-expected race, the accuracy and utility of polling, like the unfolding 2020 election result, are more complicated. On the one hand, many of the polls that predicted Biden winning in certain states were correct, said Enos. And many of them also accurately predicted close races in others. “So we shouldn’t jump to the conclusion that the polls were wrong, because they sort of delivered the information they were supposed to deliver,” he said.

On the other hand, he added, “There are going to be really big misses,” both in terms of the polling for congressional seats and the presidency. He singled out Susan Collins’ Senate race in Maine, where surveys consistently had the incumbent down by 10 percentage points (Collins appears to have won by about 9), and the presidential race in Michigan, where pollsters steadily put Biden up by 9. (The Associated Press called the Midwest state for Biden, with a winning margin of just under 3 percentage points.)

What’s important to keep in mind, said Enos, is that polling is “really, really hard,” and the more you try to estimate opinion within a very specific group of people in a very specific place, under pandemic restrictions like those of COVID-19 and with voter enthusiasm attached to a polarizing political figure like Trump, the “harder it is to get right.”

“We don’t exactly have the technology available to us in the polling industry right now to get these answers as right as people would like them.”

Despite the frustration voiced by many, Chase H. Harrison, preceptor in survey research in the department of government and associate director of the Program on Survey Research at the Institute for Quantitative Social Science, agrees with Enos that the 2020 polling may not have been that far off.

“My sense heading into the 2020 election was that the forecast models had a narrow possibility that you would see something like a huge Democratic sweep; a narrow possibility that Donald Trump would win the election; and somewhat of a more likely possibility that there would be some number of states voting for Biden,” said Harrison, who cautions that without the full vote count completed, it’s still too soon to determine whether polls were, in fact, truly misleading.

In 2016, poor poll design gave Clinton a huge edge, Harrison told the Gazette in September. He pointed out that many polls in the battleground states of Wisconsin, Michigan, and Pennsylvania underestimated the level of support for Trump. Typically people with college degrees have higher response rates to surveys, and pollsters failed to sufficiently factor in the gap in education levels between many Trump and Clinton supporters. This year Harrison believes polling organizations have corrected those errors and put more comprehensive predictive modeling systems in place.
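The education gap Harrison describes can be illustrated with a small weighting calculation. The sketch below is not any pollster's actual method; it assumes a simple form of post-stratification weighting, and every number in it (the respondent counts, the support rates, and the electorate shares) is hypothetical and chosen only to show the direction of the effect.

```python
# Hypothetical survey: college graduates answer more often than they vote.
# None of these figures come from real polling data.
sample = {
    "college": {"respondents": 600, "candidate_a": 0.60},
    "non_college": {"respondents": 400, "candidate_a": 0.40},
}

# Assumed share of each group in the actual electorate (hypothetical).
electorate_share = {"college": 0.40, "non_college": 0.60}


def unweighted_support(sample):
    """Support for candidate A if every respondent counts equally."""
    total = sum(group["respondents"] for group in sample.values())
    supporters = sum(
        group["respondents"] * group["candidate_a"] for group in sample.values()
    )
    return supporters / total


def weighted_support(sample, electorate_share):
    """Support for candidate A after reweighting groups to the electorate."""
    return sum(
        electorate_share[name] * group["candidate_a"]
        for name, group in sample.items()
    )


raw = unweighted_support(sample)
adjusted = weighted_support(sample, electorate_share)
print(f"Unweighted support for candidate A: {raw:.0%}")
print(f"Education-weighted support for candidate A: {adjusted:.0%}")
```

In this made-up case the raw sample shows candidate A ahead, while the education-weighted estimate shows the same candidate behind, which is the kind of shift that unadjusted surveys can miss.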

“I’m not saying that you shouldn’t do any polls, but I think other ways of gathering information should be developed and given more privilege,” suggests political scientist Theda Skocpol.

File photo by Aaron Ye

National polls may have been largely on target, but that appears to be less true for state-level surveys, Harrison said, which is critical given the state-based nature of voting in the U.S. But until counting is truly complete it will be difficult to determine how off the calls may be. “We are going to want to take a look at Wisconsin because the good-quality polls seem to have a stronger Biden lead than we have … it seems as if we might have had the same problem in Wisconsin as we did [in 2016].”

Going forward, one of the biggest problems may be the proliferation of low-quality polls and a general public that lacks the statistical training to sort out how that may figure in. For example, taking the average from a range of credible polls to come up with a number like “plus 7” for a particular candidate can lead many people to assume the outcome is all but predetermined and to be surprised when the election goes another way. “We have to take a look at whether the problem is with the surveys we’re doing, if the problem is with the election forecasting, or if the problem is we just have unreasonable expectations from some of these large aggregating models,” said Harrison.
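To see why a “plus 7” average does not make an outcome certain, consider a rough sketch. It assumes, purely for illustration, that the true margin is normally distributed around the polling average with a standard deviation of 5 points; the poll margins and that error figure are hypothetical and are not taken from any forecasting model.

```python
from statistics import NormalDist, mean

# Hypothetical poll margins (leader's advantage in percentage points).
poll_margins = [5.0, 7.0, 9.0]
average_lead = mean(poll_margins)

# Assumed standard deviation of total polling error, in points (hypothetical).
assumed_error_sd = 5.0

# Model the true margin as normal around the average.
# The leader wins whenever the true margin is above zero.
true_margin = NormalDist(mu=average_lead, sigma=assumed_error_sd)
win_probability = 1.0 - true_margin.cdf(0.0)
upset_probability = 1.0 - win_probability

print(f"Average lead: +{average_lead:.0f}")
print(f"Chance the leader wins: {win_probability:.0%}")
print(f"Chance of an upset: {upset_probability:.0%}")
```

Under these assumptions the leader wins about 92 percent of the time, which still leaves roughly a 1-in-12 chance of the surprise result that many readers treat as impossible.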

Political scientist Theda Skocpol isn’t ready to give up entirely on polling just yet, but she does think the current process, which often relies on dinnertime robocalls, “artificially constructed” focus groups, and oversimplified voter categories, needs a serious overhaul. “The whole way we think about what’s going to happen politically is not based on talking to people or observing people in their contexts,” said Skocpol, Harvard’s Victor S. Thomas Professor of Government and Sociology. “It’s based on these methods of data collection, and also thinking about the data, which aren’t working anymore.”

For Skocpol, what works is something she has done for the past several years while researching her most recent book, “Upending American Politics: Polarizing Parties, Ideological Elites, and Citizen Activists from the Tea Party to the Anti-Trump Resistance,” with co-editor Caroline Tervo. Together Skocpol and Tervo made repeated trips to eight counties in four swing states — North Carolina, Pennsylvania, Ohio, and Wisconsin — developing close relationships with people on the ground. Skocpol sees similar grassroots efforts as the key to effective polling in the future.

“I’m not saying that you shouldn’t do any polls, but I think other ways of gathering information should be developed and given more privilege. And they’re going to be more slow-moving and less national. … When I want to know what’s going on in one of my counties, and I can’t go there, I get in touch with a couple of newspaper people there who I know who still are in touch with their world,” said Skocpol.

One Harvard scholar thinks polling and predictions set the public up for weeks and months of needless worry when there is likely a better way to help voters parse the numbers and avoid the angst.

A more accessible framing of polling data and analysis based on “relative frequency” could help cut down on the public’s misinterpretations, says Steven Pinker.

Harvard file photo

“Many people spent the run-up to the election on tenterhooks trying to make sense of the strange claim that Biden had a 90 percent chance of winning,” said cognitive psychologist and Johnstone Family Professor Steven Pinker, referencing statistician Nate Silver’s FiveThirtyEight.com, which gave Trump only a 10 percent chance of victory just days before the election. “Should they be relieved at the odds or terrified by the possibility? What does the probability of a single event mean? The election happens once; it’s not as if we have 100 runs in which Biden would win 90. Even mathematicians and philosophers disagree over what ‘probability’ means: frequency in the long run, degree of subjective credence, proportion of logical possibilities, evidential warrant?”

A more accessible framing of polling data and analysis based on “relative frequency” could also help cut down on the public’s misinterpretations, said Pinker, and avoid the confusion often associated with trying “to interpret numbers assigned to single events.”


“It’s easier to understand your chance of having a disease given a positive test result if the situation is explained in terms of how many people out of 1,000 have the disease, how many of them truly and falsely test positive, and so on, compared to giving people a bunch of probabilities that apply to a single hypothetical patient,” said Pinker. “It’s no wonder that people don’t know how to wrap their minds around a decimal fraction assigned to an outcome in an election that happens only once.”
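Pinker’s disease-testing example can be worked through as a count of people rather than a chain of probabilities. The sketch below is only an illustration: the prevalence, the test’s sensitivity, and its false-positive rate are hypothetical values, and the function name is invented for this example.

```python
def natural_frequencies(population, prevalence, sensitivity, false_positive_rate):
    """Restate a diagnostic-test problem as whole-number counts of people."""
    sick = round(population * prevalence)
    healthy = population - sick
    true_positives = round(sick * sensitivity)
    false_positives = round(healthy * false_positive_rate)
    return {
        "sick": sick,
        "healthy": healthy,
        "true_positives": true_positives,
        "false_positives": false_positives,
    }


# Hypothetical test characteristics, chosen only for illustration.
counts = natural_frequencies(
    population=1000,
    prevalence=0.01,
    sensitivity=0.90,
    false_positive_rate=0.09,
)
positives = counts["true_positives"] + counts["false_positives"]
share_sick = counts["true_positives"] / positives

print(f"Out of 1,000 people, {counts['sick']} have the disease.")
print(f"{counts['true_positives']} of them test positive.")
print(f"{counts['false_positives']} healthy people also test positive.")
print(f"So {counts['true_positives']} of {positives} positive results are true.")
```

Framed this way, a reader can see directly that with these made-up numbers only 9 of the 98 people who test positive actually have the disease, without having to manipulate conditional probabilities.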

Determining which polls did well, and why, will take time, with further clarity not expected before the end of the month. But closer scrutiny of state-level polling could yield some key lessons, Harrison said.

“For those who did a lot of polls that were consistently right across states, we’re going to take a look at what they did in terms of method and turnout. And people who were consistently wrong across multiple states, especially high-quality polls, we’ll take a look at what they did. And if they’re doing different things, it’ll teach us something about how to predict things in the future.”