Earthquake data is less shaky

Improvements made in determining quake location

There are people in Los Angeles, accountants and writers and teachers, who have become so accustomed to feeling the ground shake that they make a sport of trying to determine every earthquake’s point of origin, betting that they can call it within a certain number of miles or dinner is on them. More often than not, they lose, and when their predictions do match those of seismologists, Michael Antolik would likely tell you, it’s probably a matter of good old-fashioned luck. Antolik, a postdoctoral fellow in the Department of Earth and Planetary Sciences, is working to improve the locating of earthquakes. No, he can’t move them from one place to another, but he is making them easier to find.

Pinpointing the epicenter of an earthquake is not as easy as it sounds. Conventional one-dimensional seismic-velocity models often fall short of the mark, particularly in terms of depth, mislocating seismic events by an average of about seven kilometers. In a three-year study funded in part by the American military’s Defense Threat Reduction Agency, Antolik has developed a new simulation model that consistently positions events with greater precision, reducing the mislocation to only six kilometers on average.

One kilometer’s difference might not seem like much, but in this case it could help prevent an international incident. Antolik’s research is primarily devoted to successful monitoring of the Comprehensive Nuclear Test Ban Treaty endorsed by the United Nations in 1996. (Though the treaty has been signed by 165 countries, it has been ratified by only 31, and the United States is not yet among them, despite its decade-long moratorium on testing.)

“When you set off a bomb,” Antolik says, “nuclear or otherwise, you create seismic waves just like an earthquake, so they can be recorded by seismographs. Under the treaty all nuclear testing is prohibited, so if a signatory to the treaty happened to set off a bomb it would be in violation.” Accurately locating seismic events can help the treaty organization determine whether anyone is cheating on the agreement, under whose terms any country can ask for an on-site inspection within a radius of 18 kilometers from a seismic event of magnitude 4 or greater.

It was hardly the Bay of Pigs, but a rumble off the north coast of Russia a few years ago recalled dormant Cold War tensions. “There was a lot of controversy,” Antolik says, “because they couldn’t figure out precisely where it was. They knew it was very close to a nuclear test site, and it’s a region that doesn’t have very many earthquakes, so the State Department actually almost went so far as to accuse the Russians of setting off a bomb. But eventually they were able to prove it was an earthquake.” They did so by fixing the depth of the event: Bombs are usually detonated within a kilometer or so of the earth’s surface, substantially shallower than an earthquake would be.

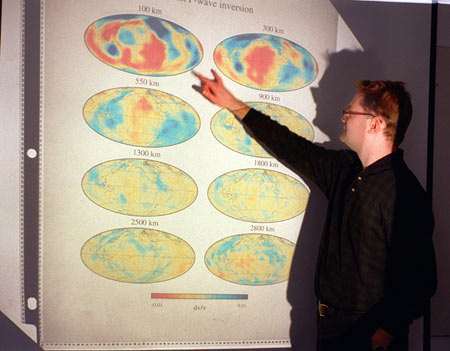

When analyzing the conventional models for locating events, what Antolik found, basically, was a problem with parameterization, or the number and kinds of variables entered into the equation. “When I first came here I tested a number of tomographic models, which are models that measure seismic wave speeds,” he says. “Normally you would expect that the better the resolution – in other words, the more finely parameterized the model is – the better locations you would get out. But I found that the worst models were actually the ones that were more finely parameterized, and the reason for that, I think, is that the data they used were not thorough enough.”

Previous models used complicated mathematical functions called spherical harmonics to track earthquake locations; Antolik used, instead, functions called splines, which are smoother and less complex, and, perhaps most crucially, take up less computer space. With less memory being devoted to the functions, Antolik was able to enter important data on a larger number of earthquakes.

Since 1977, Harvard has been cataloging quakes of magnitude 5.5 or larger in what has become a standard of seismology, the centroid moment tensor (CMT) project. Antolik took that data – the waveforms recorded at 30 or so seismic stations globally for each of about 10,000 earthquakes – and combined it, for the first time, with the wave-travel times of several hundred thousand earthquakes recorded since 1964 by the International Seismological Centre in Berkshire, England. “It’s not the first time this kind of joint tomography has been done,” says Antolik, “but I think it’s the most comprehensive study in joint tomography. It uses the largest set of data, and also has a little bit of different parameterization than other people have used.”

Antolik used not only the travel times – that is, how long it takes the waves from an earthquake in New Guinea, say, to reach Dallas, New York, Tokyo, and a dozen other cities around the globe – but also the primary, or compression; secondary, or shear; and surface, or dispersion, waveforms of each earthquake. He was able to test his model using information from his database of previously recorded explosions with precisely known locations, comparing locations he had obtained with the model to true ones. Now, when there’s a seismic event, he can plug data into the new model, which tells him about temperature and density fluctuations that affect the travel time of seismic waves, allowing him to pinpoint with greater precision the location of the rumble.
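For readers curious how travel times alone can point to a location, here is a toy sketch in Python. It is not Antolik’s model: it assumes a flat map, a single constant wave speed, and a brute-force grid search, and the function name `locate_event` and every number in it are invented for illustration. A real model replaces the constant speed with speeds that vary through the earth, which is where the improvement in accuracy comes from.

```python
import math


def locate_event(stations, arrivals, speed_km_s, grid_km=100.0, step_km=1.0):
    """Grid-search the epicenter that best explains the arrival times.

    stations: list of (x_km, y_km) positions on a flat map (an assumption).
    arrivals: arrival time in seconds at each station, in the same order.
    speed_km_s: one constant wave speed, the simplest possible velocity model.
    Returns (x_km, y_km, origin_time_s) for the best-fitting grid point.
    """
    best = None
    n = int(grid_km / step_km)
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            x, y = i * step_km, j * step_km
            # Travel time this trial location predicts for each station.
            predicted = [math.hypot(x - sx, y - sy) / speed_km_s
                         for sx, sy in stations]
            # The origin time is unknown too; its least-squares estimate is
            # the mean difference between observed and predicted times.
            origin = sum(a - p for a, p in zip(arrivals, predicted)) / len(stations)
            # Sum of squared residuals left over after removing the origin time.
            misfit = sum((a - p - origin) ** 2
                         for a, p in zip(arrivals, predicted))
            if best is None or misfit < best[0]:
                best = (misfit, x, y, origin)
    return best[1], best[2], best[3]


# Synthetic check: invent an event, compute its arrivals, then recover it.
stations = [(-80.0, -60.0), (90.0, -40.0), (70.0, 85.0), (-60.0, 75.0)]
true_x, true_y, true_t0, speed = 12.0, -7.0, 5.0, 6.0
arrivals = [true_t0 + math.hypot(true_x - sx, true_y - sy) / speed
            for sx, sy in stations]
x, y, t0 = locate_event(stations, arrivals, speed, grid_km=50.0)
print(x, y, round(t0, 3))
```

Passing the search a wave speed different from the one that generated the arrivals gives a sense of why a better velocity model matters for locating events.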

Now if only he could get a piece of the action on those dinner bets.