New kind of AI model gives robots a visual imagination

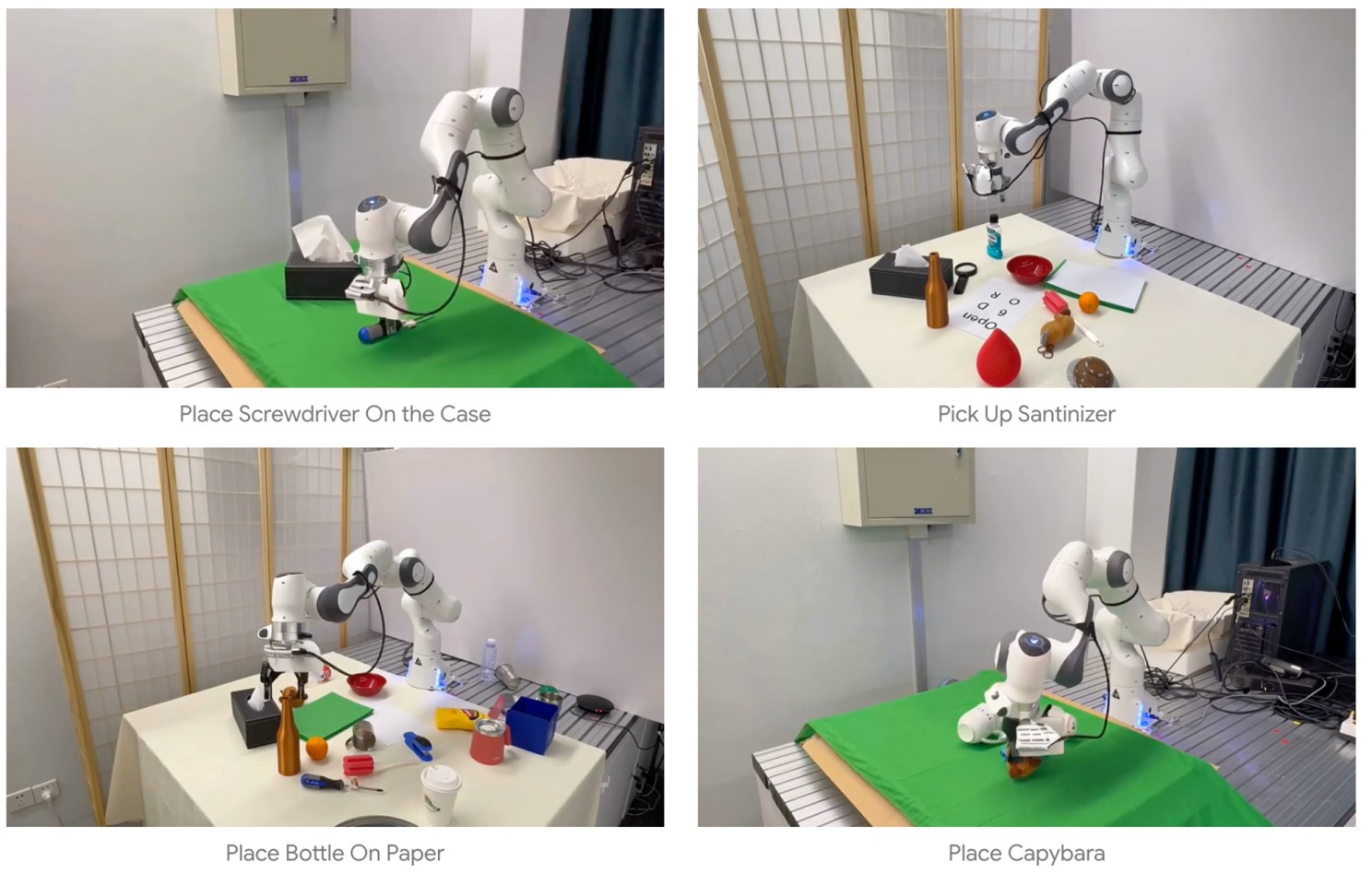

Video stills show a robot gripper generalizing its knowledge to perform specific actions in response to prompts, such as picking up a screwdriver and placing it on a case, or picking up a bottle of hand sanitizer.

Credit: Yilun Du

In a major step toward more adaptable and intuitive machines, Kempner Institute investigator Yilun Du and his collaborators have unveiled a new kind of artificial intelligence system that lets robots “envision” their actions before carrying them out. The system, which uses video to help robots imagine what might happen next, could transform how robots navigate and interact with the physical world.

This breakthrough, described in a new preprint and blog post, marks a shift in how researchers think about robot learning. In recent years, researchers have developed vision-language-action (VLA) systems — a type of robot foundation model that combines sight, understanding, and movement to give robots general-purpose skills, reducing the need for retraining the robot every time it encounters a new task or environment.

Yet even the most advanced systems, most of which rely heavily on large language models (LLMs) to translate words into movement, have struggled to effectively teach robots to generalize knowledge in new situations. So, instead, Du’s team trained its system using video.

“Language contains little direct information about how the physical world behaves,” said Du. “Our idea is to train a model on a large amount of internet video data, which contains rich physical and semantic information about tasks.”