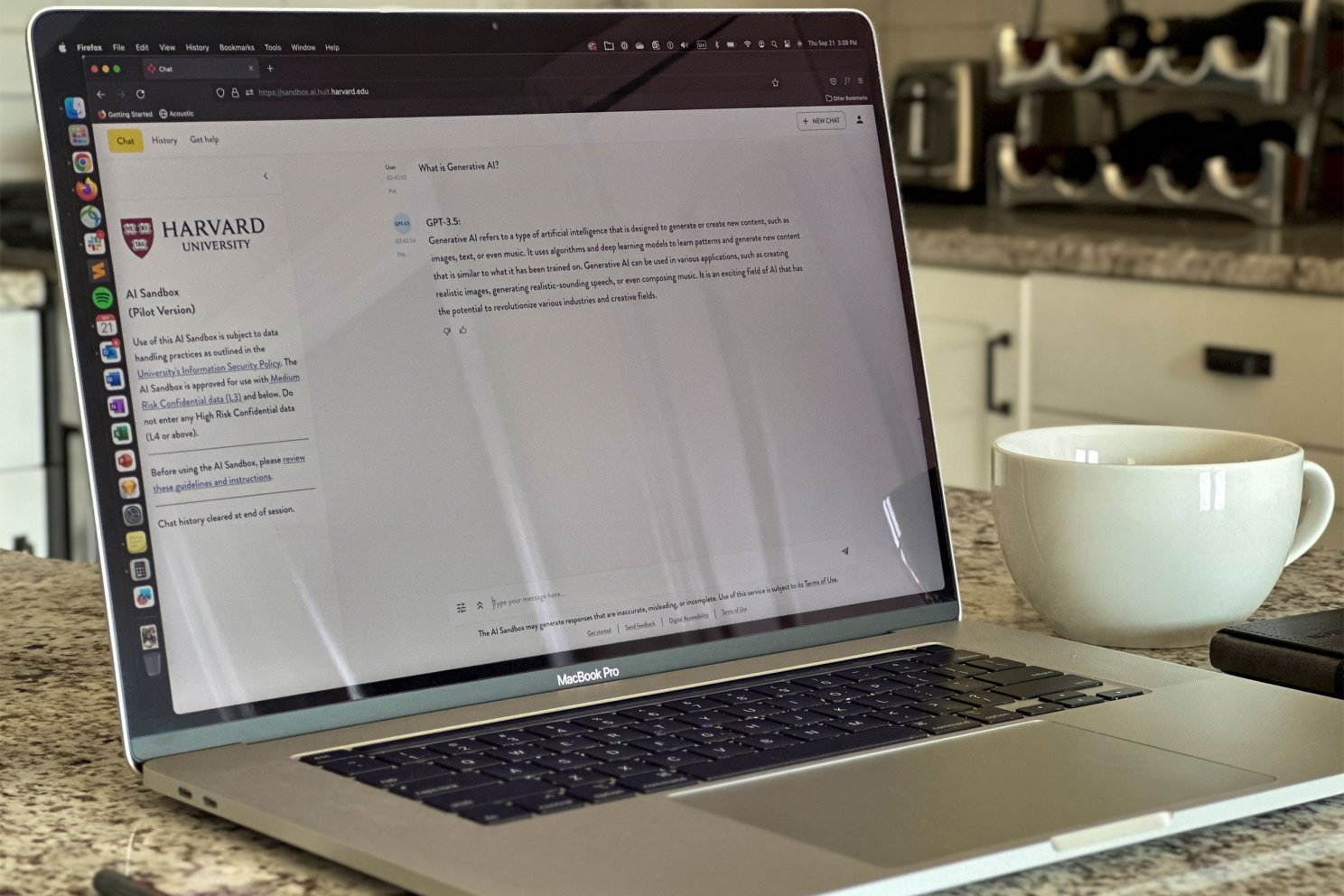

Harvard designs AI sandbox that enables exploration, interaction without compromising security

Generative AI tools such as OpenAI’s ChatGPT, Microsoft’s Bing Chat, and Google’s Bard have rapidly emerged as the most talked-about topic in technology, provoking a range of reactions, from excitement to fear, and sparking conversations about their role in higher education. In July, Harvard announced its initial guidelines for the use of generative AI tools. Strong community demand presented University administrators with a challenge: meeting this emerging need while safeguarding against the security and privacy deficiencies of many consumer tools.

“At Harvard, we want to innovate and be at the vanguard of new technological developments, while ensuring that we honor our commitment to the security and privacy of University data and intellectual property,” said Klara Jelinkova, vice president and University chief information officer.

To address this challenge, Jelinkova, together with Bharat Anand, vice provost for advances in learning (VPAL), and Christopher Stubbs, dean of science in the Faculty of Arts and Sciences (FAS), proposed an idea to a group of faculty and staff advisers: a generative AI “sandbox” environment, designed and developed at Harvard, that would enable exploration of the capabilities of various large language models (LLMs) while mitigating many of the security and privacy risks of consumer tools.

“Our aspiration is to see how we can use generative AI tools and LLMs to elevate, rather than merely preserve or defend, our teaching and learning practices,” explained Anand. “For this, we must be able to try new things, to experiment, to see what one can do with these technologies in new ways. It’s a very important first step here for Harvard.”

“The idea of the sandbox,” Jelinkova added, “is to provide a space for experimentation with multiple LLMs — both commercial and open source — so we can learn more about them to inform our strategic procurement efforts, while meeting an immediate need for our community.”

In late July, a project team — led by Erica Bradshaw, chief technology officer for Harvard, and Emily Bottis, managing director for academic technology at HUIT — was quickly assembled, comprising more than 40 IT professionals in partnership with VPAL, the FAS Division of Science, and faculty and staff advisers from across the University. IT engineers, architects, and administrators came together to design and develop the secure sandbox and supporting materials with the goal of launching a pilot in time for the beginning of the academic year.

“The biggest challenge was meeting the need in the short timeframe, but fortunately we were well positioned to meet this moment,” said Bradshaw. “HUIT’s Emerging Technology and Innovation Program blazed the trail by funding small scale AI experiments that enabled us to prototype and create the sandbox within a matter of weeks.”

“It was invigorating to watch people from across the University coming together to collaborate with a very focused goal and tight timeline,” added Bottis.

The result is an AI sandbox tool with a single interface that lets users switch easily among four LLMs: Azure OpenAI GPT-3.5 and GPT-4, Anthropic Claude 2, and Google PaLM 2 Bison. The prompts and data entered by a user are visible only to that individual; they are not shared with LLM vendors, nor can they be used to train those models. As such, pilot participants are permitted to enter a higher classification of confidential data into the sandbox than into consumer tools.

The pilot formally launched on Sept. 1, with an initial group of 50 faculty participants. Approximately half of the initial requests for access were to simply learn how generative AI works. More specific use cases included researching various topics, writing assistance, feedback summarization, and advanced problem-solving, particularly in STEM fields.

“These technologies are only as good as the creativity of the use cases we envision for them,” said Anand. “The range of ideas is already interesting, even in these early days. They cover use cases such as aggregating anonymized reading summaries from different students into one that reflects their diverse opinions, using LLMs to enhance critical thinking and ideation skills, using them as interactive teaching tools or personalized tutors, refining assessments and quizzes, and enhancing course outline or teaching plans for classes.”

John H. Shaw, vice provost for research, also highlighted the “very positive impact” of the sandbox in the University working group on generative AI in Research & Scholarship. “[The sandbox will] inform and enable University recommendations on effective ways to use generative AI to advance our research activities across so many fields.”

“It’s an exciting time,” added Bottis. “Our job is to provide the tools and expertise to support this innovative work — and the sandbox is our first product in this area.”

Some limitations emerged, including vendor rate restrictions that necessitated a phased rollout. Some pilot participants also highlighted the tendency of LLMs to “hallucinate,” that is, to provide confident, plausible-sounding outputs that are nonetheless false, which underscores the need for discerning use.

In addition to the sandbox, the University will also pursue enterprise agreements with commercial vendors. “In many cases,” said Bradshaw, “consumer generative AI tools with appropriate privacy and security protections may be a more suitable choice. We’re continuing to negotiate with vendors to be able to offer a broader range of tools, and exploring tools where AI is embedded as part of the feature set.”

Jelinkova expects that the University will expand access to the sandbox in the fall semester based on use cases. “By documenting use cases and outcomes, we can use this pilot to inform our broader strategy. Our goal is to learn, and then make that learning visible to faculty and staff leaders across the University who are thinking about how to chart a path forward.”

“This journey promises to be interesting,” said Anand. “And we’re only in the first inning.”