When you do the math, humans still rule

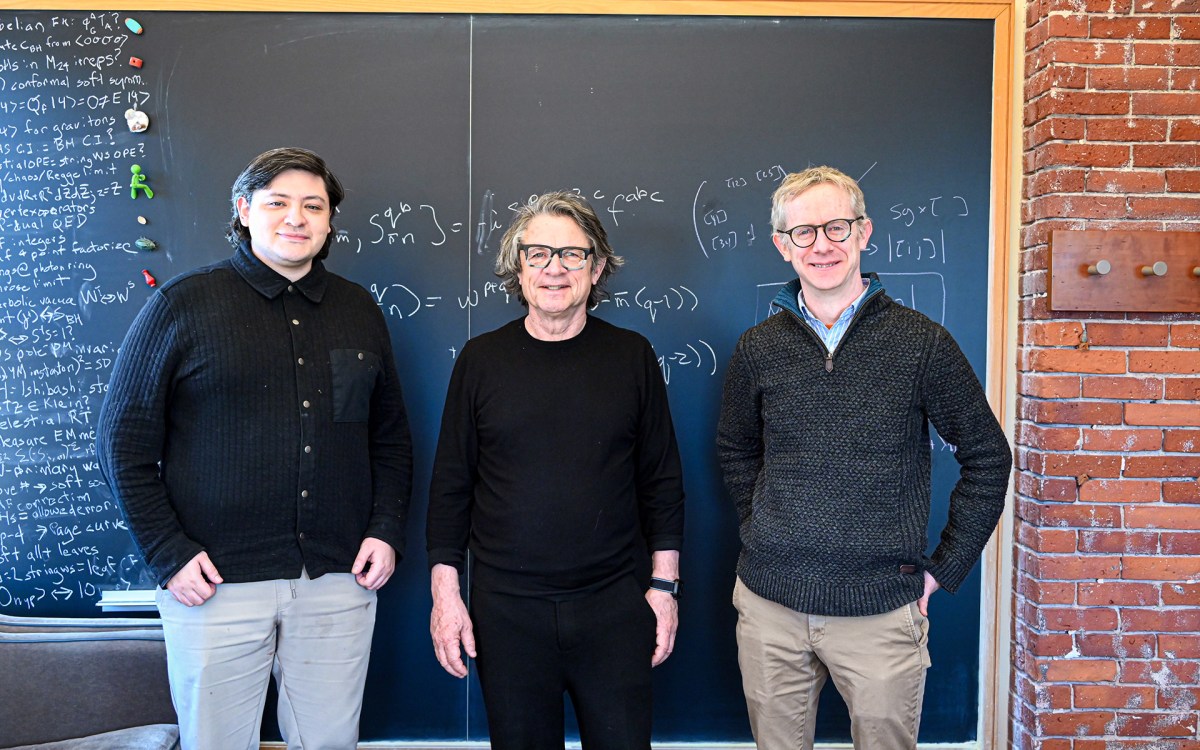

Lauren Williams.

Stephanie Mitchell/Harvard Staff Photographer

Harvard’s Lauren Williams, a MacArthur ‘genius,’ joins international effort to challenge notions of AI supremacy

Have reports of AI replacing mathematicians been greatly exaggerated?

Artificial intelligence has attained an impressive series of feats — solving problems from the International Math Olympiad, conducting encyclopedic surveys of academic literature, and even finding solutions to some longstanding research questions. Yet these systems largely remain unable to match top experts in the conceptual frontiers of research math.

Now a Harvard professor and other world-renowned mathematicians have launched a grand experiment to more clearly define the boundary between artificial and human intelligence. These scholars have challenged AI companies to crack a series of tough problems that the mathematicians themselves recently have solved but kept under wraps. The effort seeks to answer a key question: Where has AI achieved mastery and where does human intelligence still reign supreme?

“This is a tricky question to answer because the capabilities of AI are improving all the time,” said Lauren Williams, Dwight Parker Robinson Professor of Mathematics at Harvard, who recently won a genius grant from the MacArthur Foundation. “But, at least at the moment, AI is not so good at making a creative leap and solving problems far outside the kinds of problems that already have been solved.”

Williams is among a team of 11 mathematicians — including a Fields medalist and two MacArthur geniuses — who are organizing First Proof. The project seeks to create a more objective methodology for evaluating the ability of AI systems to solve research math questions.

Without a doubt, AI systems have made strides in mathematics. In 2024, a system created by Google DeepMind solved problems on the International Math Olympiad at a level on par with a silver medalist.

But not all efforts have been so successful. One recent analysis showed that large language models (LLMs) managed to solve a small fraction of research-level math problems, but were prone to logical errors, fundamental misconceptions, and hallucinations of existing results. Some researchers have concluded that AI tools currently are most useful for assisting with grunt work — such as literature reviews — but not solving big research problems autonomously.

The First Proof project was initiated by Mohammed Abouzaid, a professor of mathematics at Stanford University. He said many of the highly touted demonstrations of AI capabilities in math “did not really reflect my experience as a mathematician.”

Tech companies, he said, tend to focus on results that they can measure with automated, scalable systems. They often recast research questions into forms that can be answered by current technologies — but not necessarily the approaches research mathematicians would take. In addition, much of the research has been conducted by parties with vested interests.

So the team of mathematicians — from institutions including Harvard, Columbia, Duke, Yale, UC Berkeley, and the University of Texas at Austin — decided it was time for an independent evaluation. In December, they met in Berkeley to assemble research problems that they had recently solved but not yet published. Their 10 problems represent a diverse span of mathematics including number theory, algebraic combinatorics, spectral graph theory, symplectic topology, and numerical linear algebra.

The solutions — each no more than about five pages — have been encrypted and stored within a secure depository. The authors publicly unveiled the problems on Feb. 5 and will reveal the solutions on Feb. 13.

Experts will compare the proofs produced by mathematicians against those produced by AI (problems may be solved in more than one way). The organizers plan to issue another set of problems later this year.

In preliminary tests with GPT 5.2 Pro and Gemini 3.0 Deepthink, the authors reported that, “The best publicly available AI systems struggle to answer many of our questions.” Abouzaid said the AI models solved two of the 10 problems in preliminary tests. “We are already learning a lot by seeing which of our 10 questions it can answer,” he said.

In playing with AI tools, Williams found that they seemed superficially useful but became unreliable at deeper levels.

“Whenever I’ve asked AI a question about which I know very little, the answer generally appears helpful and informative,” she said. “But as I ask questions closer to my own expertise, I’ll start seeing mistakes. If I ask questions close to things I’m working on, it will sometimes hallucinate and start telling me, ‘Oh, the answer to that question is in this paper that I wrote’ — except it’s not a paper that I wrote. Sometimes it will invent references, and the only reason I know they’re not real is because they said I was the author — and I never wrote such a paper.”

Williams said AI sometimes distorted her query. Instead of answering her original question, it shifted to another question that could be answered from the existing literature.

“It can be quite good at mimicking things that have been done before, or putting together some known results to get to a statement that follows from them,” said Williams. “If it’s something algorithmic, it’s excellent.”

But those questions do not represent the forefront of the field.

Typically, research math involves three phases: coming up with a good question, developing a framework for attacking the problem, and solving it. The first two steps remain beyond the reach of AI, so the challenge aims to test only the final one — finding solutions to already-defined problems.

Another co-author, Martin Hairer, professor of pure mathematics at EPFL in Switzerland and Imperial College London and winner of the 2014 Fields Medal, said the group sought “to push back a bit on the narrative that ‘math has been solved’ just because some LLM managed to solve a bunch of Math Olympiad problems.”

“As of now, this idea of mathematicians being replaced by AI is complete nonsense in my opinion,” said Hairer. “Maybe this will change in the future, but I find it hard to believe that the type of models we’re seeing at the moment will suddenly start producing genuinely new insights.”