Technically, it’s possible. Ethically, it’s complicated.

“Students are basically getting a flavor of humanistic education inside their computer science courses,” said Matthew Kopec, program director for Embedded EthiCS.

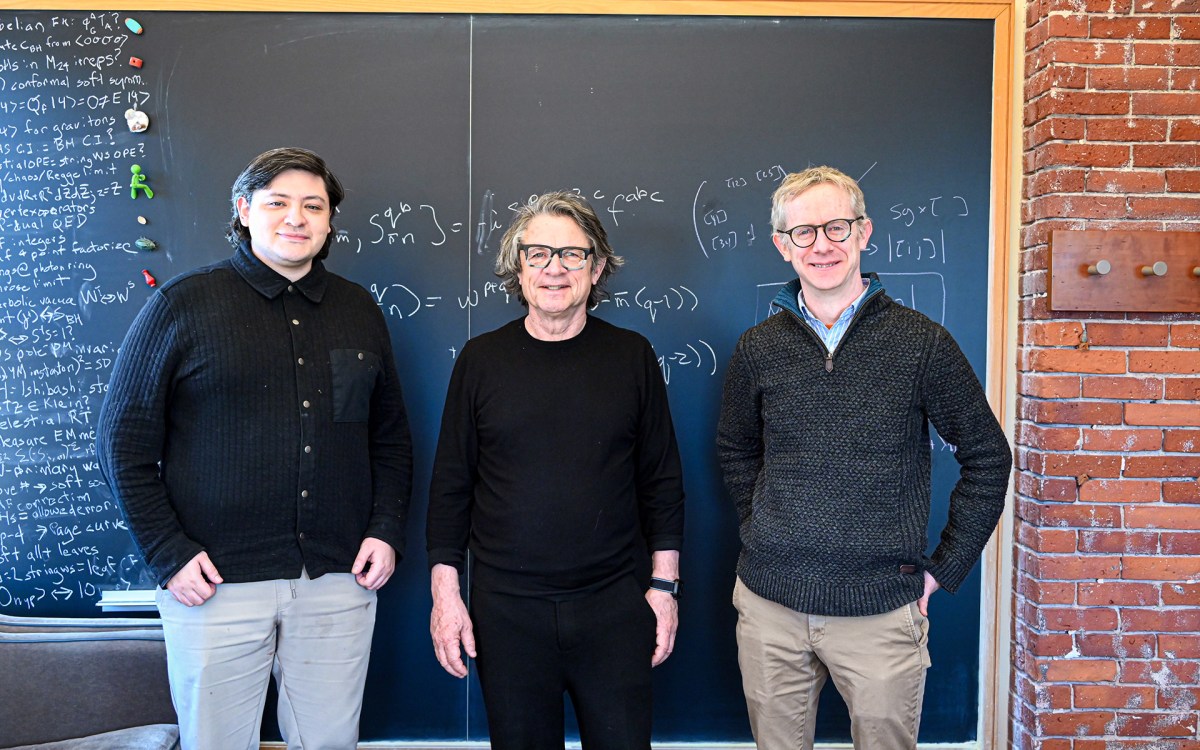

Stephanie Mitchell/Harvard Staff Photographer

Surge in AI use heightens demand for Harvard program that examines social consequences of computer science work

A San Francisco pedestrian was severely injured in 2023 when a driver struck her, throwing her into the path of a self-driving car that dragged her 20 feet while attempting to pull over. In the complex circumstances and legal fallout of the crash, educators saw a learning opportunity for future engineers to grapple with the ethical challenges of emerging technologies.

“We framed the case as a cautionary tale and a challenge for improving design in cases where collisions are unavoidable,” said Michael Pope, who led a discussion on the crash for students in “Planning and Learning Methods in AI,” a course in the John A. Paulson School of Engineering and Applied Sciences. “How should we design systems for these kinds of unpredictable scenarios? How should we think about the training regime and the learning models we’re using in these systems?”

Pope is a postdoctoral fellow with Embedded EthiCS, a program launched in 2017 that brings philosophy scholars into computer science classrooms to help students grapple with the ethical and social implications of their work. This year brings a new emphasis on the ethics of AI.

“Students are basically getting a flavor of humanistic education inside their computer science courses,” said Matthew Kopec, program director for Embedded EthiCS. “Having conversations in the computer science classroom about these topics that anchor into their core values, where they can actually talk with each other, learn that different individuals have different values, and defend their values. That’s not something that usually happens in a computer science classroom.”

‘I’m just building a robot here’

Courses tackle a range of issues. An ethics module from “Introduction to Computational Linguistics and Natural-Language Processing” had students discuss the responsibilities of companies that create AI chatbots. In “Introduction to Robotics,” they debated the future of work as automation and AI replace certain jobs. In “Advanced Computer Vision” last year, students examined deepfakes and how the same AI technology that’s used to create online misinformation could also be used to authenticate content and restore digital trust.

In “CS50: Introduction to Computer Science,” where engaging in full-group discussions is difficult due to the class size, philosophers worked with the teaching team to insert short ethics explainers and reflection questions into problem sets. One problem set asked students to build a website that included a blurb about ADA accessibility. Another about filtering and recovering file data had a question about why it might be necessary to blur images; students were then asked to describe a scenario in which data recovery might violate someone’s privacy, and how that work could be done responsibly.

David Malan, who teaches the popular entry-level course, said his motivation for incorporating ethics material was to get students thinking about whether the fact that they can do certain things, technologically, means that they should.

“Too often our students, even after they graduate and are in the industry, are perhaps put in situations where they’re asked to do something by their manager or by the company and they don’t necessarily feel they’re in a position to question or challenge it,” Malan said. “What we wanted to do within the class is create an environment in which students are prompted to think well in advance about how and whether to solve certain problems now that they have the technical savvy to do so.”

Huzeyfe Demirtas, a philosophy postdoctoral fellow in Embedded EthiCS, said many of his computer science students are excited to engage with the philosophy curriculum. But some still view computer science as separate from ethics or social responsibility. Those are the students he’s particularly interested in reaching.

“It’s not so infrequently that we hear things like, ‘Well, I’m just coding’ or ‘I’m just building a robot here,’” said Demirtas, whose own research focuses on questions of moral responsibility, free will, and the metaphysics of causation. “That’s a bit misguided. From simple things like building emojis all the way up to large language models, self-driving cars, and autonomous weapon systems, you name it, we’ll come up with some social, ethical implications.”

Demirtas taught an ethics module this month for the computer science course “Systems Security,” in which students discussed how a company should respond to an attack on its system from an outside hacker. Is it morally OK to booby-trap your own data with a bug that would destroy a hacker’s mainframe? What are the ethics of hacking the hacker to retrieve stolen data?

Demand explodes with ChatGPT use

Kopec said interest in Embedded EthiCS has skyrocketed among computer science faculty. In the early days of the program, it took considerable effort on the part of co-founders Barbara Grosz and Alison Simmons to convince computer science faculty to add a philosophy module to their already packed curricula, he said. Now, demand for the modules outstrips the program’s capacity to run them.

“I haven’t run into a CS professor who doesn’t think that computer science has ethical implications anymore,” Kopec said. “Because of the rapid change with ChatGPT and how it upended everybody’s life, everybody is now convinced that we need to rush to get more ethical reasoning skills and more ethical reflection into the student populations, especially students who are learning computer science. Now the question is, ‘How do you do it, and how do you do it quickly?’”

It’s a question that other colleges and universities are considering as well. Embedded EthiCS has helped other institutions, including Stanford University, University of Toronto, Technion – Israel Institute of Technology, and University of Nebraska–Lincoln, launch responsible computing programs. With National Science Foundation funding, Harvard has also helped nine members of the Computing Alliance of Hispanic-Serving Institutions, including two California State University campuses and New Mexico State University, pilot ethics programs focused on AI.

Kopec recommends the model of inserting ethics modules into existing computer science curricula because it can be implemented quickly enough to keep pace with AI development, without waiting for faculty hiring, curriculum building, or course approvals.

“Over the course of one week we can get all these teams together, train them up really quickly on what an approach like ours is, and then they can leave with all the tools that they need to get a program like that up and running at their own institution,” he said.

While the program gives computer science students a new perspective, it also offers an opportunity for the philosophy researchers to discover new areas of interest. Demirtas’ forthcoming paper examines the so-called “Explainable AI” problem: We often don’t know how complex AI systems make decisions, so what are the ethical implications when those enigmatic “black boxes” are used to make policing or healthcare decisions?

“I think it’s definitely a two-way street. The research in tech ethics is just getting better overall. Research in philosophy is definitely improving the more we interact with computer scientists,” Kopec said. “I also think that various areas of computer science research will be better informed, because those computer scientists are interacting with philosophers when they’re doing the research.”