What will AI mean for humanity?

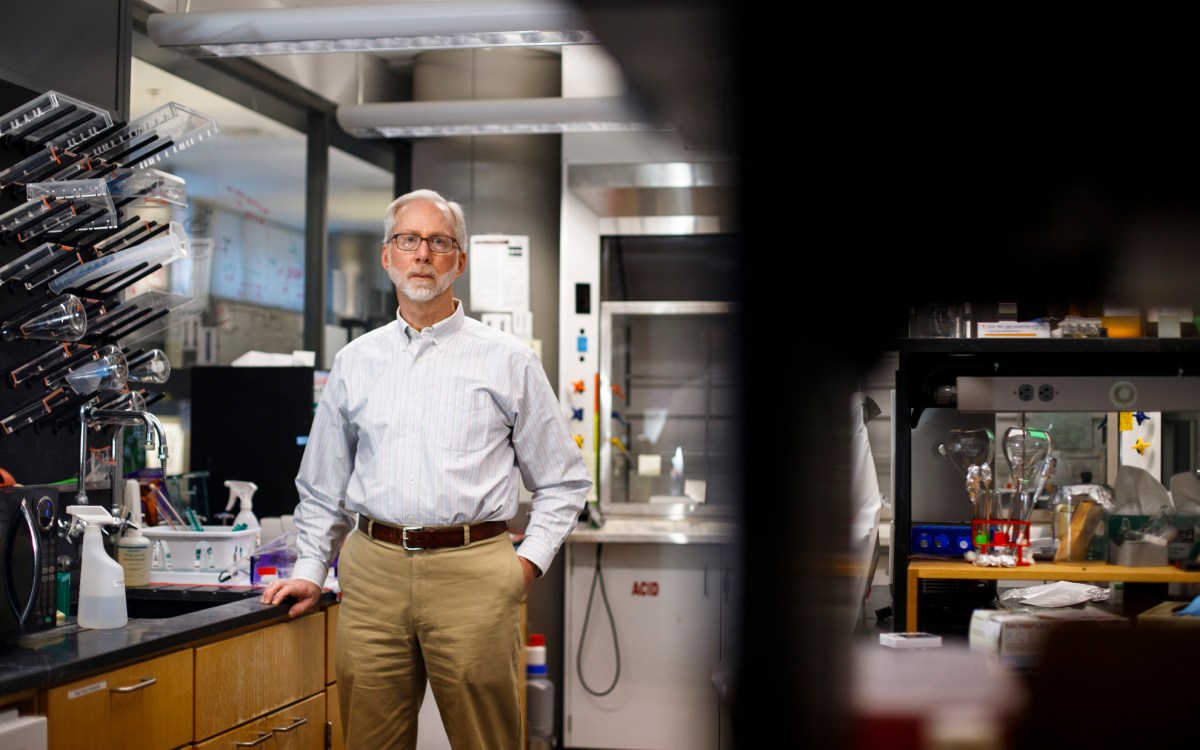

E. Glen Weyl (second from right) shares his more optimistic view of technology during the panel discussion “How Is Digital Technology Shaping the Human Soul?” Panelists included Moira Weigel (from right), Nataliya Kos’myna, Brandon Vaidyanathan, and moderator Ian Marcus Corbin.

Photos by Veasey Conway/Harvard Staff Photographer

Scholars from range of disciplines see red flags, possibilities ahead

What does the rise of artificial intelligence mean for humanity? That was the question at the core of “How Is Digital Technology Shaping the Human Soul?,” a panel discussion last week that drew experts from fields ranging from computer science to comparative literature.

The Oct. 1 event was the first from the Public Culture Project, a new initiative based in the office of the dean of arts and humanities. Program Director Ian Marcus Corbin, a philosopher on the neurology faculty of Harvard Medical School, said the project’s goal was putting “humanists and humanist thinking at the center of the big conversations of our age.”

“Are we becoming tech people?” Corbin asked. The answers were varied.

“We as humanity are excellent at creating different tools that support our lives,” said Nataliya Kos’myna, a research scientist with the MIT Media Lab. These tools are good at making “our lives longer, but not always making our lives the happiest, the most fulfilling,” she continued, listing examples from the typewriter to the internet.

Generative AI, specifically ChatGPT, is the latest example of a tool that essentially backfires when it comes to promoting human happiness, she suggested.

She shared details of a study of 54 students from across Greater Boston who were asked to write an essay and whose brain activity was monitored by electroencephalography.

One group of students was allowed to use ChatGPT, another was permitted access to the internet and Google, while a third group was restricted to their own intelligence and imagination. The topics — such as “Is there true happiness?” — did not require any previous or specialized knowledge.

The results were striking: The ChatGPT group demonstrated “much less brain activity.” In addition, their essays were very similar, focusing primarily on career choices as the determinants of happiness.

The internet group tended to write about giving, while the third group focused more on the question of true happiness.

Questions illuminated the gap. All the participants were asked whether they could quote a line from their own essays, one minute after turning them in.

“Eighty-three percent of the ChatGPT group couldn’t quote anything,” Kos’myna said, compared to 11 percent from the second and third groups. ChatGPT users “didn’t feel much ownership” of their work. They “didn’t remember, didn’t feel it was theirs.”

“Your brain needs struggle,” Kos’myna said. “It doesn’t bloom” when a task is too easy. In order to learn and engage, a task “needs to be just hard enough for you to work for this knowledge.”

E. Glen Weyl, research lead with Microsoft Research Special Projects, had a more optimistic view of technology. “Just seeing the problems disempowers us,” he said, urging scientists instead to “redesign systems.”

He noted that much of the current focus on technology is on its commercial aspect. “Well, the only way they can make money is by selling advertising,” he said, paraphrasing prevailing wisdom before countering it. “I’m not sure that’s the only way this can be structured.”

Citing works such as Steven Pinker’s new book, “When Everyone Knows That Everyone Knows,” Weyl talked about the idea of community — and how social media is more focused on groups than on individuals.

“If we thought about engineering a feed about these notions, you might be made aware of things in your feed that come from different members of your community. You would have a sense that everyone is hearing that at the same time.”

This would lead to a “theory of mind” of those other people, he explained, opening our sense of shared experiences, like those shared by attendees at a concert.

To illustrate how that could work for social media, he brought up Super Bowl ads. These, said Weyl, “are all about creating meaning.” Rather than sell individual drinks or computers, for example, we are told “Coke is for sharing. Apple is for rebels.”

“Creating a common understanding of something leads us to expect others to share the understanding of that thing,” he said.

To reconfigure tech in this direction, he acknowledged, “requires taking our values seriously enough to let them shape” social media. It is, however, a promising option.

Moira Weigel, an assistant professor in comparative literature at Harvard, took the conversation back before going forward, pointing out that many of the questions discussed have captivated humans since the 19th century.

Weigel, who is also a faculty associate at the Berkman Klein Center for Internet and Society, centered her comments on five questions, which are also at the core of her introductory class, “Literature and/as AI: Humanity, Technology, and Creativity.”

“What is the purpose of work?” she asked, amending her query to add whether a “good” society should try to automate all work. “What does it mean to have, or find, your voice? Do our technologies extend our agency — or do they escape our control and control us? Can we have relationships with things that we or other human beings have created? What does it mean to say that some activity is merely technical, a craft or a skill, and when is it poiesis” or art?

Looking at the influence of large language models in education, she said, “I think and hope LLMs are creating an interesting occasion to rethink what is instrumental. They scramble our perception of what education is essential.” LLMs “allow us to ask how different we are from machines — and to claim the space to ask those questions.”

Brandon Vaidyanathan, a professor of sociology at Catholic University of America, also saw possibility.

Vaidyanathan, the panel’s first speaker, began by noting the difference between science and technology, citing the philosopher Martin Heidegger, whose concept of “enframing” has tech viewing everything as “product.”

Vaidyanathan noted that his experience suggests scientists take a different view.

“Underlying what we might call scientific intelligence there is a deeper, spiritual intelligence — why things matter,” he said.

Instead of the “domination, extraction, and fragmentation” most see driving tech (and especially AI), he noted that scientists tend toward “the three principles of spiritual intelligence: reverence, receptivity, and reconnection.” More than 80 percent of them “encounter a deep sense of respect for what they’re studying,” he said.

Describing a researcher studying the injection needle of the salmonella bacteria with a “deep sense of reverence,” he noted, “You’d have thought this was the stupa of a Hindu temple.

“Tech and science can open us up to these kind of spiritual experiences,” Vaidyanathan continued.

“Can we imagine the development of technology that could cultivate a sense of reverence rather than domination?” To do that, he concluded, might require a “disconnect on a regular basis.”