Does AI understand?

Illustration by Liz Zonarich/Harvard Staff

It may be getting smarter, but it’s not thinking like humans (yet), say experts

Imagine an ant crawling in sand, tracing a path that happens to look like Winston Churchill. Would you say the ant created an image of the former British prime minister? According to the late Harvard philosopher Hilary Putnam, most people would say no: The ant would need to know about Churchill, and lines, and sand.

The thought experiment has renewed relevance in the age of generative AI. As artificial intelligence firms release ever-more-advanced models that reason, research, create, and analyze, the meanings behind those verbs get slippery fast. What does it really mean to think, to understand, to know? The answer has big implications for how we use AI, and yet those who study intelligence are still reckoning with it.

“When we see things that speak like humans, that can do a lot of tasks like humans, write proofs and rhymes, it’s very natural for us to think that the only way that thing could be doing those things is that it has a mental model of the world, the same way that humans do,” said Keyon Vafa, a postdoctoral fellow at the Harvard Data Science Initiative. “We as a field are making steps trying to understand, what would it even mean for something to understand? There’s definitely no consensus.”

In human cognition, expression of a thought implies understanding of it, said senior lecturer on philosophy Cheryl Chen. We assume that someone who says “It’s raining” knows about weather, has experienced the feeling of rain on the skin and perhaps the frustration of forgetting to pack an umbrella. “For genuine understanding,” Chen said, “you need to be kind of embedded in the world in a way that ChatGPT is not.”

Still, today’s artificial intelligence systems can seem awfully convincing. Large language models, like many other machine learning systems, are built from neural networks: computational models that pass information through layers of artificial neurons loosely modeled after the human brain.

“Neural networks have numbers inside them; we call them weights,” said Stratos Idreos, Gordon McKay Professor of Computer Science at SEAS. “Those numbers start by default randomly. We get data through the system, and we do mathematical operations based on those weights, and we get a result.”

He gave the example of an AI trained to identify tumors in medical images. You feed the model hundreds of images that you know contain tumors, and hundreds of images that don’t. Based on that information, can the model correctly determine if a new image contains a tumor? If the result is wrong, you give the system more data, and you tinker with the weights, and slowly the system converges on the right output. It might even identify tumors that doctors would miss.
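The loop Idreos describes (random starting weights, data passed through, weights adjusted when the answer is wrong) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a medical imaging system: the "images" are synthetic two-number examples, the model is a single artificial neuron rather than a layered network, and the adjustment rule is ordinary gradient descent, which the article does not name. All function names here are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Squash a score into a probability between 0 and 1 (numerically stable)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_data(n, rng):
    """Synthetic stand-in for labeled images: two features per example.

    Label 1 ("tumor") clusters around (+2, +2); label 0 around (-2, -2).
    """
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 2.0 if label == 1 else -2.0
        features = [rng.gauss(center, 1.0), rng.gauss(center, 1.0)]
        data.append((features, label))
    return data


def predict(weights, bias, features):
    """Do the 'mathematical operations based on those weights'."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train(data, rng, epochs=50, lr=0.1):
    """Start from random weights and nudge them after every example."""
    weights = [rng.uniform(-1.0, 1.0) for _ in range(2)]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label
            # Gradient descent step: move each weight against its error.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def accuracy(weights, bias, data):
    """Fraction of examples whose predicted label matches the true label."""
    correct = sum((predict(weights, bias, f) >= 0.5) == (y == 1) for f, y in data)
    return correct / len(data)


rng = random.Random(0)          # fixed seed so runs are repeatable
train_set = make_data(200, rng)
test_set = make_data(200, rng)  # "new images" the model has not seen
weights, bias = train(train_set, rng)
test_accuracy = accuracy(weights, bias, test_set)
print(f"accuracy on unseen examples: {test_accuracy:.2f}")
```

Nothing in the trained weights refers to tumors, or to anything else; they are just numbers that happen to separate the two groups of examples.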

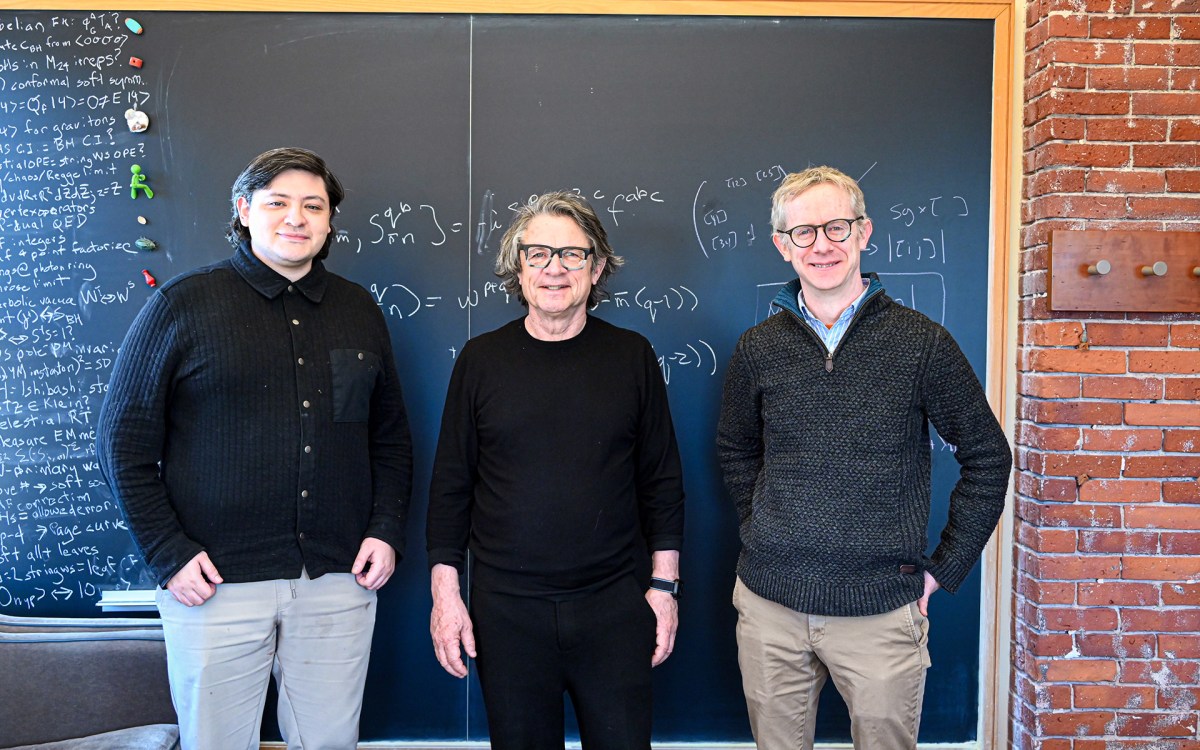

Keyon Vafa.

Niles Singer/Harvard Staff Photographer

Vafa devotes much of his research to putting AI through its paces, to figure out both what the models actually understand and how we would even know for sure. His criteria come down to whether the model can reliably demonstrate a world model, a stable yet flexible framework that allows it to generalize and reason even in unfamiliar conditions.

Sometimes, Vafa said, it sure seems like a yes.

“If you look at large language models and ask them questions that they presumably haven’t seen before — like, ‘If I wanted to balance a marble on top of an inflatable beach ball on top of a stove pot on top of grass, what order should I put them in?’ — the LLM would answer that correctly, even though that specific question wasn’t in its training data,” he said. That suggests the model does have an effective world model — in this case, the laws of physics.

But Vafa argues the world models often fall apart under closer inspection. In a paper, he and a team of colleagues trained an AI model on street directions around Manhattan, then asked it for routes between various points. Ninety-nine percent of the time, the model spat out accurate directions. But when they tried to build a cohesive map of Manhattan out of its data, they found the model had invented roads, leapt across Central Park, and traveled diagonally across the city’s famously right-angled grid.

“When I turn right, I am given one map of Manhattan, and when I turn left, I’m given a completely different map of Manhattan,” he said. “Those two maps should be coherent, but the AI is essentially reconstructing the map every time you take a turn. It just didn’t really have any kind of conception of Manhattan.”
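One way to picture the kind of check described above is to collect every step a model takes across its routes, treat each step as a claimed street, and compare those claims against the real street grid. The sketch below is an illustrative assumption, not the method used in Vafa's paper: the 3-by-3 grid, the hard-coded routes, and the helper names `grid_edges` and `implied_edges` are all hypothetical.

```python
def grid_edges(width, height):
    """True street map: intersections joined only to right-angle neighbors."""
    edges = set()
    for x in range(width):
        for y in range(height):
            if x + 1 < width:
                edges.add(frozenset({(x, y), (x + 1, y)}))
            if y + 1 < height:
                edges.add(frozenset({(x, y), (x, y + 1)}))
    return edges


def implied_edges(routes):
    """Every consecutive pair of intersections in a route implies a street."""
    edges = set()
    for route in routes:
        for a, b in zip(route, route[1:]):
            edges.add(frozenset({a, b}))
    return edges


true_map = grid_edges(3, 3)

# Hypothetical model output: each route is a list of (x, y) intersections.
# All three routes end where they were asked to, so each looks fine alone.
routes = [
    [(0, 0), (1, 0), (2, 0), (2, 1)],
    [(0, 2), (0, 1), (1, 1)],
    [(0, 0), (1, 1), (2, 2)],  # cuts diagonally across the grid
]

# Streets the routes rely on that do not exist in the true map.
invented = implied_edges(routes) - true_map
for edge in sorted(tuple(sorted(e)) for e in invented):
    print("invented street:", edge)
```

Each route reaches its destination, yet the map they jointly imply contains streets that do not exist, which is the gap between accurate directions and a coherent world model.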

Rather than operating from a stable understanding of reality, he argues, AI memorizes countless rules and applies them to the best of its ability, a kind of slapdash approach that looks intentional most of the time but occasionally reveals its fundamental incoherence.

Sam Altman, the CEO of OpenAI, has said we will reach AGI — artificial general intelligence, which can do any cognitive task a person can — “relatively soon.” Vafa is keeping his eye out for more elusive evidence: that AIs reliably demonstrate consistent world models — in other words, that they understand.

“I think one of the biggest challenges about getting to AGI is that it’s not clear how to define it,” said Vafa. “This is why it’s important to find ways to measure how well AI systems can ‘understand’ or whether they have good world models — it’s hard to imagine any notion of AGI that doesn’t involve having a good world model. The world models of current LLMs are lacking, but once we know how to measure their quality, we can make progress toward improving them.”

Idreos’ team at the Data Systems Laboratory is developing more efficient approaches so AI can process more data and reason more rigorously. He sees a future where specialized, custom-built models solve important problems, such as identifying cures for rare diseases — even if the models don’t know what disease is. Whether or not that counts as understanding, Idreos said, it certainly counts as useful.