Know how those tech moguls want us to go to Mars? Ignore them.

Astrophysicist says they may have more money than you, but they don’t know anything more about the future than anyone else

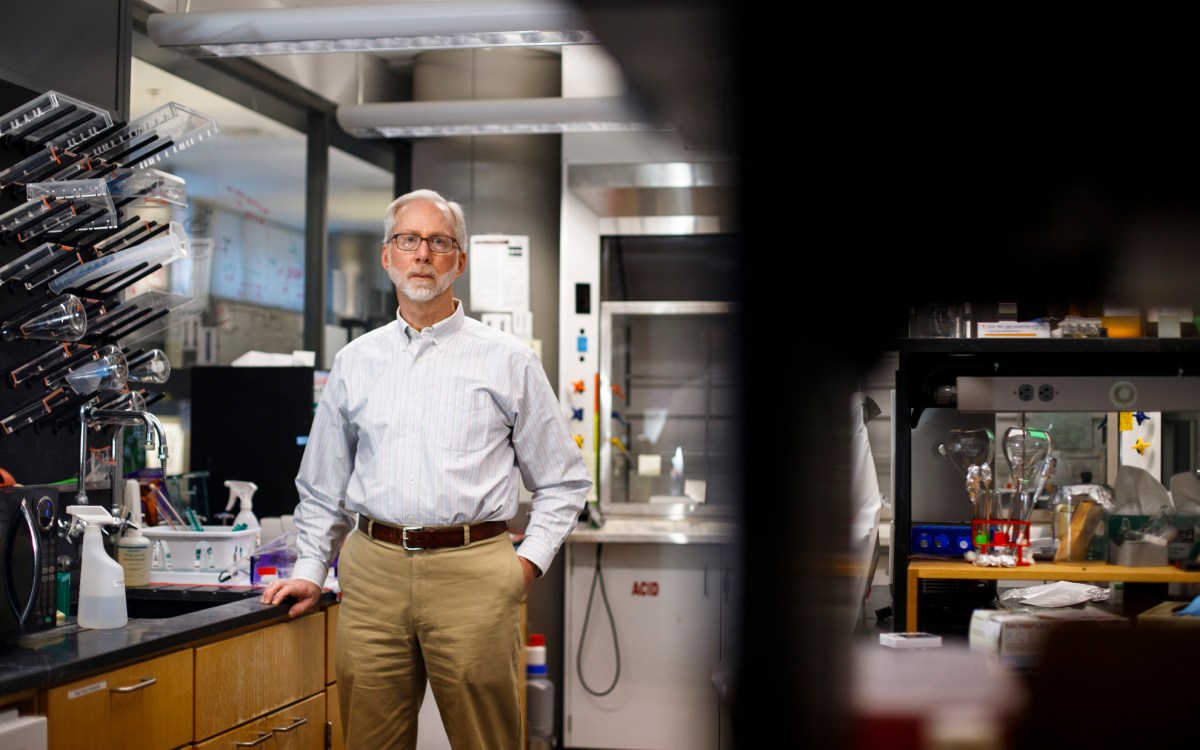

Adam Becker.

Photos by Niles Singer/Harvard Staff Photographer

Tech billionaires promoting space exploration and colonization as the solution to humanity’s problems should be ignored, says Adam Becker.

These CEOs have little to no expertise in engineering or astrophysics and have little to support their arguments beyond money, according to Becker, an astrophysicist, journalist, and author of the new book “More Everything Forever: AI Overlords, Space Empires, and Silicon Valley’s Crusade to Control the Fate of Humanity.”

“They think that money is some sort of metric that tells you how worthwhile somebody is and how smart they are, and that if somebody else has less money, that means that you don’t have to listen to them,” said Becker, who was joined at a recent Harvard Science Book Talk by Moira Weigel, assistant professor of comparative literature and faculty associate at the Berkman Klein Center for Internet and Society, and Max Gladstone, an award-winning science fiction and fantasy writer.

Becker earned a Ph.D. in computational cosmology from the University of Michigan in 2012. He said that as a child he also thought the future was among the stars.

“I didn’t question that assumption for a long time,” he told the audience. “As I got older, I learned more and realized, ‘Oh, that’s not happening. We’re not going to go to space and certainly going to space is not going to make things better.’”

In his book, Becker dissects the “baseless fantasies” promulgated by tech CEO billionaires, futurists, and philosophers. Each chapter features key figures, including philosopher Peter Singer, effective altruist William MacAskill, and futurist and former tech entrepreneur Ray Kurzweil, who believe they can optimize what comes next for humanity. Some, like SpaceX CEO Elon Musk, have the funds to pursue those beliefs.

Originally, Becker believed the subcultures he focuses on in his book — rationalists, effective altruists, and those who believe in the singularity (the point at which tech essentially blends human and machine) — were “mostly harmless.” That belief changed over time.

“People were taking them seriously. That scares me and also I was deeply annoyed because if you know more about these areas, it becomes clear that they have no idea what they’re talking about,” he said.

Becker alluded to Silicon Valley tech billionaires such as Musk and Jeff Bezos, who he said futilely try to “use their money to run as far as they like from their fears.” They have accumulated wealth because they need control in order to feel safe, he argued.

“You end up accumulating more and more money than you could ever possibly spend in one lifetime and more power than any one person should have,” he said. “That’s not enough and you need more.”

Becker also argued that there is “something very dualist and haunted” about the way the subjects of his book view the mind. Kurzweil, for instance, began exploring after his father’s death the possibility that AI will one day be capable of collecting memories and replicating the human mind.

These people believe that “the body is this thing that the mind is unfortunately dependent upon rather than us being our bodies,” Becker said.

“Our bodies are not like space suits for our nervous systems or for our minds,” he said. “They are what we are and instead, there is this horror with the flesh that is just exuded by all of this rhetoric.”

While answering questions from the audience, Becker reiterated pointed criticisms of tech CEO billionaires who claim to have productive visions of the future.

“I think that they have something that sounds cool, that they believe in, that they think is a vision of the future, because they’re not used to thinking anymore. They’re used to vibing, and this might be why they’re so easily fooled into thinking that ChatGPT and other LLMs are actually thinking as opposed to producing extruded, homogenized, thought-like products,” he said.