Harvard Law School’s Bonnie Docherty attended the U.N. General Assembly, where the first-ever resolution on “killer robots” was adopted.

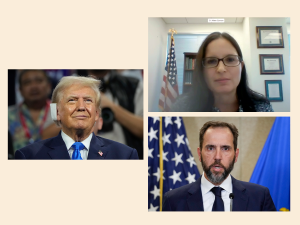

Courtesy of Bonnie Docherty

‘Killer robots’ are coming, and U.N. is worried

Human rights specialist lays out legal, ethical problems of military weapons systems that attack without human guidance

Long the stuff of science fiction, autonomous weapons systems, known as “killer robots,” are poised to become a reality, thanks to the rapid development of artificial intelligence.

In response, international organizations have been intensifying calls for limits or even outright bans on their use. The U.N. General Assembly in November adopted the first-ever resolution on these weapons systems, which can select and attack targets without human intervention.

To shed light on the legal and ethical concerns they raise, the Gazette interviewed Bonnie Docherty, lecturer on law at Harvard Law School’s International Human Rights Clinic (IHRC), who attended some of the U.N. meetings. Docherty is also a senior researcher in the Arms Division of Human Rights Watch. This interview has been condensed and edited for length and clarity.

What exactly are killer robots? To what extent are they a reality?

Killer robots, or autonomous weapons systems to use the more technical term, are systems that choose a target and fire on it based on sensor inputs rather than human inputs. They have been under development for a while but are rapidly becoming a reality. We are increasingly concerned about them because weapons systems with significant autonomy over the use of force are already being used on the battlefield.

What are those? Where have they been used?

There’s a fine line between what counts as a killer robot and what doesn’t. Some systems that were used in Libya and others that have been used in [the ethnic and territorial conflict between Armenia and Azerbaijan over] Nagorno-Karabakh show significant autonomy in the sense that they can operate on their own to identify a target and to attack.

They’re called loitering munitions, and they are increasingly using autonomy that allows them to hover above the battlefield and wait to attack until they sense a target. Whether systems are considered killer robots depends on specific factors, such as the degree of human control, but these weapons show the dangers of autonomy in military technology.

What are the ethical concerns posed by killer robots?

The ethical concerns are very serious. Delegating life-and-death decisions to machines crosses a red line for many people. It would dehumanize violence and boil down humans to numerical values.

There’s also a serious risk of algorithmic bias: machines could discriminate against people based on race, gender, disability, and so forth, because they may be intentionally programmed to look for certain criteria or may unintentionally become biased. There’s ample evidence that artificial intelligence can become biased. We in the human-rights community are very concerned about this being used in machines that are designed to kill.

What are the legal concerns?

There are also very serious legal concerns, such as the inability of machines to distinguish soldiers from civilians. They’re going to have particular trouble doing so in a climate where combatants mingle with civilians.

Even if the technology can overcome that problem, they lack human judgment. That is important for what’s called the proportionality test, where you’re weighing whether civilian harm is greater than military advantage.

That test requires a human to make an ethical and legal decision. That’s a judgment that cannot be programmed into a machine because there are an infinite number of situations that happen on the battlefield. And you can’t program a machine to deal with an infinite number of situations.

There is also concern about the lack of accountability.

We’re very concerned about the use of autonomous weapons systems falling into an accountability gap because, obviously, you can’t hold the weapon system itself accountable.

It would also be legally challenging and arguably unfair to hold an operator responsible for the actions of a system that was operating autonomously.

There are also difficulties with holding weapons manufacturers responsible under tort law. There is wide concern among states and militaries and other people that these autonomous weapons could fall through a gap in responsibility.

We also believe that the use of these weapons systems would undermine existing international criminal law by creating a gap in the framework; it would create something that’s not covered by existing criminal law.

There have been efforts to ban killer robots, but they have been unsuccessful so far. Why is that?

There are certain countries that oppose any action to address the concerns these weapons raise, Russia in particular. Some countries, such as the U.S. and the U.K., have supported nonbinding rules. We believe that a binding treaty is the only answer to dealing with such grave concerns.

Most of the countries that have sought either nonbinding rules or no action whatsoever are those that are in the process of developing the technology and clearly don’t want to give up the option to use it down the road.

There could be several reasons why it has been challenging to ban these weapons systems. These are weapons systems that are in development as we speak, unlike landmines and cluster munitions that had already existed for a while when they were banned. We could show documented harm with landmines and cluster munitions, and that is a factor that moves people to action — when there’s already harm.

In the case of blinding lasers, it was a pre-emptive ban [to ensure they will be used only on optical equipment, not on military personnel] so that is a good parallel for autonomous weapons systems, although these weapons systems are a much broader category. There’s also a different political climate right now. Worldwide, there is a much more conservative political climate, which has made disarmament more challenging.

What are your thoughts on the U.S. government’s position?

We believe it falls short of what a solution should be. We need legally binding rules that are much stronger than what the U.S. government is proposing. They need to include prohibitions of certain kinds of autonomous weapons systems, and they need to be obligations, not simply recommendations.

There was a recent development at the U.N. in the decade-long effort to ban these weapons systems.

The disarmament committee, the U.N. General Assembly’s First Committee on Disarmament and International Security, adopted in November by a wide margin (164 states in favor and five against) a resolution calling on the U.N. secretary-general to gather the opinions of states and civil society on autonomous weapons systems.

Although it seems like a small step, it’s a crucial step forward. It changes the center of the discussion to the General Assembly from the Convention on Conventional Weapons (CCW), where progress has been very slow and has been blocked by Russia and other states. The U.N. General Assembly (UNGA) includes more states and operates by voting rather than consensus.

Many states, over 100, have said that they support a new treaty that includes prohibitions and regulations on autonomous weapons systems. That support and the increased use of these systems in the real world have converged to drive action on the diplomatic front.

The secretary-general has said that by 2026 he would like to see a new treaty. A treaty emerging from the UNGA could consider a wider range of topics, such as human rights, law, and ethics, and not just be limited to humanitarian law. We’re very hopeful that this will be a game-shifter in the coming years.

What would an international ban on autonomous weapons systems entail, and how probable is it that this will happen soon?

We are calling for a treaty that has three parts to it. One is a ban on autonomous weapons systems that lack meaningful human control. We are also calling for a ban on autonomous weapons systems that target people because they raise concerns about discrimination and ethical challenges. The third prong is that we’re calling for regulations on all other autonomous weapons systems to ensure that they can only be used within a certain geographic or temporal scope. We’re optimistic that states will adopt such a treaty in the next few years.