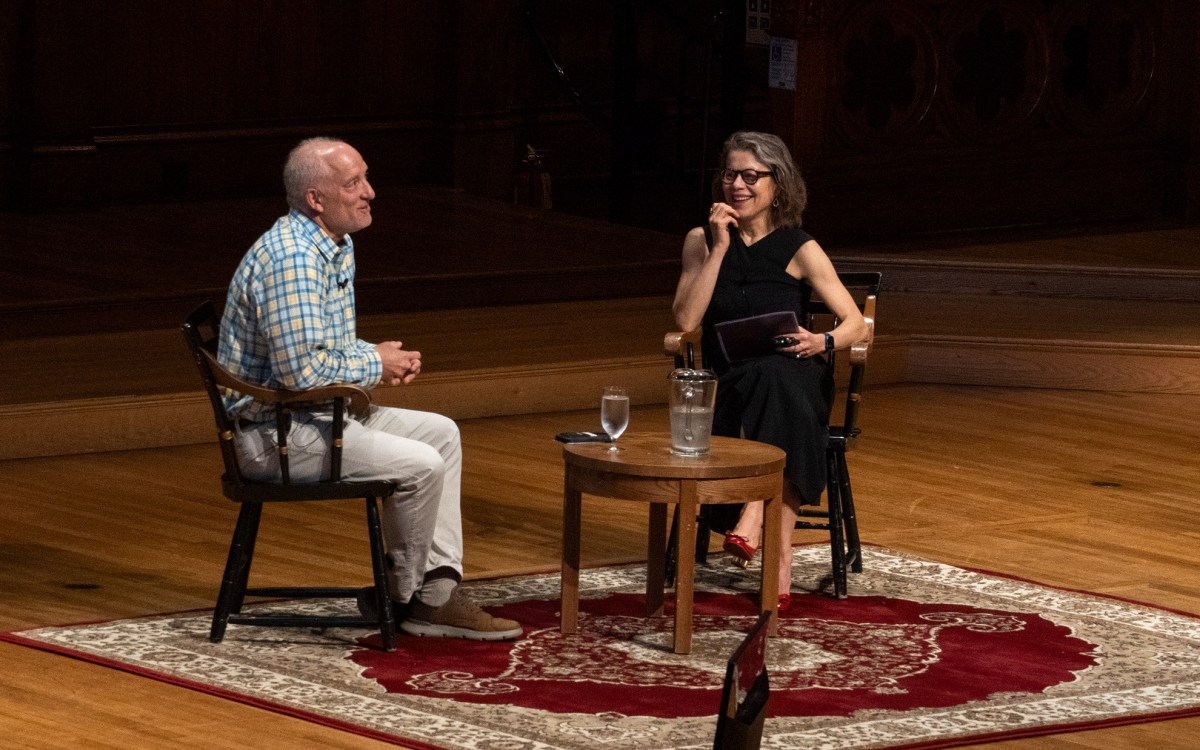

Professor Jelani Nelson founded AddisCoder, a program that teaches students in Ethiopia how to code.

Rose Lincoln/Harvard Staff Photographer

Coding for a cause

Professor Jelani Nelson shows young Ethiopians the opportunities of technology

When he was just 4 years old, Jelani Nelson got his first taste of what computers could do, and it came in the form of an animated plumber named Mario.

For his birthday that year, Nelson’s parents gave him a Nintendo Entertainment System, and he quickly immersed himself in the world of video games. By the time he was 10, Nelson had moved on to PC and online gaming, and it was there that he discovered — nearly by accident — the code that makes the online world work.

“I got into computers through gaming, and I had a computer at home with an internet connection,” he said. “I remember at some point I right-clicked on a webpage on my browser, and saw that it said ‘view source.’ That was how I learned HTML was a thing.”

That discovery, Nelson said, not only set him on the road to teaching himself to code — first in HTML and later in other, more complex languages — but in some ways also put him on the path that eventually led to Harvard.

These days, Nelson is the John L. Loeb Associate Professor of Engineering and Applied Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences, where his work is focused on developing new algorithms to make computer systems work more efficiently. At the end of this academic year, he will leave Harvard to join the faculty of the University of California, Berkeley.

“I’m in the theory of computation group here, and broadly what that means is modeling computation mathematically and proving theorems related to those models,” Nelson said. “So we may want to prove that a particular problem cannot be solved, or that any method that solves a problem has to use at least this much time or memory. Proving that there are methods that are memory- or time-efficient — that’s generally called algorithms.”

Streaming and Sketching

Generally speaking, Nelson’s work centers on two types of algorithms, streaming and sketching, both of which are designed to use very little memory.

A basic way to understand streaming algorithms, Nelson said, is to imagine someone reading you a list of numbers and then asking you for their sum.

“If I ask you at the end to tell me the sum of all the numbers, you can do that by keeping a running sum in your head, but you don’t need to remember every number,” he said. “But then if I ask you to tell me the fifth number, you won’t remember it, because you only kept this running tally. You only used just enough memory to answer a specific type of query.

“That’s a simple example, but there are other situations that are not so simple, where it turns out there are very memory-efficient algorithms that don’t need to remember every item, but can answer nontrivial queries about the past,” he added. “For example, if I’m Amazon and I want to know what were the popular items people bought yesterday between 7 and 8 p.m., I want to be able to answer that without my data structure actually remembering the record of every sale during that time.”
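Nelson does not name a specific method here, but one classic streaming technique for this kind of “popular items” query is the Misra-Gries frequent-items summary. The minimal Python sketch below assumes the stream is a plain sequence of item names; the function name `misra_gries` and the sample sales data are hypothetical and are not drawn from Nelson’s work or from any Amazon system. With k - 1 counters, it is guaranteed to retain every item that makes up more than 1/k of a stream of length n, and each stored count falls short of the true count by at most n/k.

```python
from typing import Dict, Hashable, Iterable


def misra_gries(stream: Iterable[Hashable], k: int) -> Dict[Hashable, int]:
    """Misra-Gries summary: track at most k - 1 candidate frequent items.

    Any item occurring more than n/k times in a stream of length n is
    guaranteed to survive, and each stored count is at most n/k below
    the item's true count. Memory is O(k), however long the stream is.
    """
    if k < 2:
        raise ValueError("k must be at least 2")
    counters: Dict[Hashable, int] = {}
    for item in stream:
        if item in counters:
            counters[item] += 1
        elif len(counters) < k - 1:
            counters[item] = 1
        else:
            # No free counter: decrement every counter (the new item is
            # discarded) and forget any candidate whose count reaches zero.
            for key in list(counters):
                counters[key] -= 1
                if counters[key] == 0:
                    del counters[key]
    return counters


# Hypothetical hour of sales: two genuinely popular items plus noise.
sales = ["book"] * 50 + ["lamp"] * 30 + ["pen", "mug", "cup", "hat"] * 5
print(misra_gries(sales, k=4))  # {'book': 40, 'lamp': 20}
```

Only three counters are ever stored, yet both best sellers are reported. The stored counts are underestimates, so a second pass over the data (or a larger k) would be needed to recover exact totals.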

Jelani Nelson first discovered coding as a child and has continued to hone his skills throughout his career.

Rose Lincoln/Harvard Staff Photographer

By comparison, Nelson said, sketching algorithms are designed to let users answer questions about compressed data even if they don’t have access to the original data.

“In the literature, a synonym for a sketch is a synopsis,” he said. “So sometimes you might have multiple sources of data … and you want to be able to make a sketch of one, and a sketch of the other, and then use those sketches to compare them without having access to the original data.”
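The article does not say which sketch Nelson has in mind. As one well-known illustration, MinHash compresses a set into a short list of numbers, and two such lists can be compared to estimate how much the original sets overlap (their Jaccard similarity) without consulting the originals. In the minimal Python sketch below, the helper names, the use of BLAKE2b with a seed prefix as a family of hash functions, and the sample records are all assumptions made for illustration.

```python
import hashlib
from typing import Iterable, List


def _hash(seed: int, item: str) -> int:
    """Deterministic 64-bit hash; each seed acts as a separate hash function."""
    digest = hashlib.blake2b(f"{seed}:{item}".encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def minhash_sketch(items: Iterable[str], num_hashes: int = 128) -> List[int]:
    """Compress a set into num_hashes integers: the minimum hash under each seed."""
    unique = set(items)
    if not unique:
        raise ValueError("cannot sketch an empty set")
    return [min(_hash(seed, x) for x in unique) for seed in range(num_hashes)]


def estimated_similarity(sketch_a: List[int], sketch_b: List[int]) -> float:
    """Estimate Jaccard similarity |A & B| / |A | B| from the two sketches alone."""
    if len(sketch_a) != len(sketch_b):
        raise ValueError("sketches must use the same number of hash functions")
    matches = sum(a == b for a, b in zip(sketch_a, sketch_b))
    return matches / len(sketch_a)


# Two hypothetical data sources that share 50 of their 150 distinct records.
source_a = [f"record-{i}" for i in range(0, 100)]
source_b = [f"record-{i}" for i in range(50, 150)]
sketch_a = minhash_sketch(source_a, num_hashes=256)
sketch_b = minhash_sketch(source_b, num_hashes=256)
print(estimated_similarity(sketch_a, sketch_b))  # an estimate of the true value, 50/150
```

The idea is that, under a random hash function, the smallest hash in set A equals the smallest hash in set B with probability equal to their Jaccard similarity, so the fraction of matching positions estimates that similarity. Each source only needs to keep its 256 numbers, not its records.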

For a real-world application of such algorithms, Nelson said, consider your spam filter.

“So the idea is that an email is a document, and you can apply a sketching algorithm to it to create a lower-dimensional representation,” he said. “You can then run a machine-learning algorithm on that data. And the idea is that now it is more efficient because it’s working on lower-dimensional data.”
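Nelson does not specify the sketch a spam filter would use. One common way to turn a document into a fixed-length, low-dimensional vector is feature hashing (the “hashing trick”), a relative of the random projections studied in sketching research. The minimal Python sketch below assumes whitespace tokenization and a 64-bit BLAKE2b hash; the function names and the chosen dimension are hypothetical, and the machine-learning step Nelson mentions is not shown.

```python
import hashlib
from typing import List, Tuple


def _bucket_and_sign(token: str, dim: int) -> Tuple[int, float]:
    """Map a token to a vector coordinate and a +1/-1 sign using one 64-bit hash."""
    digest = hashlib.blake2b(token.encode("utf-8"), digest_size=8).digest()
    value = int.from_bytes(digest, "big")
    bucket = value % dim
    sign = 1.0 if value >> 63 else -1.0  # the top bit chooses the sign
    return bucket, sign


def hash_features(email_text: str, dim: int = 1024) -> List[float]:
    """Sketch an email's bag of words into a vector with exactly dim coordinates.

    The vocabulary may contain millions of distinct words, but the output
    size is fixed; words that collide simply share a coordinate.
    """
    if dim <= 0:
        raise ValueError("dim must be positive")
    vec = [0.0] * dim
    for token in email_text.lower().split():
        bucket, sign = _bucket_and_sign(token, dim)
        vec[bucket] += sign
    return vec


features = hash_features("Claim your FREE prize now free free", dim=256)
print(len(features))  # 256
```

A classifier would then be trained on these 256-coordinate vectors instead of on one coordinate per vocabulary word. The random signs are a deliberate design choice: they make collisions cancel out on average, so inner products between hashed vectors are unbiased estimates of the originals.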

From the Caribbean to Cambridge

Soon after discovering HTML, Nelson set out to teach himself to code, but he quickly ran into an obstacle: The only bookstore in his hometown on St. Thomas in the U.S. Virgin Islands was woefully lacking in coding manuals.

“When I was in seventh or eighth grade, we visited the mainland, and I went to a bookstore and bought an HTML book,” he said. “For practice, I wanted to make a website for my little sister. We both liked the [Nickelodeon show] ‘Rugrats,’ and I wanted to put a picture of Tommy on her webpage … but I was afraid of violating copyright, so I called Nickelodeon and asked them permission to include images from ‘Rugrats’ on the site, and they refused. But they took my info, and shortly afterward, we got a box in the mail with a bunch of swag, like VHS tapes and T-shirts and toys.”

At the same time, Nelson said, he had also become deeply interested in math, and began taking part in competitions for middle schoolers run by the Alexandria, Va.–based nonprofit Mathcounts. As a seventh-grader, he was one of a handful of students who represented the U.S. Virgin Islands at a national competition held in Washington, D.C.

As a senior in high school, Nelson began to delve deeper into programming. He bought several books on the programming languages C and C++ and applied to MIT after seeing it ranked as the best in the nation for both computer science and mathematics.

“When I got to MIT, I think I was surprised by all the things I didn’t know,” Nelson said. “In my high school, we didn’t have AP classes. … The highest level of math you could take was single-variable calculus, so I just didn’t know what existed in math beyond calculus. I had never really seen creative mathematical problem-solving before, so it was a big wake-up call.”

It was at MIT, first as an undergraduate and later as a graduate student, that Nelson began to show off one of his more unusual skills: his blazing-fast typing. In 2010, he took the top spot on TypeRacer, a website that hosts online typing tests, with a staggering 170 words per minute.

“I used it as a procrastination mechanism,” he said. “I would just go and do these typing speed contests, and at some point I got to be at the top of the leaderboard. More recently people have passed me, but I think I’m still in the top five.”

Coding for a cause

Nelson’s educational efforts aren’t limited to the Harvard campus. Shortly after completing his doctorate at MIT in 2011, he founded AddisCoder, an educational program in Ethiopia, where his mother is from, dedicated to teaching coding and algorithms to high school students in Addis Ababa.

“I had graduated in early June, but my postdoc in Berkeley didn’t start until August, so I decided to visit some relatives in Ethiopia,” he said. “And I thought, ‘If I’m going to spend a lengthy amount of time here, what am I going to do besides hang out?’ And I thought, ‘Why don’t I teach a course?’ I ended up with 82 students, and I was the sole lecturer, with one volunteer teaching assistant.”

Working with the Meles Zenawi Foundation, created in honor of the former Ethiopian prime minister, the program taught 175 students from public schools across the country in the summer of 2018. Nearly half of them were female.

“The classes run for four weeks, and each day there is about 90 minutes of lecture, and the rest of the time they’re in the computer lab, solving problems, so it’s very hands-on,” Nelson said. “When I partnered with the Meles Zenawi Foundation in 2016, we had a meeting with the Ministry of Education, and we said, ‘We see no reason why this shouldn’t be balanced; we’re certain you can find very capable women to recruit into the course.’ And they listened. The last two offerings have both included over 40 percent girls. Also, almost all students in the course had never coded before and really didn’t know what computer science was, so the program really helped to evangelize computer science to kids who otherwise might have never been exposed.”

In addition to the classroom time, the program this past summer held a career day, which drew more than a dozen people — including two from Kenya and several from Ethiopia — who work in fields that require knowledge of computer science.

“We wanted them to see that not only are there Facebooks and Googles, but that there are job opportunities locally for them as well,” Nelson said.