Small media, big payback

Study shows that such outlets can have broad impact on the national conversation

With a readership in the millions, The New York Times routinely influences public debate on a host of issues through its news coverage.

Can a small news outlet with a circulation of perhaps 50,000 do the same thing?

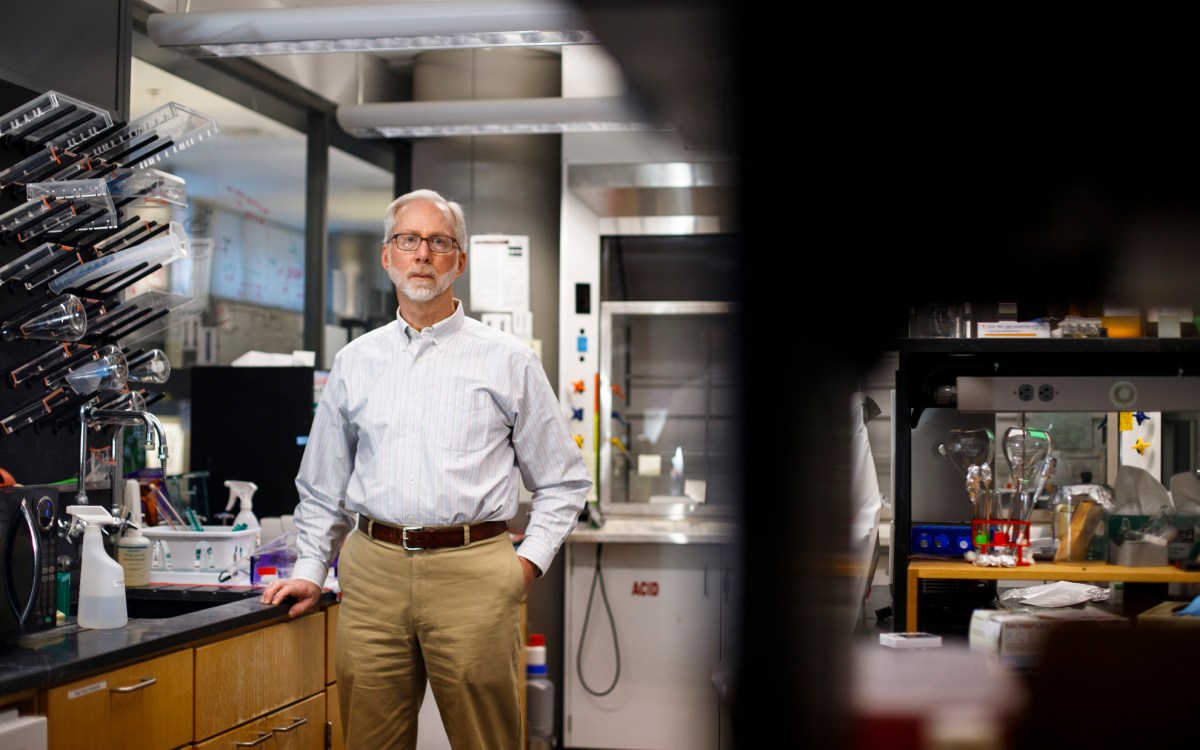

The answer, says Albert J. Weatherhead III University Professor Gary King, is that in an age that relies on internet publication and social-media dispersal, even small- to medium-size media outlets can have a dramatic impact on the content and partisan balance of the national conversation about major public-policy issues.

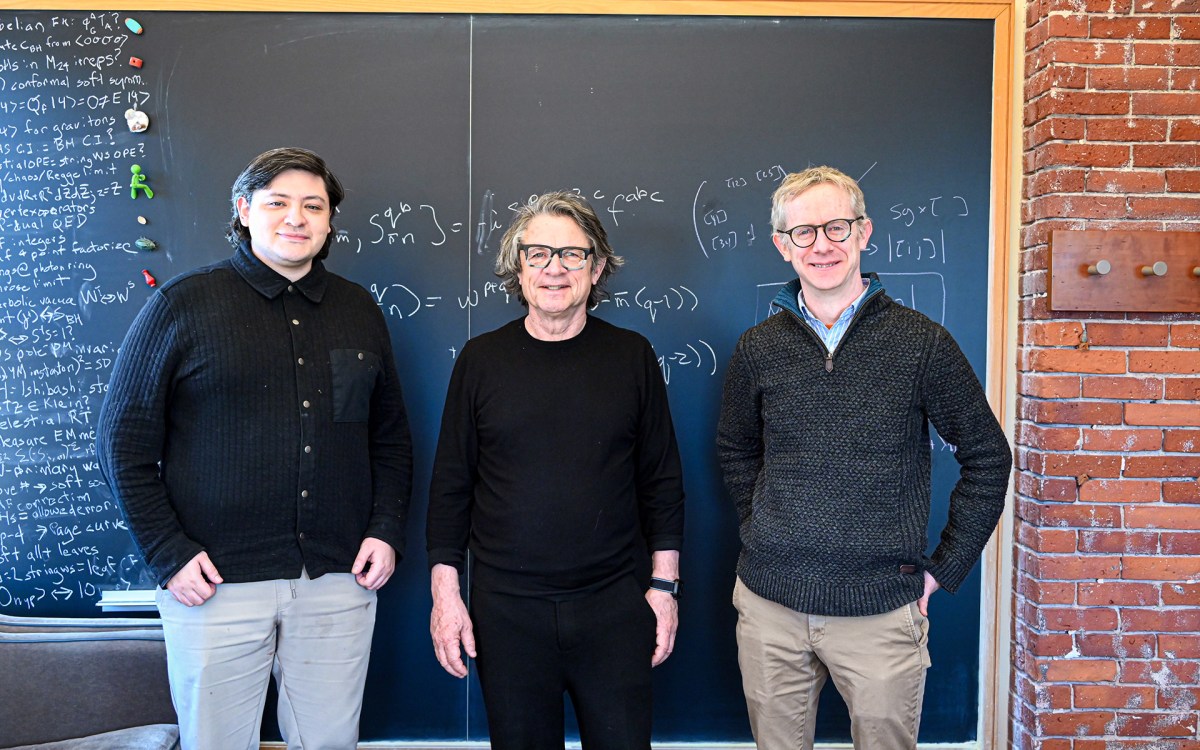

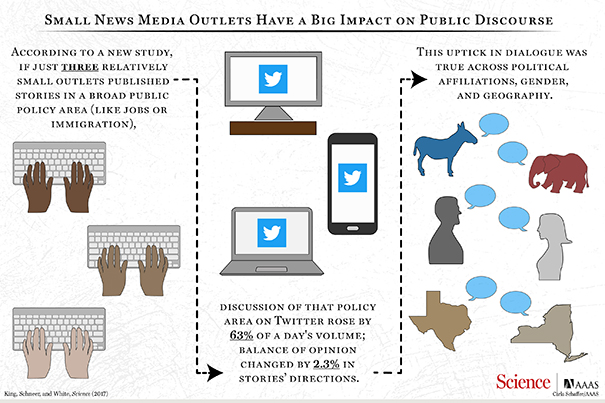

In the first large-scale, randomized media experiment of its kind, King and former students Benjamin Schneer, now an assistant professor at Florida State University, and Ariel White, now an assistant professor at the Massachusetts Institute of Technology, found that if just three outlets wrote about a major national policy topic, such as jobs, the environment, or immigration, discussion of that topic across social media rose by more than 62 percent. The balance of opinion in the national conversation could also be swayed by several percentage points.

“For several hundred years, scholars have tried to measure the influence of the media. Most people think it is influential, but measuring this influence rigorously with randomized experiments has until now been impossible,” King said. “Our findings suggest that the effect of the media is surprisingly large. Our study’s implications suggest every journalist wields a major power, and so has an important responsibility.”

Those findings, King said, are the result of more than five years of work, much of it spent convincing 48 news outlets to agree to take part in the study. About half of these outlets were represented by the Media Consortium, a network of independent news outlets that was eager to find a way to measure impact and was willing to help.

“Much of the work leading up to this study involved finding a way to bridge the cultural divide between journalism and science,” King said. “Through years of conversations, much trial and error, and a partnership with Media Consortium Executive Director Jo Ellen Green Kaiser, we learned to understand journalistic standards and practices, and the journalists learned to understand our scientific requirements. What ultimately made it all work was a novel research design we developed that satisfied both camps.”

Similar efforts have been tried in the past, but they invariably collapsed as journalists chafed at being told what to report and when to report it. To get around the problem, previous researchers fell back on clever tricks, such as studying areas that fell outside a particular outlet's broadcast area. But because no one knew whether those areas were truly random, such studies were hard to evaluate; in particular, they could not control for a host of factors such as race, education, or income.

“From a scientific point of view, we have to be able to tell the journalists what to publish, and preferably at random times,” King said. “From a journalistic point of view, these scientific requirements seem crazy, and journalists reasonably insist on retaining absolute control over what they publish. The two sets of requirements seem fundamentally incompatible, but we found a way to create a single research design that accomplished the goals of both groups.”

It wasn’t only the participation of news outlets that made the study noteworthy, though.

“If you’re doing something like a medical experiment, you may randomly assign individual people to one of two groups, and then each person is your unit of analysis,” he said. “But when a media outlet publishes something — no matter how small — the potential audience it could impact includes basically everybody in the country. That means our unit of analysis can’t be a person. It has to be the entire country, which greatly increases the cost of the study.” As a result, what would count as an entire experiment in many other studies is only one observation in this one.

Because each observation was so expensive and logistically challenging to collect, King and colleagues used, and further developed, novel statistical techniques that let them collect only as much data as they needed. After each massive national experiment, they could check whether they had amassed enough data to draw reliable conclusions.

“That allowed us to keep going until we got to the point where we had exactly as much data as we needed, and no more,” King said. “As it turns out, we ran 35 national experiments that produced 70 observations.”

To achieve the randomization needed for the study, King’s team, the Media Consortium staff, and journalists at the 48 outlets identified 11 broad policy areas. To mimic the tendency of journalists to influence one another and publish stories on similar topics, sometimes called “pack journalism,” they then chose three or four of the 48 participating outlets to develop stories together in the same broad policy area.

“For example, if the policy area was jobs, one story might be about Uber drivers in the Philadelphia area,” King said. “We would then identify a two-week period where we predicted there wouldn’t be any surprises related to that topic area. So if the president was planning to give a speech about immigration in one of those two weeks, we would not run an experiment on immigration during that time.”

Randomization came from the researchers flipping a coin to decide which of the two weeks would be the publication (treatment) week and which would be the control week.
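To make the design concrete, here is a minimal Python sketch of the coin flip for a single two-week window. The function name `assign_weeks`, the seed, and the week labels are hypothetical, chosen for illustration; the article does not describe the researchers' actual tooling. Each window yields two observations, one treated week and one control week, which is how 35 national experiments produced 70 observations.

```python
import random


def assign_weeks(week_1: str, week_2: str, rng: random.Random) -> dict:
    """Flip a fair coin to decide which week of a two-week window is treated.

    Heads: week_1 is the publication (treatment) week and week_2 is the control.
    Tails: the reverse. Every window therefore yields one observation of each kind.
    """
    heads = rng.random() < 0.5
    treatment, control = (week_1, week_2) if heads else (week_2, week_1)
    return {"treatment": treatment, "control": control}


if __name__ == "__main__":
    rng = random.Random(2024)  # hypothetical fixed seed, so the draw can be re-checked
    # Hypothetical windows: (policy area, first week, second week)
    windows = [
        ("jobs", "week 1", "week 2"),
        ("immigration", "week 3", "week 4"),
    ]
    for area, w1, w2 in windows:
        print(area, assign_weeks(w1, w2, rng))
```

Fixing the seed of the random number generator is one way to make an assignment reproducible and auditable after the fact; whether the researchers did anything like this is not stated.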

“At first, our outlets didn’t really understand what randomization meant,” said Kaiser. “Our project manager, Manolia Charlotin, and the researchers worked very closely with all the outlets to ensure they followed the researchers’ rules. This was a resource-intensive project for us, but the unexpected benefit was that outlets found they also gained many qualitative benefits from collaborating.”

In both treatment and control weeks, King, Schneer, and White used tools and data from the global analytics company Crimson Hexagon to monitor the national conversation in social media posts. (King is a co-founder of Crimson Hexagon; with a previous generation of graduate and postdoctoral researchers, he developed the automated text analysis technology that Harvard licensed to the company.) This methodology “is used to evaluate meaning in social media posts,” King explained. “So if you have a set of categories you care about, we identify example posts in these categories, which is what humans are good at. Then our algorithm can amplify that human intelligence and, without classifying individual posts, can accurately estimate the percent of posts in each category each day.”
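The key idea in King's description, estimating the share of posts in each category without classifying any individual post, can be illustrated with a deliberately simplified two-category sketch. It is not Crimson Hexagon's algorithm. It assumes each post is reduced to the set of words it contains, and that word frequencies in the unlabeled population are a mixture of the frequencies seen in hand-labeled examples from each category; the share is then solved for by least squares. All names and the toy data are hypothetical.

```python
from typing import List, Set

Post = Set[str]  # simplification: a post is just the set of words it contains


def word_rates(posts: List[Post], vocab: List[str]) -> List[float]:
    """Fraction of posts that contain each vocabulary word."""
    n = len(posts)
    return [sum(1 for post in posts if word in post) / n for word in vocab]


def estimate_share(
    examples_a: List[Post],
    examples_b: List[Post],
    population: List[Post],
    vocab: List[str],
) -> float:
    """Estimate the share of `population` in category A without labeling any post.

    Assumes population word rates are a mixture of the category rates:
        y_j = p * a_j + (1 - p) * b_j   for every word j,
    and solves for p by least squares across words.
    """
    a = word_rates(examples_a, vocab)  # word rates in hand-labeled category A
    b = word_rates(examples_b, vocab)  # word rates in hand-labeled category B
    y = word_rates(population, vocab)  # word rates in the unlabeled population
    num = sum((yj - bj) * (aj - bj) for aj, bj, yj in zip(a, b, y))
    den = sum((aj - bj) ** 2 for aj, bj in zip(a, b))
    if den == 0:
        raise ValueError("vocabulary does not distinguish the two categories")
    return min(1.0, max(0.0, num / den))  # clip to a valid proportion


if __name__ == "__main__":
    vocab = ["jobs", "wages", "climate", "emissions"]
    jobs_examples = [{"jobs", "wages"}, {"jobs"}, {"wages", "jobs"}, {"wages"}]
    env_examples = [{"climate", "emissions"}, {"climate"}, {"emissions"}, {"climate", "emissions"}]
    # Toy population: three jobs-like posts for every environment-like post
    population = jobs_examples * 3 + env_examples
    print(estimate_share(jobs_examples, env_examples, population, vocab))  # → 0.75
```

Because the estimate works on aggregate word frequencies, errors in classifying individual posts never enter the calculation; the trade-off is the assumption that the hand-labeled examples resemble the population posts within each category.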

What they found, King said, was that the effect was larger than anticipated.

“The actual effect is really big,” King said. “If three outlets (with an average circulation of about 50,000) get together and write stories, the size of the national conversation in that policy area increases a lot. It’s a 62 percent increase on the first day’s volume distributed over the week, just from these three little outlets.

“These national conversations about major policy areas are essential to democracy,” he added. “Today this conversation takes place, in part, in some of the 750,000,000 publicly available social media posts written by people every day — and all available for research. At one time, the national conversation was whatever was said in the public square, where people would get up on a soapbox, or when they expressed themselves in newspaper editorials or water-cooler debates. This is a lot of what democracy is about. The fact that the media has such a large influence on the content of this national conversation is crucial for everything from the ideological balance of the nation’s media outlets, to the rise of fake news, to the ongoing responsibility of professional journalists.”
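King's 62 percent figure compares topic volume with and without the coordinated stories; the study's precise estimator is not spelled out here. Purely as an illustration of the arithmetic behind a percent increase, the sketch below compares total posts in a hypothetical treatment week against a hypothetical control week. The function name and the daily counts are invented and are not data from the study.

```python
def percent_increase(treatment_counts, control_counts):
    """Percent change in total topic volume, treatment week vs. control week."""
    treated, control = sum(treatment_counts), sum(control_counts)
    if control == 0:
        raise ValueError("control-week volume is zero")
    return 100.0 * (treated - control) / control


if __name__ == "__main__":
    # Invented daily post counts for one policy area (not data from the study)
    control_week = [200, 210, 190, 205, 195, 200, 200]    # 1,400 posts
    treatment_week = [500, 350, 300, 250, 250, 225, 225]  # 2,100 posts
    print(percent_increase(treatment_week, control_week))  # → 50.0
```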

This research was supported with funding from Voqal and Harvard’s Institute for Quantitative Social Science.