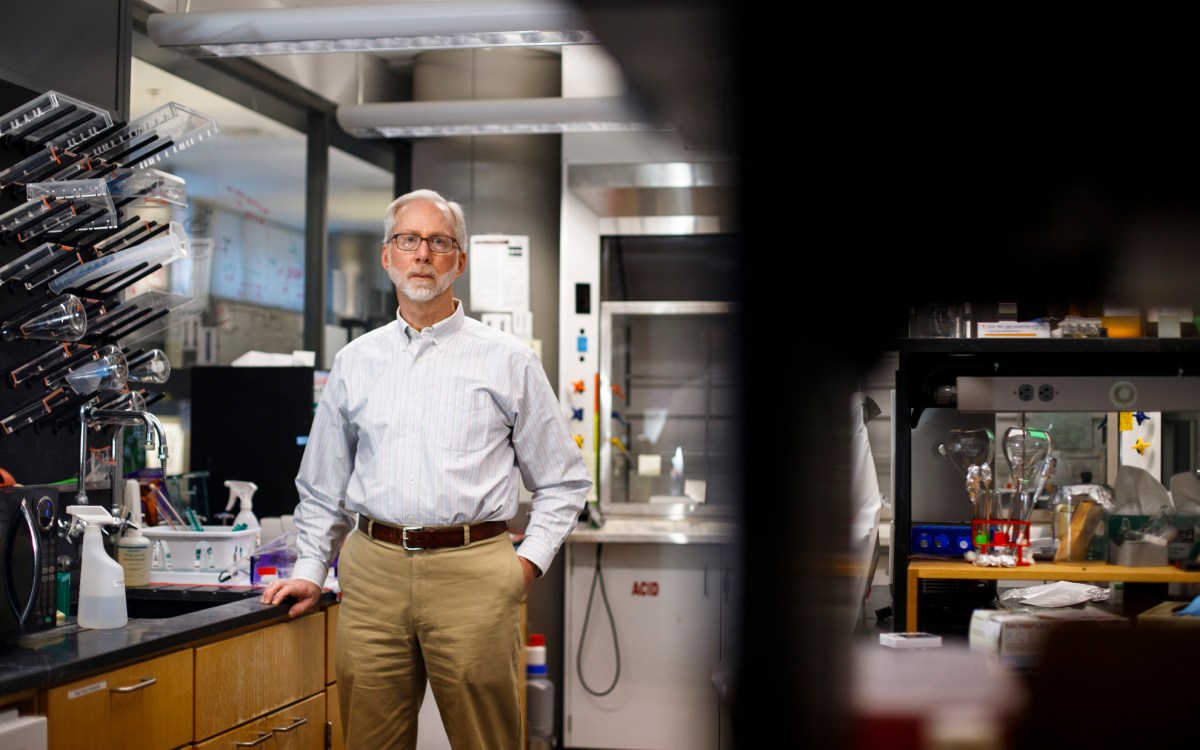

E. Glen Weyl.

Stephanie Mitchell/Harvard Staff Photographer

Rethinking — and reframing — superintelligence

Microsoft researcher says separating AI from people makes systems dangerous and unproductive

The debate over artificial superintelligence (ASI) can tend toward extremes, with predictions that it will either save humanity or destroy it. E. Glen Weyl has a different perspective: Superintelligence is already here, and it has been for thousands of years.

Speaking Nov. 19 at the Berkman Klein Center, Weyl, an economist with Microsoft Research and co-author of the book “Radical Markets,” urged listeners to think of superintelligence the way James Lovelock’s “Gaia Hypothesis” thinks of Earth: as a collective, self-regulating system that encompasses all living things and the natural environment.

This vision, he said, contrasts with common depictions of ASI as a machine ingesting information and autonomously processing and acting on it — with the ultimate goal of matching or surpassing human capabilities. That framing is more than philosophical, he argued. It changes how engineers build AI systems — and not in a good way.

“When we separate digital systems from people, we make them dangerous because they don’t have the feedback to maintain homeostasis,” Weyl said, “and also they are not useful because they’re not integrated into production processes and human participation.”

In fact, Weyl said, “Superintelligence is already all around us: corporations, democracies, religions, cultures — all of these things manifest capabilities that humans do not have on their own. We think of artificial superintelligence as something unique. Instead, I’m suggesting that it is another way that allows us to map human social relationships and thereby extend them.”

Religious, governmental, and corporate systems, Weyl said, display superintelligence because they draw on a collective self-awareness to augment human capabilities in ways that allow large communities to achieve shared goals. They also strengthen cohesion beyond what humans could achieve individually or in small groups.

Weyl pointed to Japan’s Kaizen system, implemented after World War II, as one example. When factory workers received carefully targeted information about the broader production process and their roles within it, they were better able to innovate and improve operations at each individual step. “You can’t educate every line worker about everything that goes into making your car,” Weyl explained, “so you have to take a bet on what is the most important and relevant information.”

Core to Weyl’s conception of superintelligence is the idea of common knowledge: information that, in Weyl’s words, “not only do I know and you know, but that I know that you know, and I know that you know that I know — and so on.” He offered “The Emperor’s New Clothes” as an example. Every individual knows that the emperor is naked, but only when a child laughs publicly does everyone realize that everyone else is seeing the same thing.

Social media and modern conceptions of superintelligence undermine this shared knowledge, Weyl argued. In China, he said, government censorship of online spaces makes it difficult for communities to coalesce around common knowledge. In Western cultures, social media has exacerbated polarization by privileging divisive voices.

Some countries, though, have taken a humanistic approach toward decreasing polarization and increasing understanding. He cited tools used by the Taiwanese government to help ease divisions, including Polis, which shows participants how their views fit into different opinion clusters and highlights points of agreement between seemingly disparate groups. “This is kind of a vaccine, or cure, to a polarization attack,” said Weyl.

In a post-talk discussion with Moira Weigel, an assistant professor of comparative literature and a Berkman Klein faculty associate, Weyl elaborated on how he defines intelligence for technological systems. It’s more important for systems to monitor their own functioning and respond to incoming information with an internal logic than to simply complete a task in a human-like way, he said.

Asked whether the East or the West would “win” the AI race, Weyl dismissed that framing as mostly irrelevant. “If you’re racing to smash into a wall, the person who wins is the person who swerves at the end rather than the person who hits the wall,” he said.

In the end, Weyl said, it’s less about the strength of the system and more about how it adapts.