Photo illustration by Liz Zonarich/Harvard Staff

Is AI dulling our minds?

Experts weigh in on whether tech poses threat to critical thinking, pointing to cautionary tales in use of other cognitive labor tools

A recent MIT Media Lab study reported that “excessive reliance on AI-driven solutions” may contribute to “cognitive atrophy” and shrinking of critical thinking abilities. The study is small and has not been peer-reviewed, and yet it delivers a warning that even artificial intelligence assistants are willing to acknowledge. When we asked ChatGPT whether AI can make us dumber or smarter, it answered, “It depends on how we engage with it: as a crutch or a tool for growth.”

The Gazette spoke with faculty across a range of disciplines, including a research scientist in education, a philosopher, and the director of the Derek Bok Center for Teaching and Learning, to discuss critical thinking in the age of AI. We asked them about the ways in which AI can foster or hinder critical thinking, and whether overreliance on the technology can dull our minds. The interviews have been edited for length and clarity.

Tina Grotzer.

Veasey Conway/Harvard Staff Photographer

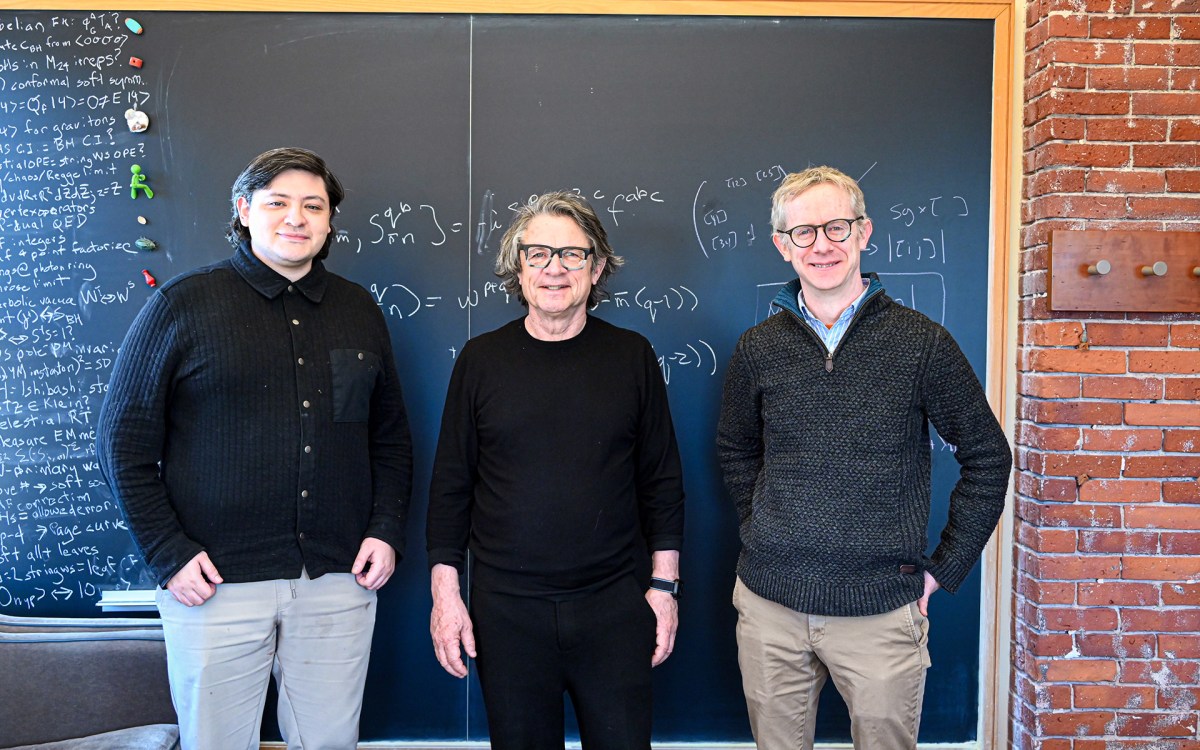

We’re better than Bayesian

Tina Grotzer

Principal Research Scientist in Education, Graduate School of Education

Many students use AI without a good understanding of how it works in a computational/Bayesian sense, and this leads to putting too much confidence in its output. So, teaching them to be critical and discerning about how they use it and what it offers is important. But even more important is helping them understand how their embodied human minds work and how powerful they can be when used well.

The work in neuroscience makes a compelling case that, while human minds are computational and use Bayesian processes, they are “better than Bayesian” in many ways. For instance, the work of Antonio Damasio and others highlights how our somatic markers enable us to make quick, intuitive leaps. Research from my lab found that kindergarteners used strategic information while playing a game, which enabled them to make informed moves more quickly than a purely Bayesian approach would allow. Further, our human minds can detect critical distinctions, or exceptions to covariation patterns, that drive conceptual change and model revision, whereas a purely Bayesian approach would simply sum across them. This is just the tip of the iceberg in terms of how human minds are more powerful than AI. There are many other examples (for instance, that while AI can offer analogies, to my knowledge, it can’t reason analogically).

In my “Becoming an Expert Learner” course, I aim to help students consider the wealth of research on how human minds work so that they can make the best use of their particular mind (with its normative and non-normative characteristics). Then I ask them to compare their minds to AI and to think carefully about when and how they decide to use each. I hope it leads them to a fuller appreciation for their incredible minds and abilities!

Dan Levy.

Photo courtesy of Dan Levy

The assignment is not the ultimate goal

Dan Levy

Senior Lecturer in Public Policy, Harvard Kennedy School; co-author, “Teaching Effectively with ChatGPT”

In the book I wrote with Ángela Pérez Albertos, we highlight that there’s no such thing as “AI is good for learning” or “AI is bad for learning.” I think AI can be used in ways that are good for learning and it can be used in ways that hinder learning.

If a student uses AI to do the work for them, rather than to do the work with them, there’s not going to be much learning. No learning occurs unless the brain is actively engaged in making meaning and sense of what you’re trying to learn, and this is not going to occur if you just ask ChatGPT, “Give me the answer to the question that the instructor is asking.”

At the end of the day, if you think you’re in school to produce outputs, then you might be OK with AI helping you produce those outputs. But if you’re in school because you want to be learning, remember that the output is just a vehicle through which that learning is going to happen. The output is typically not the ultimate goal. When you confuse those two things, you might use AI in ways that are not conducive to learning. AI can also hinder learning when students are overcommitted, overworked, and see AI exclusively as a time-saving device. But if AI can save you time on the grunt work so that you can devote that time to more serious learning, I think that is a plus.

There are reasons to be optimistic and there are reasons to be worried about AI, but AI is here to stay, so it’s not like we can say, “OK, forget about AI.” We’d better figure out ways of collaborating with it and leveraging it in ways that advance our goals as educators, learners, and humans.

Christopher Dede.

Niles Singer/Harvard Staff Photographer

An owl on your shoulder

Christopher Dede

Senior Research Fellow, Graduate School of Education

Athena, the Greek goddess of wisdom, is always portrayed with an owl on her shoulder. We should now ask, “Can AI be like the owl that helps us be wiser?”

I think the key to having the owl be a positive force instead of a negative one is not to let it do your thinking for you. We know that generative AI doesn’t understand the human context, so it’s not going to provide wisdom about social, emotional, and contextual events, because those are not part of its repertoire. However, GenAI is very good at absorbing large amounts of data and making calculative predictions in ways that can augment your thinking.

The contrast for me is between doing things better and doing better things. Ninety-five percent of what I read about AI in education is that it can help us do things better, but we should also be doing better things. One of the traps of GenAI, even when you’re using it well, is that if you’re using it just to do the same old stuff better and quicker, you have a faster way of doing the wrong thing.

If AI is doing your thinking for you, whether through auto-complete or in some more sophisticated way, as in “I’ll let AI write the first draft, and then I’ll just edit it,” that is undercutting your critical thinking and your creativity. You may end up using AI to write a job application letter that is the same as everybody else’s because they’re also using AI, and you may lose the job as a result. You always have to remember that the owl sits on your shoulder and not the other way around.

Fawwaz Habbal.

Stephanie Mitchell/Harvard Staff Photographer

Only humans can solve human problems

Fawwaz Habbal

Senior Lecturer on Applied Physics, John A. Paulson School of Engineering and Applied Sciences

The course I teach, “AI & Human Cognition,” aims to demystify AI, differentiate between human and machine intelligence, and explore the foundations of AI and how to use it effectively.

While AI excels in data processing and statistics, it lacks the ability to create truly innovative and creative solutions; machines calculate, and they do not have human experiences. We have to remember that although AI machines work on sophisticated statistics and advanced mathematics, and use very fast electronic chips that operate at mind-boggling computational speeds, they rely on data that has been created by humans, and that data is more or less the same across the different AI platforms. When you ask different AI platforms the same question, most of the time their answers are very similar because the database is the same. AI can tell you how to put things together, but AI would not be able to help you build a device that relates to a human context. Machine learning depends on statistical adjustments, whereas humans self-organize life in relation to meaning.

AI can engage in processes that resemble critical thinking — data analysis, problem-solving, and modeling — but it has limitations. Critical thinking requires the human experience, the human insight, and ethics and moral reasoning. Machines today lack all of that, and their processes are only recursive.

I worry about students relying too much on AI. We have to remind students that we’re trying to help them become the future leaders of society, and part of developing leadership is adding new value to society, which is a human enterprise. I haven’t seen AI do a really good systems analysis or deep critical thinking. Today, at least, I find it very difficult to imagine that AI can have reflective thinking. We have to be careful not to think AI is going to solve our problems. Human challenges are complex and can be solved only by humans.

Karen Thornber.

Stephanie Mitchell/Harvard Staff Photographer

Taking shortcuts without knowing the map

Karen Thornber

Harry Tuchman Levin Professor in Literature and Professor of East Asian Languages and Civilizations; Richard L. Menschel Faculty Director at the Derek Bok Center for Teaching and Learning

AI is forcing us to think differently about the various components of critical thinking. For instance, AI can be a helpful partner in analyzing and inferring, as well as with certain types of problem-solving, but it’s not always that successful at evaluating, and reflecting can’t (yet) be outsourced to AI.

It’s certainly possible to use AI in ways that diminish some of our skills, both lower-order capacities such as memory and factual knowledge and higher-order skills such as critical thinking. Just as turn-by-turn navigation systems have led many of us to know the streets of the city where we currently live in far less detail than the streets of cities we learned before smartphones and car-based GPS systems were widely available, it’s likely that the ease of using LLMs will enable us to avoid engaging in certain challenging mental skills, and it will be difficult to persuade students to develop these skills in the first place.

The key is to use AI to assist us in learning and in thinking critically and, in the words of the American Historical Association’s recent “Guiding Principles for Artificial Intelligence in History Education,” “to support the development of intentional and conscientious AI literacy.” Some critical thinking skills will become more valuable because they cannot (yet) be outsourced to AI. The proliferation of “cheap intelligence” (more code, text, and images than ever before) means that the skills of discernment, evaluation, judgment, thoughtful planning, and reflection are even more crucial now than before.

Jeff Behrends.

Veasey Conway/Harvard Staff Photographer

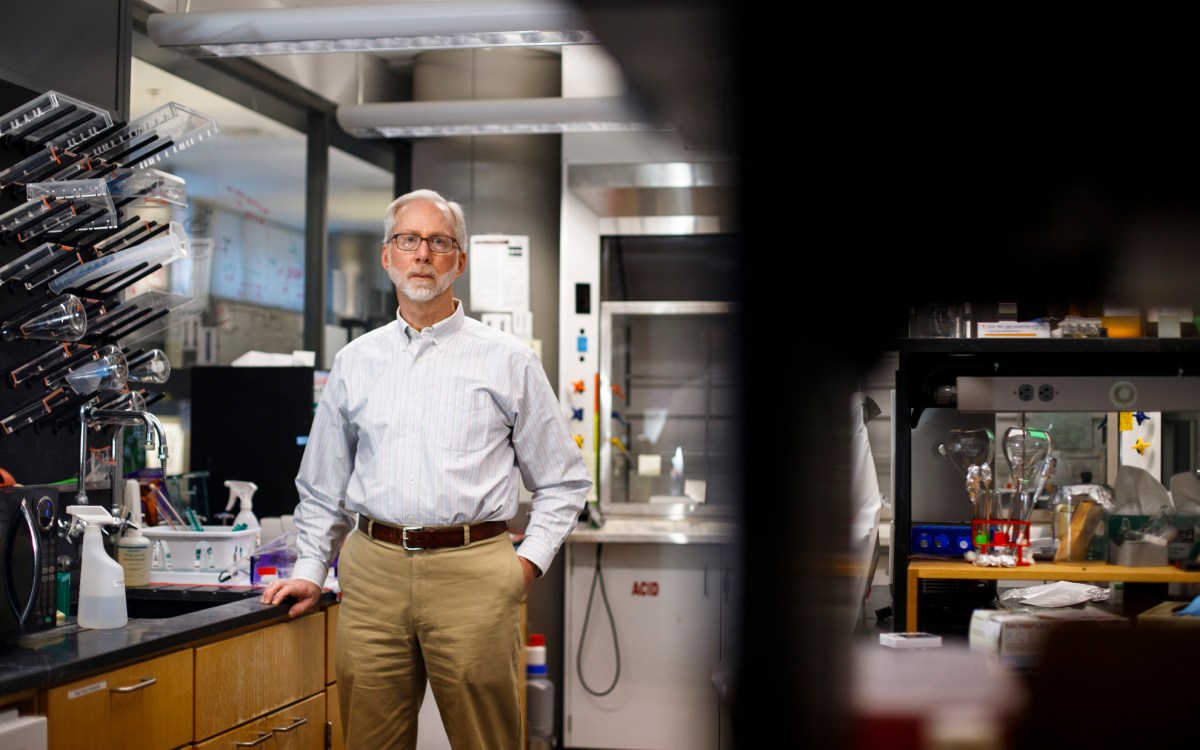

Cautionary tales from use of other cognitive labor tools

Jeff Behrends

Senior Research Scholar and Associate Senior Lecturer, Department of Philosophy

I am very worried about the effects of general-use LLMs on critical reasoning skills. We already know that the tools we’re using during cognitive labor can change the ways that we do that work. We know, for example, that taking notes longhand leads to greater recall than taking notes by keystroke, and that predictive text features built into word processors and email interfaces change our word choices. Given these kinds of trends, I’d be stunned if frequent, multi-context use of LLMs didn’t lead to real changes in the way that users approach reasoning tasks.

The recent study from the MIT Media Lab provides at least some initial evidence for that. I am less worried about AI as an aid to guide expert-level reasoning in targeted domains, as when, for example, a doctor might use AI in diagnosis to ensure that she hasn’t overlooked an unusual disease. But the problem is that there is too much hype surrounding LLMs as general reasoners upon which we can (at least partially) offload our thinking about any topic whatsoever. It’s in the interest of people producing the technology to make us think that its possibilities are limitless and that it will usher in a wonderful new future for everyone. We should be cautious before we too enthusiastically pin our hopes on the latest technological trend.