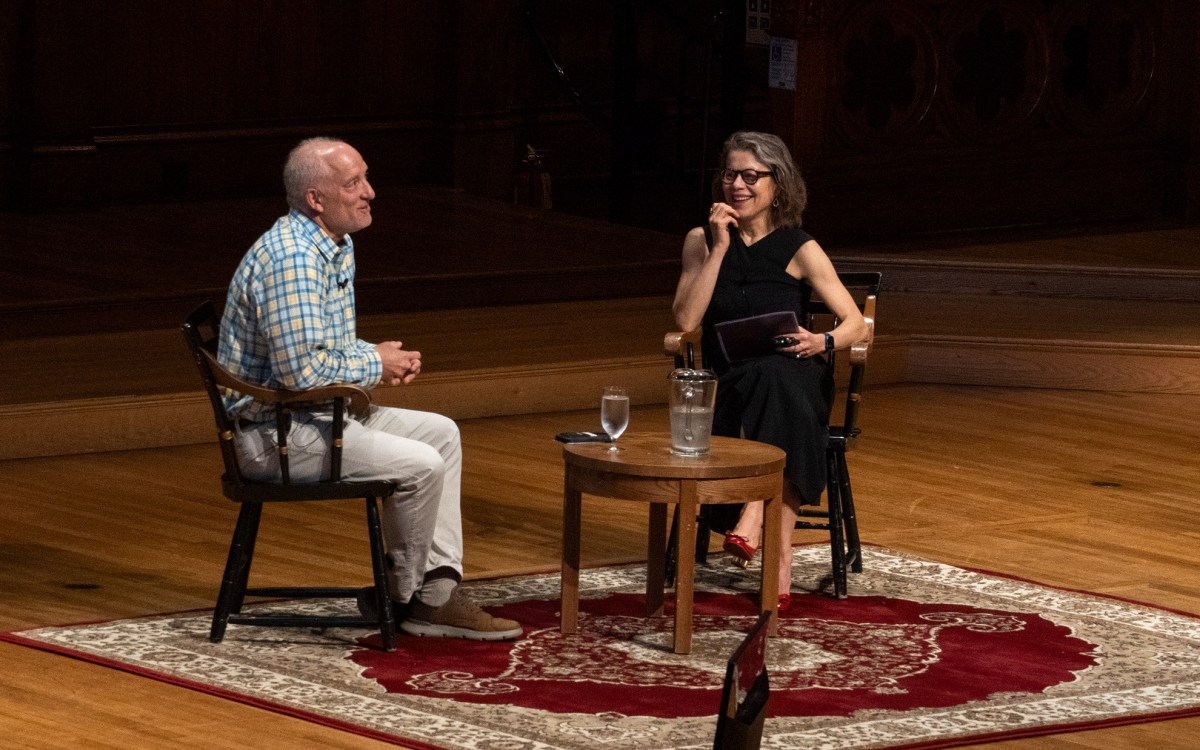

Chris Stubbs (far left) and Logan McCarty co-teach the new undergraduate GenEd class in Maxwell Dworkin. The class looks at the technical, ethical, and philosophical issues facing the world as it embraces generative AI and large language models like ChatGPT. Harvard College student Jonathan Schneiderman (green shirt) asks a question during class. Kris Snibbe/Harvard Staff Photographer

Photos by Kris Snibbe/Harvard Staff Photographer

Around the time he was preparing to co-teach a new course on artificial intelligence, Harvard science Assistant Dean Logan McCarty read the 19th-century horror classic “Frankenstein” without an inkling of its potential relevance to the task.

“In 1818, Mary Shelley created a concept of an artificial intelligence, and she thought a lot about the downsides of creating this kind of superhuman intelligence,” said McCarty, an instructor in physics and chemistry. “That’s what initially captivated me about this reading.”

So he put it on the syllabus. Students now enrolled in “Rise of the Machines? Understanding and Using Generative AI” are reading Shelley as they learn to embrace, however cautiously, powerful generative AI products such as ChatGPT, Dall-E, and Midjourney.

Equating AI chatbots to hideous monsters is probably a stretch, McCarty admits. But there’s no question these quickly expanding technologies are set to unleash momentous changes in education, research, art, and knowledge itself. It’s this irreversible tide that McCarty and co-instructor Christopher Stubbs, Harvard’s dean of science, want students in the new course to grapple with as they explore the technical, legal, moral, and philosophical challenges generative AI is forcing us all to face.

“Given that these tools can do pretty much any homework set we’ve ever assigned, we need to be attentive and adaptive,” said Stubbs, who conceived the idea for the course soon after OpenAI released ChatGPT more than a year ago. “But on a more positive note, students are going into a workforce where this will be an integral part of what they do. Teaching our students to be knowledgeable, ethical, AI-literate, appropriately skeptical users, I think, should be a major objective for a Harvard College undergraduate education.”

Neither Stubbs, a physicist who will return to his home department this summer after completing his tenure as dean, nor McCarty lays claim to expertise in building or training large language models or neural networks. Designing the course as a General Education offering was intentional. No background in computer science or AI is required: “just a sense of curiosity and spirit of adventure,” according to the syllabus.

“We are all novices and exploring,” said McCarty, who has previously offered courses with similar broad appeal, including one he co-taught with biology instructor Andrew Berry called “What is Life? From Quarks to Consciousness.”

First-year Andrew Abler, who plans to double concentrate in computer science and economics, was squarely in the course’s target demographic. “The main reason I took this course was because I was interested in AI, but I never really knew how it worked,” said Abler, who is considering a career in machine learning. “A class like this that’s not so technically hard, but is just for learning the basic concepts, is a good indicator of if I want to go further into it.”

“Rise of the Machines” is divided into weekly modules that crisscross major AI-related themes: ethical considerations and academic integrity; fiction, movies, and music; and implications for creative fields in the humanities. One class focused on the word “generative,” with McCarty providing an overview and history of artificial intelligence built on neural networks that “learn,” as ChatGPT does, by being fed large amounts of data and then generating responses based on patterns in that training data. He also gave a crash course on image generation via diffusion models, like those used by Dall-E and Google’s ImageFX.

Students in “Rise of the Machines” are encouraged to lean into generative AI technologies and understand how they work, even as the tools change and grow more powerful, seemingly week to week.

Senior chemistry and economics concentrator Kate Guerin ’24 wrote her midterm paper on the psychological effects of “deepfakes,” made all the more common by AI image and video generation.

“We are explicitly encouraged to use AI to help us brainstorm or even write essays,” Guerin said. “The professors are daring us to balance our time conducting independent research and writing with organically exploring effective AI prompting techniques to improve the writing.”

Susannah Su ’25, a junior in applied mathematics and concurrent master’s student in computational science and engineering, is similarly energized by the skill-building the professors are emphasizing.

“I think large language models will require students to be more critical thinkers and be able to see bigger pictures, instead of just remembering equations and formulas or robotically calculating things,” Su said.

Nikola Jurkovic ’25 is taking the course as part of his special concentration in artificial intelligence and society. He expects AI to have reverberations for decades to come — “as large as the impact of the industrial and agricultural revolutions, or even larger,” he mused.

He is especially keen to become fluent in AI safety, the field concerned with reducing catastrophic risks posed by advances in the technology, from misuse by bad actors to misaligned autonomous systems whose actions carry unintended consequences.

“I expect that within a decade or two, AI systems will be developed that are as capable, or more capable, than humans at most types of cognitive labor,” Jurkovic said. “At that point, unless a significant amount of technical research and policy work is done in advance, the chance of such a catastrophic outcome will be alarmingly high.”

The class has attracted attention beyond the undergraduate population. About 20 mid-career professionals in the Nieman Visiting Fellows journalism program and the Harvard Advanced Leadership Initiative are auditing the course.

Nieman Fellow Rachel Pulfer is using the course as a springboard for understanding AI’s influences — good and bad — on the field of journalism. “The Nieman lab has done some really interesting journalism looking at the potential of these models to help journalists — not to spit out ChatGPT-generated articles, but rather, to help with research, or with analysis of large data sets,” Pulfer said.

Seeing around the corner on long-term effects is difficult, but history contains some indicators. Ann Blair, Carl H. Pforzheimer University Professor in the Department of History, is scheduled to lecture on the 15th-century advent of the printing press and the profound changes it wrought in knowledge dissemination.

For McCarty, the analogy to Mary Shelley’s monster holds, in that generative AI combines several components of a world-changing moment: a rapid, vast improvement in information processing and distribution, like the printing press; the dethroning of humans as the only beings capable of cognition and creativity; and the extreme thought experiment of AI ending humanity in a catastrophic manner, not unlike the fears nuclear weapons ushered in during the mid-20th century.

“For me, ‘Frankenstein’ and its themes sit at the heart of one of those key transitions in history,” McCarty said.