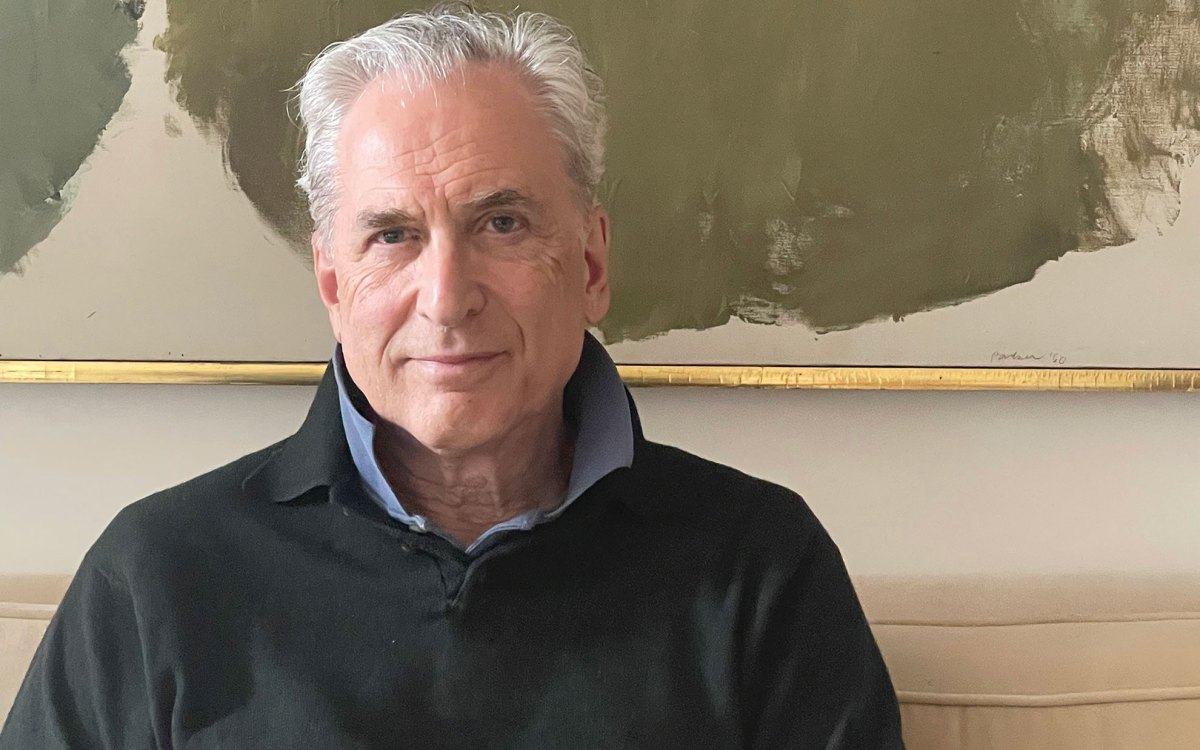

“We have come to expect more from technology and less from each other, and now we are so much further along this path of being satisfied with less,” said MIT Professor Sherry Turkle, who gave the keynote at the Conference on AI & Democracy.

Kris Snibbe/Harvard Staff Photographer

Why virtual isn’t actual, especially when it comes to friends

Tech, society expert Turkle warns growing number of chatbots for companionship isn’t good for individuals, or democracy

It can help clean a house, drive a car, write a school essay, help read a CT scan. It can also promise to be a good friend.

But MIT Professor Sherry Turkle advises drawing a hard line when it comes to that last application of artificial intelligence.

Turkle, a pioneer in the study of the impact of technology on psychology and society, says a growing cluster of AI personal chatbots being promoted as virtual companions for the lonely poses a threat to our ability to connect and collaborate in all aspects of our lives. Turkle sounded her clarion call last Thursday at the Conference on AI & Democracy, a three-day gathering of experts from government, academia, and the private sector to call for a “movement in the effort to control AI before it controls us.”

“We have come to expect more from technology and less from each other, and now we are so much further along this path of being satisfied with less,” said Turkle, the Abby Rockefeller Mauzé Professor of the Social Studies of Science and Technology in the Program in Science, Technology, and Society at MIT.

Technology can change people’s lives and make them more efficient and convenient, but the time has come to shift our focus from what AI can do for people and instead reflect on what AI is doing to people, said Turkle during her keynote address. Social media has already altered human interactions by producing both isolation and echo chambers, but the effects of personal chatbot companions powered by AI can lead to the erosion of people’s emotional capacities and democratic values, she warned.

“As we spent more of our lives online, many of us came to prefer relating through screens to any other kind of relating,” she said. “We found the pleasures of companionship without the demands of friendship, the feeling of intimacy without the demands of reciprocity, and crucially, we became accustomed to treating programs as people.”

In her work Turkle has researched the effects of mobile technology, social networking, digital companions such as Aibo, a robotic dog, and Paro, a robotic baby harp seal, and the latest online chatbots such as Replika, which bills itself as the “AI companion who cares.”

Turkle has grown increasingly concerned about the effects of applications that offer “artificial intimacy” and a “cure for loneliness.” Chatbots promise empathy, but they deliver “pretend empathy,” she said, because their responses have been generated from the internet and not from a lived experience. Instead, they are impairing our capacity for empathy, the ability to put ourselves in someone else’s shoes.

“What is at stake here is our capacity for empathy because we nurture it by connecting to other humans who have experienced the attachments and losses of human life,” said Turkle. “Chatbots can’t do this because they haven’t lived a human life. They don’t have bodies and they don’t fear illness and death … AI doesn’t care in the way humans use the word care, and AI doesn’t care about the outcome of the conversation … To put it bluntly, if you turn away to make dinner or attempt suicide, it’s all the same to them.”

Artificial intimacy programs derive some of their appeal from the fact that they come without the challenges and demands of human relationships. They offer companionship without judgment, drama, or social anxiety, she said, but lack genuine human emotion and offer only “simulated empathy.” Also troubling is that those programs condition people to be less willing to feel vulnerable or respect the vulnerability of others.

“Human relations are rich, demanding and messy,” said Turkle. “People tell me they like their chatbot friendship because it takes the stress out of relationships. With a chatbot friend, there’s no friction, no second-guessing, no ambivalence. There is no fear of being left behind … All that contempt for friction, second-guessing, ambivalence. What I see is features of the human condition, but those who promote artificial intimacy see as bugs.”

The effects of social media and AI can also be felt in societal polarization and the erosion of civic and democratic values, said Turkle. Experts need to raise the alarm about the perils that AI poses to democracy. Virtual reality is friction-free, while democracy depends on embracing friction, she said.

“Early on, [Silicon Valley companies] discovered a good formula to keep people at their screens,” said Turkle. “It was to make users angry and then keep them with their own kind. That’s how you keep people at their screens, because when people are siloed, they can be stirred up into being even angrier at those with whom they disagree. Predictably, this formula undermines the conversational attitudes that nurture democracy, above all, tolerant listening.

“It’s easy to lose listening skills, especially listening to people who don’t share your opinions. Democracy works best if you can talk across differences by slowing down to hear someone else’s point of view. We need these skills to reclaim our communities, our democracies, and our shared common purpose.”