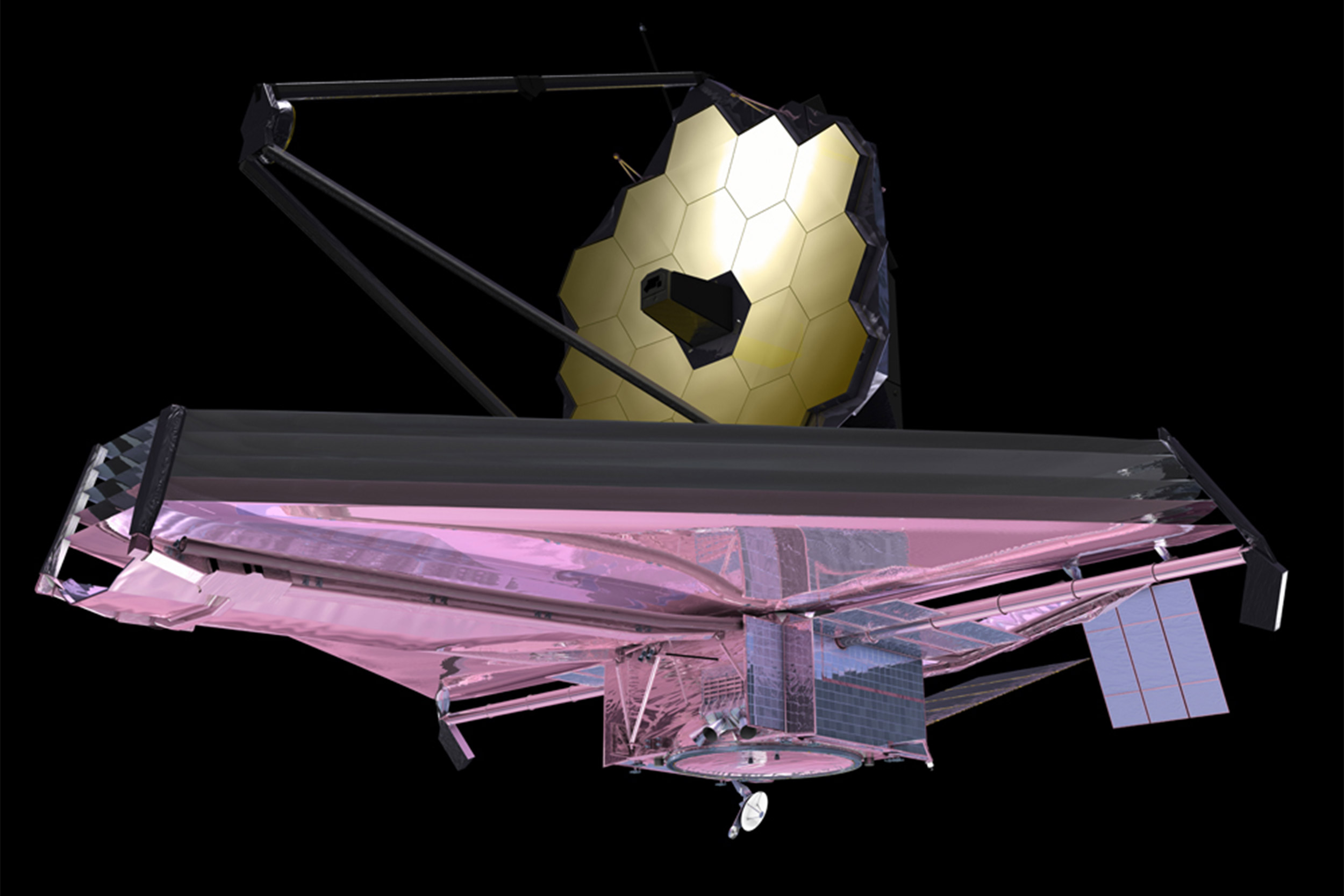

Artist’s conception of the James Webb Space Telescope.

Credit: NASA

Might be a balmy paradise. Might be a face-melting wasteland.

Models scientists use to predict exoplanet atmospheres no match for extraordinary precision of Webb telescope, study says

NASA’s $10 billion James Webb Space Telescope is expected to tell the story of the universe with unprecedented clarity over the next decade. But what if we misread the details?

In a study published in Nature Astronomy, researchers from Harvard and MIT warn that the models astronomers use to decode light-based signals from the atmospheres of exoplanets may not be precise enough to accurately represent the data the new telescope is capturing. They say that if these models aren't improved, the tools will run into an accuracy wall and, as a result, estimates of planetary properties such as temperature, pressure, and elemental composition could be off by an order of magnitude.

“What we have to do is simulate the atmosphere with our computational models and compare that to the reality of what JWST sees on these planets, but if our models are incomplete or incorrect, then you can imagine that this comparison of the model to reality won’t quite work and will lead to incorrect interpretations,” said Clara Sousa-Silva, an assistant physics professor at Bard College and a former fellow at the Center for Astrophysics | Harvard & Smithsonian, where much of the research took place.

“Our study does show that if we want to maximize the number and the quality of these insights that we can get from the amazing JWST data, then we still have a lot of work to do on Earth because there’s just no standardized, foolproof way to interpret our observations of alien atmospheres,” Sousa-Silva added.

CfA scientists Iouli Gordon, Robert J. Hargreaves, and Roman V. Kochanov also worked on the study. It was led by Prajwal Niraula and Julien de Wit of MIT.

The scientists say the problem lies with the opacity models astronomers use to describe and predict the composition of exoplanet atmospheres. The process starts with starlight. As a planet passes in front of its star, some of the star's light filters through the planet's atmosphere, where atoms and molecules absorb specific colors, or wavelengths. Observatories such as the Webb measure this filtered light, and the pattern of missing wavelengths points to the atoms and molecules present in the atmosphere.
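To make the transit idea concrete, here is a minimal Python sketch under heavily simplified assumptions; it is an illustration, not the researchers' method. The planet is treated as an opaque disk, and at each wavelength the atmosphere is assumed to block light completely up to some altitude. The function names and all numbers are hypothetical. A planet blocks a fraction (Rp/Rs)^2 of its star's light, so a wavelength that the atmosphere absorbs strongly makes the planet look slightly larger and the transit slightly deeper.

```python
# Toy model of a transit: the planet is an opaque disk, and at a given
# wavelength the atmosphere is assumed fully opaque up to some altitude.
# All names and numbers here are hypothetical, for illustration only.

def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fraction of the star's light blocked by an opaque disk: (Rp / Rs)^2."""
    return (planet_radius_km / star_radius_km) ** 2


def depth_with_atmosphere(planet_radius_km: float, star_radius_km: float,
                          opaque_altitude_km: float) -> float:
    """Transit depth when the atmosphere blocks light up to opaque_altitude_km."""
    effective_radius_km = planet_radius_km + opaque_altitude_km
    return transit_depth(effective_radius_km, star_radius_km)


if __name__ == "__main__":
    # Hypothetical Jupiter-sized planet around a Sun-sized star.
    rp_km, rs_km = 70_000.0, 700_000.0
    # Hypothetical opaque altitudes at a wavelength outside and inside
    # an absorption band (for example, one of water vapor).
    for label, altitude_km in [("outside band", 500.0), ("inside band", 1_500.0)]:
        depth = depth_with_atmosphere(rp_km, rs_km, altitude_km)
        print(f"{label}: transit depth = {depth:.6f}")
```

The difference between the two depths is tiny compared with the depth itself, which is why reading atmospheres this way demands the kind of precision the Webb provides.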

Astronomers break down the first layer of this data to see if something like water vapor is present. Then come opacity models, which describe how light interacts with matter and are used to infer atmospheric properties. This is where the researchers found the problem.
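A toy illustration of why the opacity inputs matter, assuming the simplest textbook case (Beer-Lambert absorption along a single line of sight, with optical depth equal to absorption cross section times column density). Real opacity models are far more detailed; the function names and values below are hypothetical. In this simple case, the amount of gas inferred from an observed absorption is inversely proportional to the assumed cross section, so an error in the opacity data passes straight into the inferred abundance.

```python
import math

# Simplified Beer-Lambert sketch (an assumption, not the study's model):
# optical depth tau = cross_section * column_density, and the fraction of
# light that gets through is exp(-tau).

def transmitted_fraction(cross_section_cm2: float, column_per_cm2: float) -> float:
    """Fraction of light surviving absorption along one line of sight."""
    tau = cross_section_cm2 * column_per_cm2
    return math.exp(-tau)


def inferred_column(observed_fraction: float, assumed_cross_section_cm2: float) -> float:
    """Invert Beer-Lambert: column = -ln(fraction) / assumed cross section."""
    return -math.log(observed_fraction) / assumed_cross_section_cm2


if __name__ == "__main__":
    true_sigma = 1.0e-22   # hypothetical cross section, cm^2
    true_column = 2.0e22   # hypothetical column density, molecules per cm^2
    observed = transmitted_fraction(true_sigma, true_column)

    # Recover the column with correct opacity data, then with a cross
    # section that is (hypothetically) overestimated by a factor of 3.
    for label, sigma in [("correct opacity", true_sigma),
                         ("opacity 3x too high", 3.0 * true_sigma)]:
        ratio = inferred_column(observed, sigma) / true_column
        print(f"{label}: inferred / true column = {ratio:.3f}")
```

With the correct cross section the true column is recovered; with the overestimated one, the inferred amount of gas comes out three times too small, even though the model reproduces the observed absorption exactly.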

When they simulated the kind of data the Webb might collect on exoplanets and ran it through the most commonly used opacity models, they found that the models could not keep pace with the Webb's precision.

The opacity models produced values for atmospheric conditions that were deemed "good fits" to the data but that could support multiple interpretations. The researchers found the inferred values could be off by about 0.5 to 1 dex, a logarithmic unit in which 1 dex equals one order of magnitude, or a factor of 10. They say this opens up an enormous range of possibilities, and current models can't distinguish the accurate ones from the wrong ones.
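For readers unfamiliar with the unit: a dex is a base-10 logarithmic unit, so a difference of d dex corresponds to a multiplicative factor of 10^d. The two small Python helpers below (hypothetical names, purely illustrative) convert between the two.

```python
import math

# A difference of d dex corresponds to a multiplicative factor of 10**d.

def dex_to_factor(dex: float) -> float:
    """Convert a difference in dex to a multiplicative factor."""
    return 10.0 ** dex


def factor_to_dex(factor: float) -> float:
    """Convert a multiplicative factor to a difference in dex."""
    return math.log10(factor)


if __name__ == "__main__":
    for d in (0.5, 1.0):
        print(f"{d} dex is a factor of {dex_to_factor(d):.2f}")
```

So 0.5 dex is a factor of about 3.16 and 1 dex a factor of 10; going the other way, a factor of 5 works out to about 0.7 dex.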

For example, one group could determine that a planet's temperature is about 80 degrees F, a balmy paradise. Another group, looking at the same planet, could interpret the data to say the planet is a scorching wasteland at 572 degrees F. The current models also wouldn't be able to tell whether a planet's atmosphere is 5 or 25 percent water.

Misinterpretations like these could make the difference in determining whether an exoplanet could support life.

The paper provides some ideas for refining current models or creating better ones, but none are ready to go. Getting there, the researchers say, will require many more Webb measurements of planetary atmospheres, along with a lot of laboratory and theoretical work to carry out new measurements and calculations that refine our understanding of how light interacts with various molecules.

“These data will then have to be validated and disseminated through spectroscopic databases,” Gordon said. “This will take a few years, but it is definitely a feasible solution.”