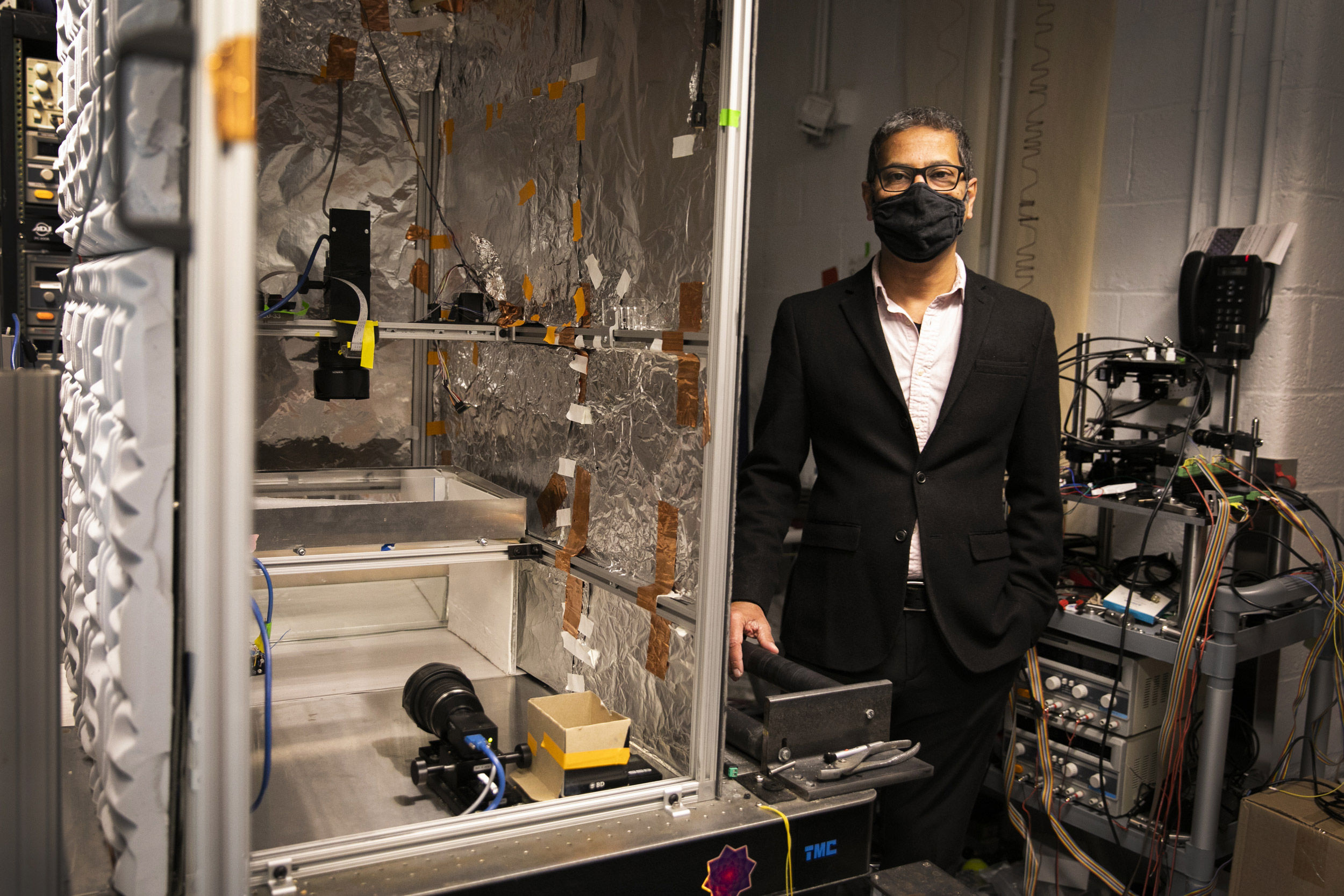

Venkatesh Murthy has already been probing the complexities of the brain, one side of the new institute’s mission, through his research on how the brain processes odor.

Stephanie Mitchell/Harvard Staff Photographer

University seen as well-equipped to meet goals of ambitious institute

Initiative at intersection of artificial, natural intelligence will benefit from existing breadth, experience, scholars say

The mission of the new Kempner Institute for the Study of Natural and Artificial Intelligence, which was announced by Harvard and the Chan Zuckerberg Initiative this week, is undeniably ambitious, perhaps even aggressively so. It aims to uncover the roots of intelligence, both artificial and natural, and to use what is learned about each to inform advances in the other.

“I think that the ultimate goal is very, very hard,” said Zak Kohane, head of Harvard Medical School’s Department of Biomedical Informatics and a member of the new institute’s steering committee. “It’s a reach goal, but I think that by going for the reach, we will bring the state of the art considerably upwards.”

Francesca Dominici, Clarence James Gamble Professor of Biostatistics, Population, and Data Science at the Harvard T.H. Chan School of Public Health and co-director of Harvard’s Data Science Initiative, agrees that the goal is daunting but says the University is well-equipped to take it on.

“The institute has a very ambitious goal: ‘to reveal the fundamental mechanisms of intelligence and to endow artificial intelligence with features of natural intelligence, and to apply these new AI technologies for the benefit of humanity,’” said Dominici, a member of the new institute’s steering committee. “So, you need expertise in neuroscience, cognitive science, biology, psychology, social science, behavioral science, and to bring people together to develop new theories. Then, we are trying to study the brain, figure out the brain, figure out behavior, figure out cognitive science, endow new algorithms, develop new algorithms with features of natural intelligence and then solve important problems.”

Dominici said that Harvard’s breadth and size are key. Not only does the University have experts in the range of fields that will be needed, it also has them in large numbers, along with some of the necessary infrastructure already in place. The Kempner Institute’s mission will require this kind of scale. It will take considerable resources and enormous intellectual capacity: not just individual scholars but communities of them in key areas; early career scientists pioneering not just knowledge but methods, tools, and techniques; top students eager to dive into the world’s toughest problems; and an array of disciplines that can come at the problem from different directions.

“Harvard is huge. I do think it has the capacity, the number of faculty that it can bring together, to really achieve what I consider probably one of the most ambitious goals in science that I have ever heard,” Dominici said.

In addition to her work applying statistical and AI methods to the world’s health problems, Dominici is heading, together with David Parkes, the George F. Colony Professor of Computer Science, a University-wide effort to understand something key to the advance of AI: data and the science of data. The Harvard Data Science Initiative, with more than 50 affiliated faculty, seeks to advance the rapidly emerging science of data — and the mathematics, statistics, and computer science needed to handle it. The discipline seeks to improve handling of the flood of information the modern era is producing, from Earth-gazing satellites to government document archives to the personal data piling up minute by minute in smart watches, cellphones, and browser histories.

With the institute’s ambitious goals, Harvard’s breadth and size are key, says Francesca Dominici, Clarence James Gamble Professor of Biostatistics, Population, and Data Science at the Harvard T.H. Chan School of Public Health.

Kris Snibbe/Harvard Staff Photographer

Dominici also pointed out that because AI algorithms are trained on data sets, their performance will be only as good, and only as unbiased, as the underlying data.

“Artificial intelligence becomes artificial stupidity with bad data and bad data science,” she said.

Harvard’s expertise is not limited to AI-related fields such as data science and computer science, the second-largest undergraduate concentration. The University also has researchers working on the other side of the Kempner Institute’s mission, probing the complexities of the brain, both at the Faculty of Arts and Sciences’ Center for Brain Science, which has more than 50 faculty affiliates, and at Harvard Medical School’s Neurobiology Department, which has about 40 faculty members.

Venkatesh Murthy, the Raymond Leo Erikson Life Sciences Professor of Molecular and Cellular Biology and Paul J. Finnegan Family Director of the Center for Brain Science, studies how the brain processes odor and then motivates behavior. Working with mice and ants, often with AI-based tools, Murthy explores odor processing because of its central importance in the animal kingdom. Olfaction may be important in the AI context, he said, because the neural networks involved are quite different from those involved in visual processing, an area where AI systems are well-developed and are employed today in self-driving cars, for example.

“The idea is that the brain circuits that underlie this sense of smell have a very different architecture than the visual system,” said Murthy, another member of the new steering committee. “By understanding that, we one day may be able to say, ‘Oh, that structure, that architecture, is good for a certain kind of problem-solving.’ That’s the hope. Even though we are talking about a particular sense, we certainly hope this leads to some general principles.”

In fact, Murthy said, researchers like himself who study specific sensory pathways do so with the recognition that even though vision, hearing, smell, and touch are processed differently in the brain, in living things they ultimately come together in some intelligent mix to motivate behavior. How animals learn from all these differing inputs seems quite different from how current AI systems learn.

“The early stages of these pathways are fairly separated out, but pretty soon things are mixed up,” Murthy said. “Maybe the larger question is, how does the brain organize this to keep it separate enough so that you can extract the real signal, but bring them together quickly enough so that you make better judgments, better inferences? I don’t doubt that when we go into general artificial-intelligence systems, taking these multiple streams of information has got to be a fundamental part of it.”

Beyond the scientific and technical aspects of AI, Harvard scholars have also been studying AI’s impact on society and culture, and working to shape it, through the lenses of law and government policy, business and economics, and public health and medicine.

In March, researchers at Massachusetts General Hospital and Harvard Medical School developed AI to screen 80 currently available medications to see whether any might provide a shortcut to treating Alzheimer’s disease. One was promising enough that a clinical trial will be starting soon.

In September, gastroenterologists at HMS and Beth Israel Deaconess Medical Center used an AI-based computer-vision algorithm to backstop physicians reviewing colonoscopy scans and found that it reduced by 30 percent the chance that a doctor would miss a potentially cancerous polyp. Across the University, researchers, faculty, and students are working to improve algorithms, boost computing power, and push the boundaries of what AI can do.

Harvard Law School’s metaLAB(at)Harvard has for several years used art to explore issues such as our unthinking dispersal of private data to tech giants via social media, AI’s ability to listen in on us with microphones on phones and computers — which we embrace in our use of personal assistants like Alexa and Siri — and even fundamental questions such as whether humans really should be the role model on which to pattern AI.

At Harvard Business School, the Managing the Future of Work project is studying the repercussions of developing and deploying AI in areas such as industrial automation, while the wider business community dives headfirst into the AI future, spending tens of billions of dollars annually on AI-based systems.

Sheila Jasanoff, the Pforzheimer Professor of Science and Technology Studies at the Harvard Kennedy School and founder of the Program on Science, Technology and Society, said that STS training offers critical ethical insights to scientists studying the toughest problems facing the world today, not least something as defining to humanity as intelligence. Viewing science as something apart from the society that creates it, fosters it, and then uses its fruits is borderline dangerous, Jasanoff said, and the program, which has more than 50 faculty affiliates, mobilizes talent from across Harvard to build cross-disciplinary reflection.

When it comes to AI, Jasanoff said, key questions that might not be considered in the lab include not just which problems AI is designed to solve but which it is not, and, importantly, why. What motivates the development of AI in the first place? If it’s merely money and consumer demand, are there harms associated with meeting, or even creating, that demand? How do we address the integration of AI into weapons of war, which, in essence, get robots to do killing we are unwilling to do ourselves? And to what extent are seemingly near-universal attributes of human intelligence, such as culture, spirituality, and religion, integral to our broader understanding of intelligence?

“These are questions that tend to get silo-ized,” Jasanoff said. “The STS program is an attempt to understand better how the ways in which our societies are organized at multiple levels can give rise to the framings of scientific projects, and also their uptake in societies that have within them the seeds of future problems.”

Take the problem of bias in data. Experts agree that as AI finds its way into more parts of our lives, we must be vigilant to avoid using biased data, which could bake discrimination into systems that may be viewed as objective and impartial. For example, one well-known study by researchers at MIT and Stanford showed that three commercial facial-recognition programs had such problems, producing much higher error rates in identifying the gender of darker-skinned individuals. Unless care is taken, bias, whether it comes from data gathered in a world steeped in it or from the conscious or unconscious prejudices of programmers, can increase inequity.

Finale Doshi-Velez, Gordon McKay Professor of Computer Science and a member of the new steering committee, said that one way to ensure equity is by creating diversity among the students, fellows, teaching staff, and faculty working on this problem. Another way is by making sure ethics are taught in our classrooms, she said. Harvard’s Embedded EthiCS Program is a key avenue for getting computer science students to think about the ethical implications of work often thought of as purely technical, even though, once out in the world, its impact can reach things as diverse as approving a car loan, sentencing those accused of a crime, and passing resumes through initial screening.

The Embedded EthiCS Program seeks to embed ethical teaching into existing computer science courses by matching philosophy graduate students with course faculty and highlighting potential ethical issues in course topics. The goal is to reinforce to the hundreds of computer science students that the ethics of what they do — and what they get computers to do — should be an integral part of their everyday work, rather than an afterthought.

“When you think about all the things you need to make things work in the real world, in ways that are effective and ethical — all the boxes you’re trying to check — you can’t know all the complexities,” Doshi-Velez said. “But at a place like Harvard, you have experts in every one of those boxes.”