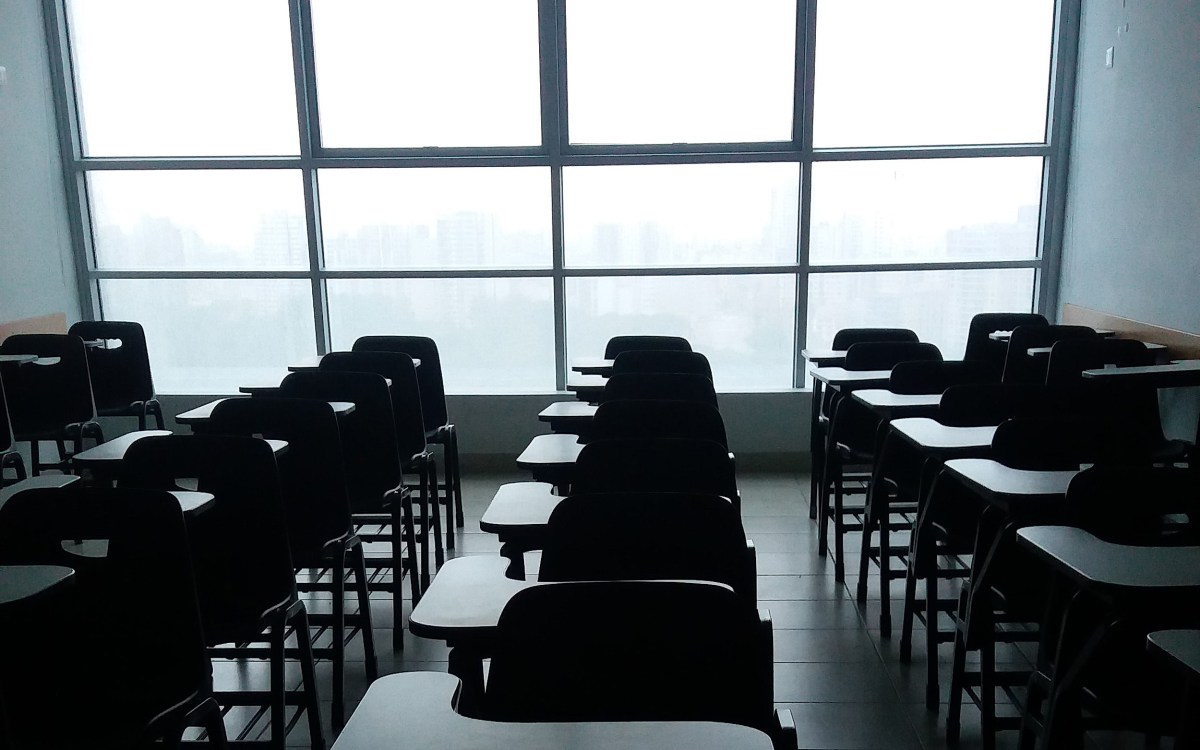

A new study looking at the efficacy of behavioral interventions for student involvement in online courses offers some suggestions on the road forward.

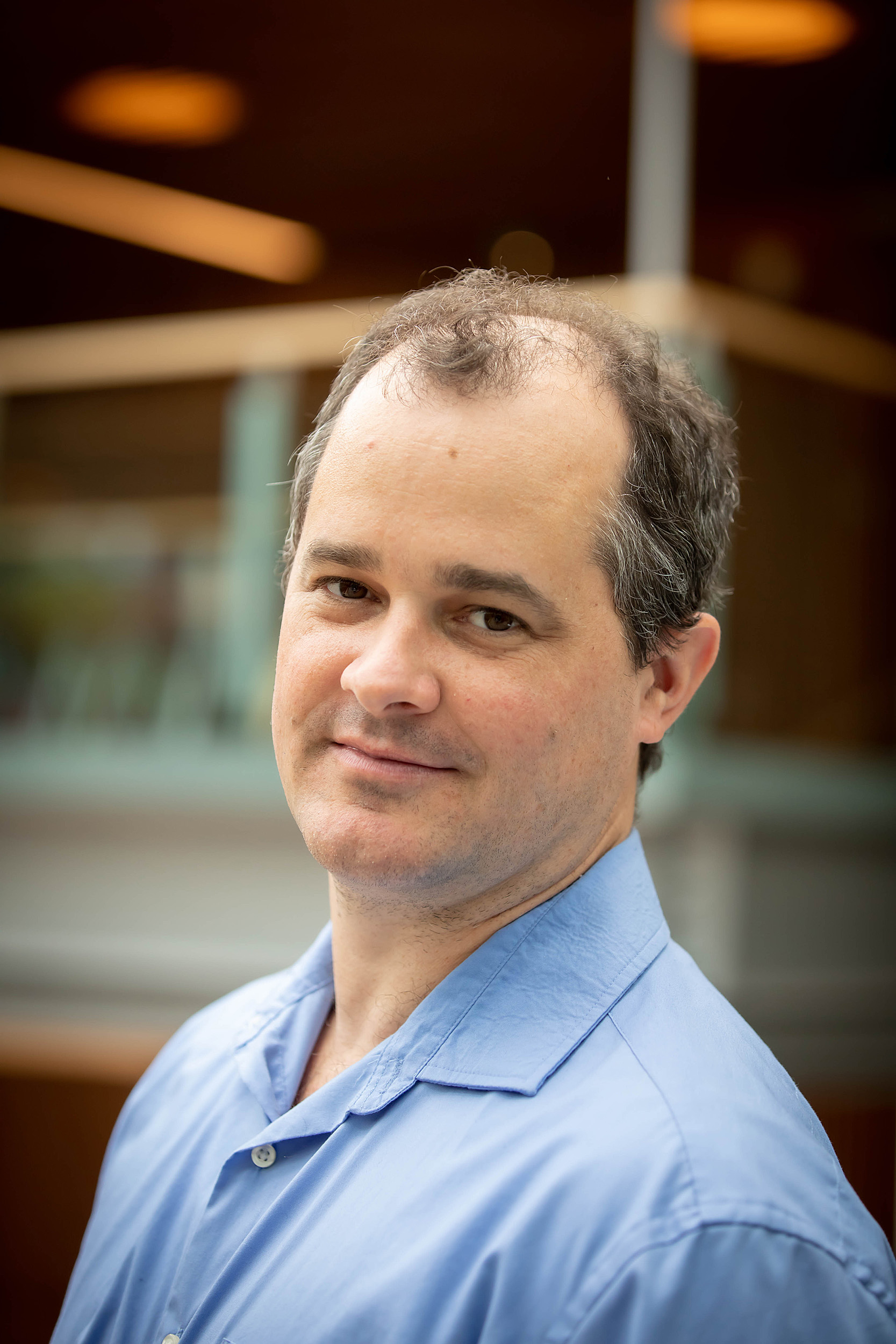

Kris Snibbe/Harvard Staff Photographer

Another disappointment for MOOCs

Study: Interventions to lift completion rates fall flat, but research points way to future inquiry

Hopes were sky-high about eight years ago when massive open online courses, known as MOOCs, emerged prominently on the scene. Proponents hoped the free offerings would democratize and revolutionize education by making high-quality courses available to anyone with a computer and internet access.

But it hasn’t worked out that way. Student completion rates have been poor, and a major new study found that behavioral interventions, which had seemed to show some promise in raising those rates, are no magic bullet. But, researchers say, the results offer some suggestions on the road forward, one that will grow increasingly important if online learning assumes a bigger role in the U.S. education system, as expected.

During a 2½-year study, social scientists from Harvard, Carnegie Mellon University, Cornell University, Massachusetts Institute of Technology, Queensland University of Technology in Australia, University of Toronto, and Stanford University set out to prove at scale that the interventions, such as surveys that help students plan for the course, could significantly improve completion rates.

From September 2016 to May 2019, researchers tracked a quarter of a million students from almost every country in about 250 online courses on the edX platform. The researchers hypothesized that five promising interventions given as pre-course surveys would have medium-to-large effects on boosting completion rates for the online classes.

The scientists expected the large-scale results to reinforce previous work done with smaller samples (in one study of 60,000 students, long-term planning interventions improved completion rates in MOOCs by as much as 29 percent for committed English-fluent students).

Here, researchers made predictions on how they thought each intervention would help certain subgroups of students. For example, they predicted the social accountability intervention, where students pick someone to hold them accountable for their progress in the course, would most benefit students in “less-individualistic countries.” But they found no significant improvement in overall completion rates from any of the five interventions tested. The results suggest that behavioral interventions are no panacea when it comes to online classes and that more research is needed to validate interventions that show significant promise by testing them at scale to see in what context they work, and for whom.

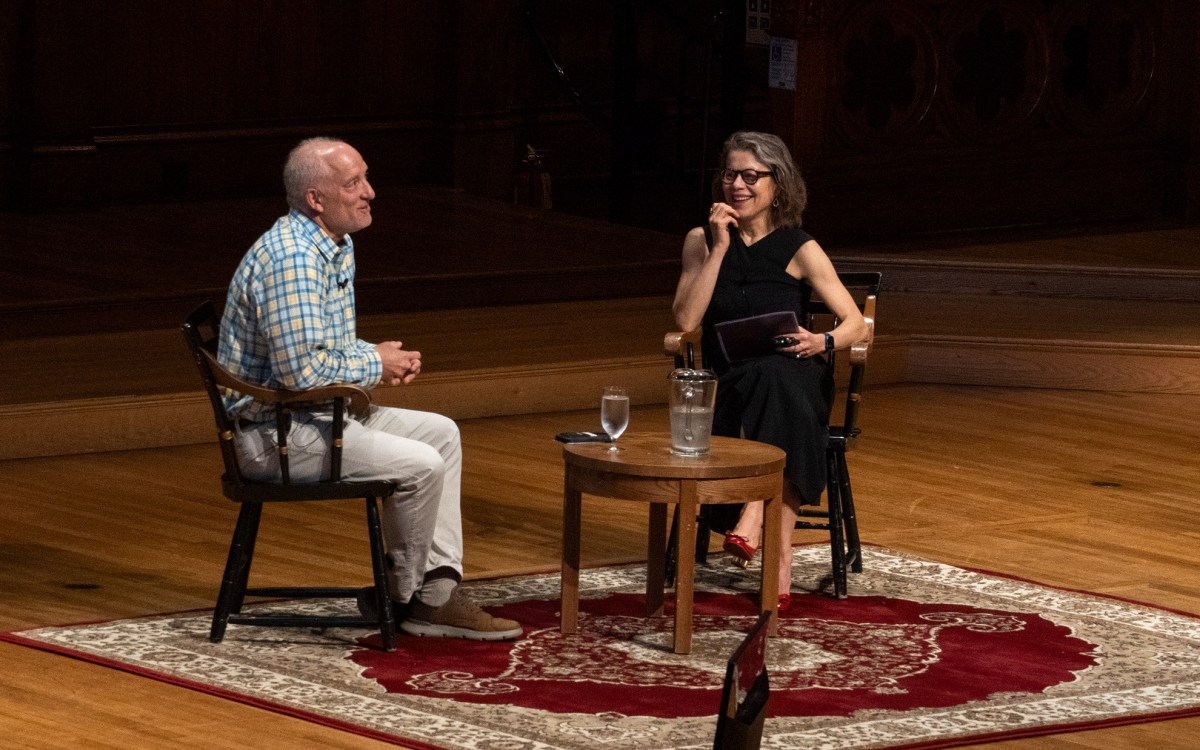

“It was a little bit surprising that they were less effective than initial results had indicated,” said one of the study’s co-authors, Dustin Tingley, a professor of government and deputy vice provost for advances in learning in Harvard’s Office of the Vice Provost for Advances in Learning (VPAL). “When you have these [initial] studies that show some promising results, it can be tempting to stop there and say, ‘We’ve now reached some conclusion,’ and a lot of academic work does exactly that.” This study shows that “When we see promising things, we have to ask what’s the next step that we take in order to explore whether or not that’s actually the case. Otherwise, people will read the initial studies and think, ‘Hey, here’s a magic bullet.’”

The study’s findings have added relevance as much of education moves online as a result of the novel coronavirus pandemic, but only to a certain extent since many MOOCs are free or low-cost and usually don’t lead to a degree.

“It’s a different learning context,” Tingley said. “It’s relevant insofar as it’s showing that it is difficult to increase the completion rate in the [online] setting. … It’s relevant in the broad sense of researchers thinking about low-cost ways to nudge people to have more-effective learning experiences. … As with COVID, online education is not going to go away. Even post-COVID, I think, people realize that we need to be preparing for these types of learning settings. If that’s the case, then we need to take seriously rigorous research about how people learn online.”

The study was published in the Proceedings of the National Academy of Sciences in June. It was led by Rene Kizilcec, an assistant professor at Cornell; Justin Reich, an assistant professor at MIT and a former research fellow at Harvard; and Michael Yeomans, who was a postdoctoral fellow at Harvard Business School during the study and is now an assistant professor at Imperial College Business School.

The research was based on data from 247 online courses offered by Harvard, MIT, and Stanford with 269,169 registered students. The researchers preregistered their hypotheses and plans for analyzing the data at a repository hosted by the Open Science Framework.

Students were randomly assigned to receive one of the interventions being studied after answering survey questions about themselves. Some students received none as a control.

The five interventions that researchers used included three “self-regulation” interventions, all of which involved planning ahead, and a motivational activity, called a “value-relevance” intervention, that asked students to identify values important to them and write about how completing the course reflected those values.

While the study’s main results were disappointing, the paper’s authors point to some silver linings in the process and data.

“Part of our job is to try to do research that helps learners achieve their goals and part of our job is to be as transparent, candid, and rigorous with policymakers about how effective the things that we design are,” said Reich, who’s also a former lecturer at the Harvard Graduate School of Education, and discusses the study further in a forthcoming book. “On the one hand, it doesn’t feel great to have to go back to everyone and say these things don’t work as well as we had hoped they did. But on the other hand, it feels good to be able to say we did a really rigorous study to get some good precise estimates on how effective these things are.”

Researchers found that the self-regulation interventions, such as social accountability and long-term planning, raised student engagement in the first few weeks, but that the effect faded as the course went on.

They also found that the value-relevance intervention helped improve completion rates among students from less-developed countries by almost 3 percent in both years of the study, but that it only happened in courses where there was already a large difference in completion rates between students in less-developed countries and students in more-developed countries. In some courses that did not have this global achievement gap, the interventions actually had the opposite effect.

The researchers tried using machine-learning algorithms to optimize interventions for the second year based on the first year’s data. The results were no better than not assigning interventions or randomly assigning them to all.

The overall findings led the scientists to suggest that further study is necessary to pinpoint when these interventions work and for whom they work. Efforts should also include building the data-processing infrastructure needed to run these types of data-intensive studies as Harvard’s VPAL office has, Tingley said.

“Context matters,” the authors wrote in the study. “In a new paradigm, the question of ‘what works?’ would be replaced with ‘what works, for whom, right here?’”