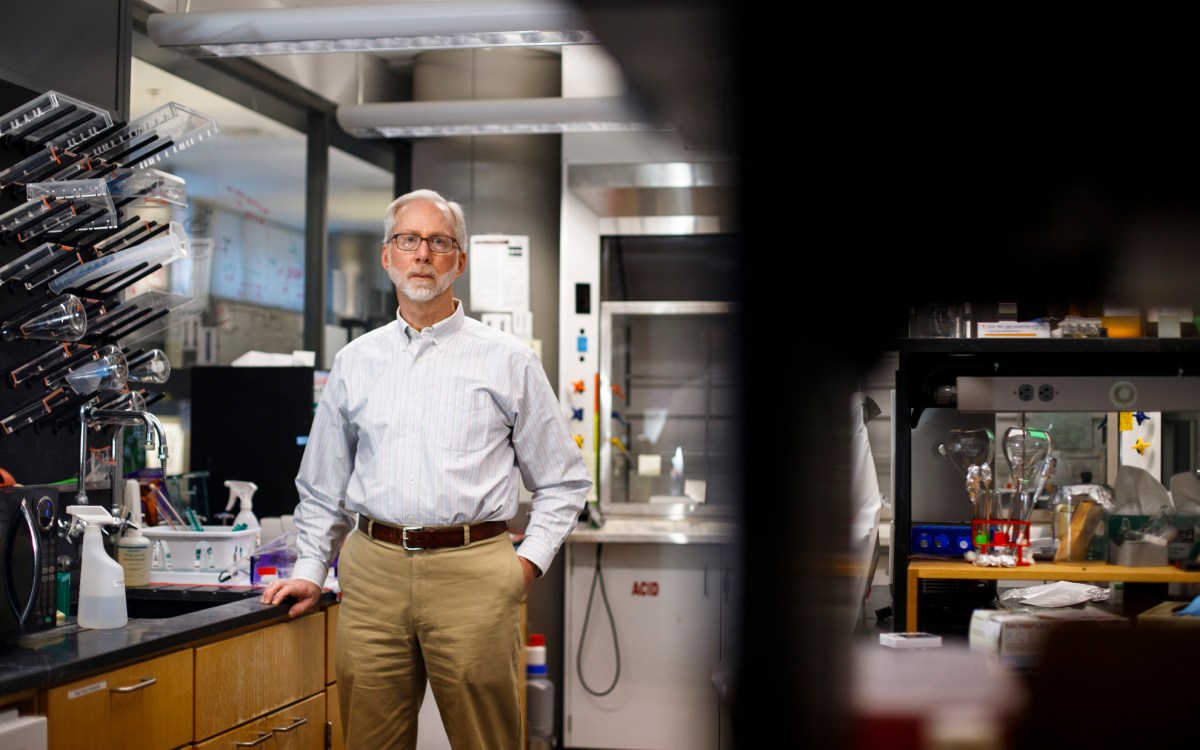

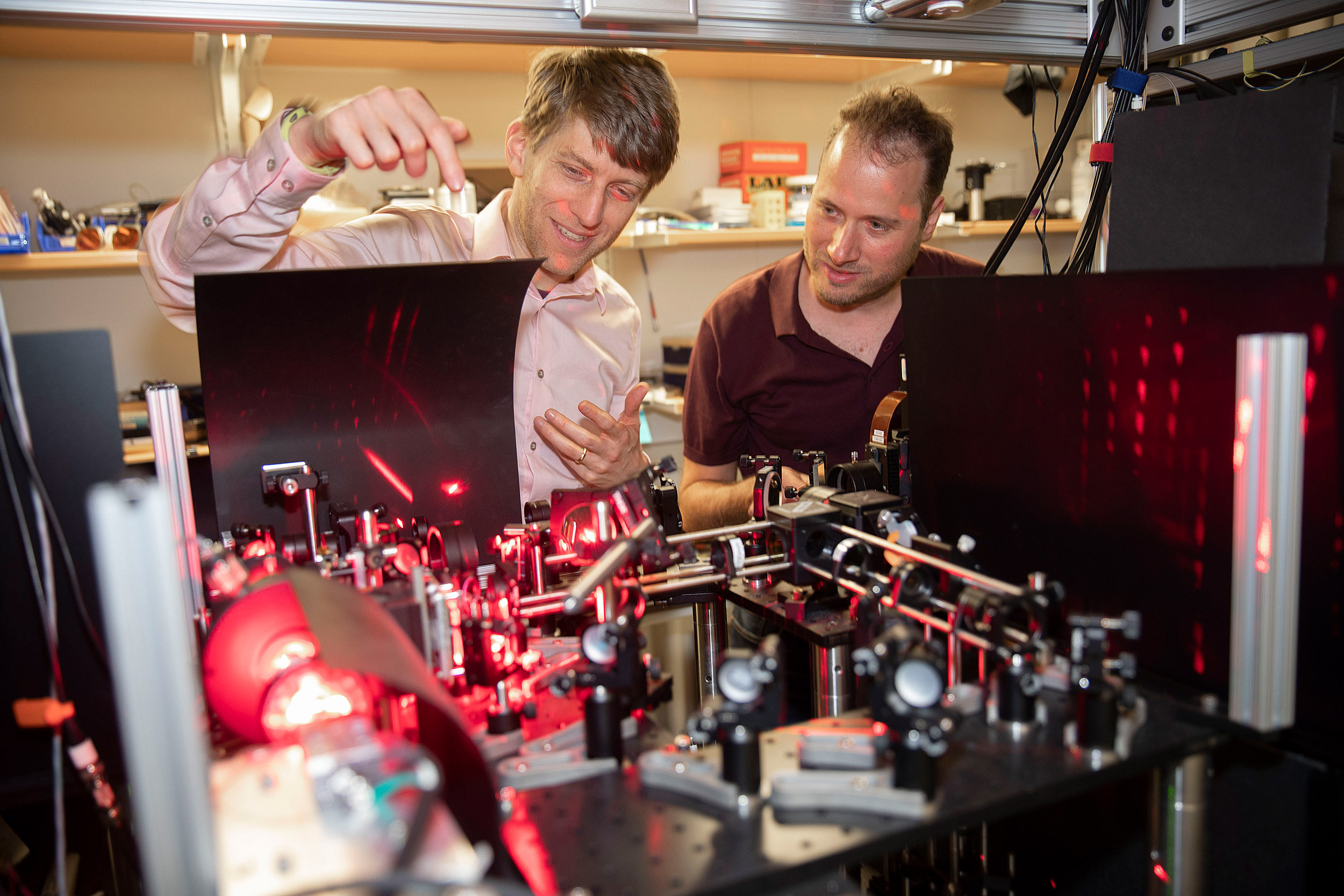

Researchers Adam Cohen (left) and Yoav Adam have found a way to watch neurons fire in real time in live mice.

Photos by Kris Snibbe/Harvard Staff Photographer

A new vision for neuroscience

Live recordings of neural electricity could revolutionize how we see the brain

Red and blue lights flash, and a machine whirs like a distant swarm of bees. In a cubicle-sized room, Yoav Adam captures something no one has ever seen before: neurons flashing in real time, in a walking, living creature.

For decades scientists have been searching for a way to watch a live broadcast of the brain. Though neurons send and receive massive amounts of information (Toe itches! Fire hot! Garbage smells!) at speeds of up to 270 miles an hour, the brain’s electricity is invisible.

“You can’t see the electricity flowing through the neurons any more than you can see the electricity in a telephone wire,” said Adam Cohen, professor of chemistry and chemical biology and of physics at Harvard. So, to observe how neurons turn information (toe itches) into thoughts (“itching powder”), behaviors (scratching), and emotions (annoyance), we need to change the way we see.

In a new study published in Nature, Cohen does just that.

With first author and postdoctoral scholar Yoav Adam and a multi-institutional, cross-disciplinary research team, Cohen sheds literal light on the brain, transforming neural signals into sparks visible through a microscope.

Those sparks come from a protein called archaerhodopsin. When illuminated with red light, the protein can turn voltage into fluorescence (this and similar tools are known as genetically encoded voltage indicators, or GEVIs). Like an ultrasensitive voltmeter or the hair on your arm, archaerhodopsin changes form when it gets a jolt.

The Cohen team paired this with a similar protein that, when illuminated with blue light, causes neurons to fire. “This way,” Adam said, “we can both control the activity of the cells and record the activity of the cells.” Blue light controls; red light records.

The protein pair worked well in neurons outside the brain, in a dish. “But,” Cohen said, “the Holy Grail was to get this to work in live mice that are actually doing something.”

They finally found their grail after five years of intense collaboration among 24 neuroscientists, molecular biologists, biochemists, physicists, computer scientists, and statisticians. First, they tweaked the protein to work in live animals; then, with some adept genetic manipulation, they positioned the protein in the right part of the right cells in the mouse brain. Finally, they built a new microscope, customized with a video projector to shine a pattern of red and blue light into the live mouse’s brain, and onto specific cells of interest.

“You basically make a little movie,” Cohen said.

With red and blue light patterned on the brain, Adam can control which neurons fire when and can capture their electric activity as light. To identify individual neural signals in the bright chaos, the team designed one final tool: a software program that can extract specific neural sparks, like finding individual fireflies in a swarm.
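The article does not describe how the team's software works, so the following is only a toy illustration of the general idea of picking out spikes from a fluorescence recording, not the Cohen Lab's method. It assumes a single cell's brightness has already been sampled into a list of numbers, and it flags moments when the brightness jumps well above its typical level. The function name `detect_spikes`, the `threshold_mads` parameter, and the sample values are all hypothetical.

```python
import statistics


def detect_spikes(trace, threshold_mads=5.0):
    """Return the indices where the trace first rises above a noise-based cutoff.

    The cutoff is the median brightness plus `threshold_mads` times the
    median absolute deviation (MAD), a simple and robust estimate of noise.
    """
    baseline = statistics.median(trace)
    mad = statistics.median([abs(x - baseline) for x in trace])
    if mad == 0:
        # No measurable noise to scale against, so report nothing.
        return []
    cutoff = baseline + threshold_mads * mad

    spikes = []
    above = False
    for i, value in enumerate(trace):
        # Count only the rising edge so one long flash is a single spike.
        if value > cutoff and not above:
            spikes.append(i)
        above = value > cutoff
    return spikes


# Made-up brightness samples: a noisy baseline near 1.0 with two flashes.
trace = [1.00, 1.02, 0.98, 1.01, 0.99, 1.50, 1.45, 1.00,
         1.01, 0.99, 1.02, 0.98, 1.60, 1.00, 0.99, 1.01]
print(detect_spikes(trace))  # [5, 12]
```

A real recording would also need to separate overlapping cells and correct for motion and fading of the fluorescent signal, which this sketch ignores.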

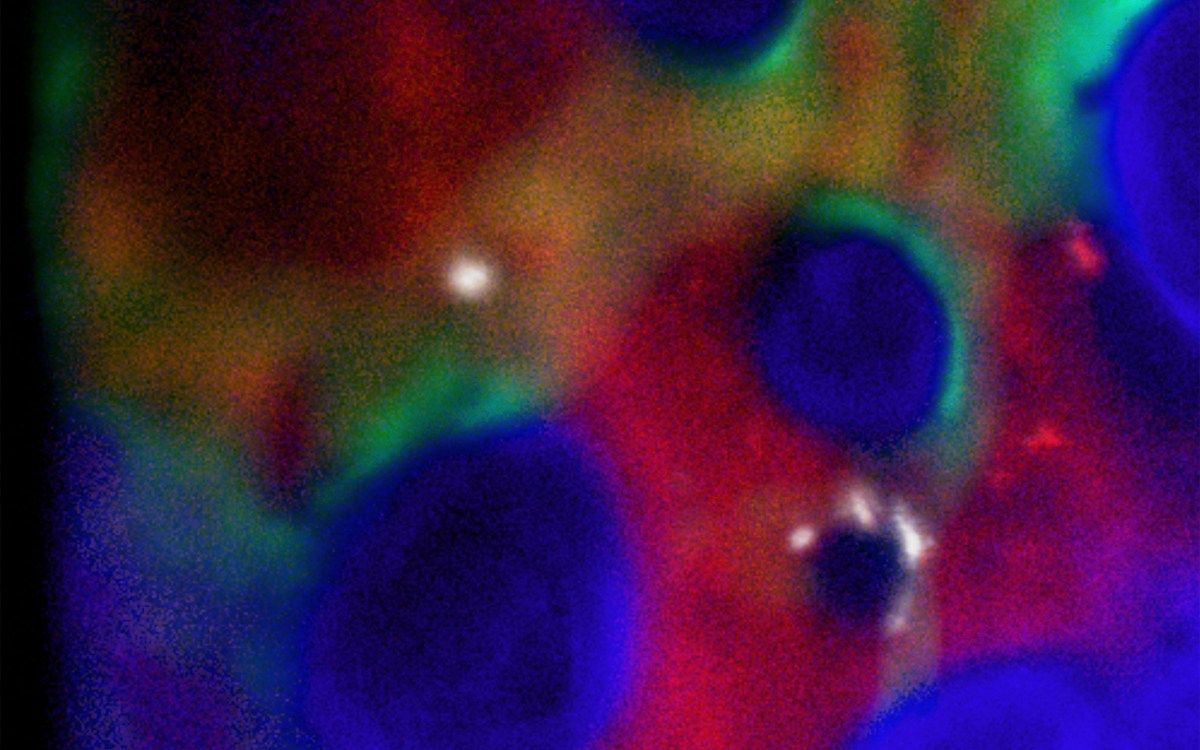

An electrical spike shoots through a single neuron in milliseconds. Neural signals can travel from cell to cell at speeds up to 270 miles per hour, but the Cohen Lab can catch them.

Courtesy of Cohen Lab

But the team’s advances would not be possible without a 1980s ecological survey. In the dense salt of the Dead Sea, an Israeli ecologist found a microorganism called Halorubrum sodomense that performs a neat trick: converting sunlight into electrical energy in a primitive form of photosynthesis. For almost 30 years, the organism and its talented protein (archaerhodopsin 3) floated undisturbed.

Then, in 2010, researchers at the Massachusetts Institute of Technology got the protein to convert light into electricity while embedded in a brain. With just a little light, the researchers could force a neuron to fire and, if they chose well, even manipulate an animal’s behavior.

Cohen was impressed, and MIT’s success made him wonder: Could we reverse the trick? Could the protein convert the electrical activity of neurons into detectable flashes of light? After many years of collaborations, failures, genetic manipulations, and mini victories, he finally got a mighty, if simple, answer: Yes.

Cohen is not the first to record neural signals: Hair-thin glass tubes inserted into brain tissue can get the job done as well. But such devices record only one or two neurons at a time and, like a splinter, must be removed before they cause damage. Other tools monitor calcium, which floods neurons when they fire. But, according to Cohen, “depending on exactly how you do it, it’s 200 to 500 times slower than the voltage signal that Yoav is looking at.”

In a third of the time it takes to blink, the Cohen team can capture a precise image of a neuron’s spike pattern, like recording the fine details of a firefly’s wings midflight. They can record up to 10 neurons at a time, a feat impossible with other existing technologies, and, three weeks later, find the exact same neurons to record anew.

Recently, Adam has expanded his vision to examine how behavioral changes impact neural chatter. For his first attempt, he started simple: A mouse walked on a treadmill for 15 seconds and then rested for 15. During both stages, Adam projected blue and red light onto the hippocampus region of the brain, a hub for learning and memory.

“Even just with simple changes in behavior — walking and resting — we could see robust changes in the electrical signals, which also varied between different types of neurons in the hippocampus,” Adam said.

Next, the Cohen team will add more complexity to the mouse’s treadmill environment: rough Velcro circles, whisker flicks, and a sugar station. Adam, in particular, wants to learn more about spatial memory — for example, can the mouse remember where to find the sugar station? “No one knows what a memory really looks like,” Cohen said. Soon, we might.

In the meantime, the team members will continue to sort through their intricate data and improve their optical, molecular, and software tools. Better tools could capture more cells, deeper brain regions, and cleaner signals. “A mouse brain has 75 million cells in it,” Cohen said, “so depending on your perspective, we’ve either done a lot or we still have quite a long way to go.”

But they will keep pushing forward. Despite five years of hard work, the end result always looked possible: “I could see the light,” Adam said.