Image courtesy of Moritz Bächer

Action figures come to life

Tool developed at Harvard adds ‘3-D print’ button to animation software

Watch out, Barbie: Omnivorous beasts are assembling in a 3-D printer near you.

A group of graphics experts led by computer scientists at Harvard has created an add-on software tool that translates video game characters, or any other three-dimensional animations, into fully articulated action figures with the help of a 3-D printer.

The project is described in detail in ACM Transactions on Graphics, a journal of the Association for Computing Machinery (ACM), and will be presented at the ACM SIGGRAPH conference on Aug. 7.

In addition to its obvious consumer appeal, the tool is a remarkable piece of code and an unusual conceptual exploration of the boundary between the virtual and physical worlds.

“In animation you’re not necessarily trying to model the physical world perfectly; the model only has to be good enough to convince your eye,” explains lead author Moritz Bächer, a graduate student in computer science at Harvard’s School of Engineering and Applied Sciences (SEAS). “In a virtual world, you have all this freedom that you don’t have in the physical world. You can make a character so anatomically skewed that it would never be able to stand up in real life, and you can make deformations that aren’t physically possible. You could even have a head that isn’t attached to its body, or legs that occasionally intersect each other instead of colliding.”

Bringing a virtual character into the physical world therefore turns the traditional animation process on its head. In a sort of reverse rendering, the image on the screen must be adapted to accommodate real-world constraints.

Bächer and his co-authors demonstrated their new method using characters from Spore, an evolution-simulation video game. Spore lets players create a vast range of creatures with numerous limbs, eyes, and body segments in almost any configuration; the game then uses a technique called procedural animation to animate whatever body plan it receives, quickly and automatically.
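To make the idea of procedural animation concrete, here is a minimal Python sketch of one common flavor: generating a walk cycle from the body plan alone, with no hand-made keyframes. The source does not describe how Spore animates its creatures, so the wave-gait rule, the `Leg` type, and the functions `build_legs`, `leg_phase`, and `swing_angle` are all hypothetical illustrations.

```python
import math
from dataclasses import dataclass


@dataclass
class Leg:
    side: int   # hypothetical convention: -1 = left, +1 = right
    index: int  # leg pair number along the body, 0 = front


def build_legs(num_pairs: int) -> list[Leg]:
    """Create a left and a right leg for every pair in the body plan."""
    return [Leg(side, i) for i in range(num_pairs) for side in (-1, 1)]


def leg_phase(leg: Leg, num_pairs: int) -> float:
    """Phase offset in [0, 1) for one leg in a simple wave gait."""
    # Each pair lags the pair in front of it by an equal fraction of the cycle...
    base = leg.index / num_pairs
    # ...and right legs move half a cycle out of step with left legs.
    offset = 0.5 if leg.side > 0 else 0.0
    return (base + offset) % 1.0


def swing_angle(t: float, leg: Leg, num_pairs: int,
                amplitude: float = 0.4, period: float = 1.0) -> float:
    """Hip swing angle in radians for `leg` at time `t` seconds."""
    phase = leg_phase(leg, num_pairs)
    return amplitude * math.sin(2 * math.pi * (t / period + phase))


# Usage: the same code animates a creature with any number of leg pairs.
legs = build_legs(3)
angles = [swing_angle(0.25, leg, num_pairs=3) for leg in legs]
```

Because the motion is computed from the number of leg pairs, the same code animates a two-legged or a ten-legged creature without extra authoring work, which is the property the article attributes to procedural animation.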

As with most types of computer animation, the characters themselves are just “skins” — meshes of polygons — that are manipulated like marionettes by an invisible skeleton.

“As an animator, you can move the skeletons and create weight relationships with the surface points,” says Bächer, “but the skeletons inside are nonphysical with zero-dimensional joints; they’re not useful to our fabrication process at all. In fact, the skeleton frequently protrudes outside the body entirely.”
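The marionette relationship Bächer describes is commonly implemented with linear blend skinning: every skin vertex follows a weighted average of the transforms of the bones it is attached to. The source does not say which skinning method Spore uses, so treat this Python/NumPy sketch, including the function name `linear_blend_skinning`, as an illustration of the general technique. Each bone contributes only a transform about a point; nothing in the data describes a physical joint with size or shape, which is why such skeletons cannot be printed directly.

```python
import numpy as np


def linear_blend_skinning(rest_vertices, weights, bone_transforms):
    """
    rest_vertices:   (V, 3) skin vertex positions in the rest pose
    weights:         (V, B) per-vertex bone weights, each row summing to 1
    bone_transforms: (B, 4, 4) homogeneous transforms from rest pose to current pose
    Returns the (V, 3) deformed vertex positions.
    """
    num_vertices = rest_vertices.shape[0]
    # Append w = 1 so the 4x4 transforms can apply translations.
    homogeneous = np.hstack([rest_vertices, np.ones((num_vertices, 1))])
    # Move every vertex by every bone: shape (B, V, 4).
    per_bone = np.einsum("bij,vj->bvi", bone_transforms, homogeneous)
    # Blend the per-bone results with the skinning weights: shape (V, 4).
    blended = np.einsum("vb,bvi->vi", weights, per_bone)
    return blended[:, :3]
```

A vertex weighted half to a stationary bone and half to a bone that moves 1 unit along x ends up 0.5 units along x, which is also why heavily blended regions can collapse or stretch in ways a rigid printed part cannot.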

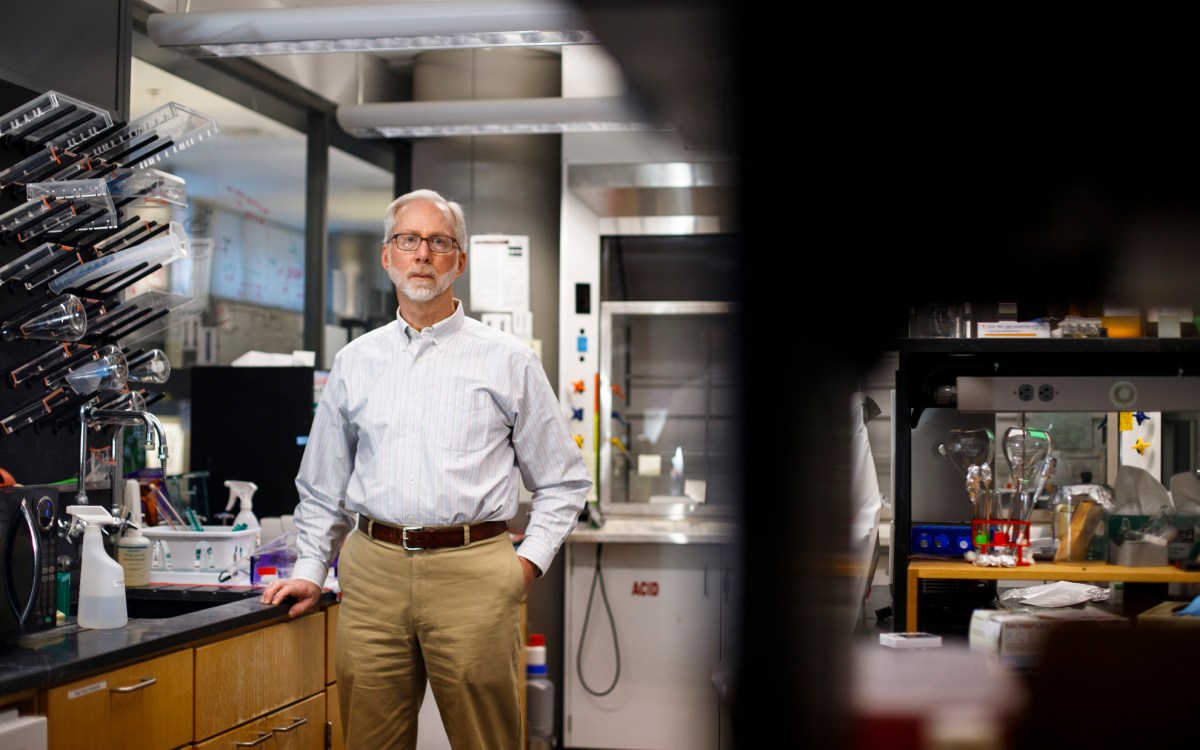

Bächer tackled the fabrication problem with his Ph.D. adviser, Hanspeter Pfister, Gordon McKay Professor of Computer Science at SEAS. They were joined by Bernd Bickel and Doug James at the Technische Universität Berlin and Cornell University, respectively.

This team of computer graphics experts developed a software tool that achieves two things: It identifies the ideal locations for the action figure’s joints, based on the character’s virtual articulation behavior, and then it optimizes the size and location of those joints for the physical world. For instance, a spindly arm might be too thin to hold a robust joint, and the joints in a curving spine might collide with each other if they are too close.
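The two physical constraints in the paragraph above (a joint must fit inside its limb, and neighboring joints must not collide) can be expressed as simple geometric checks. The following Python sketch is a hypothetical greedy heuristic, not the optimization the Harvard team describes; the `Joint` type, the `size_joints` function, and the default wall thickness, clearance, and radius limits (in millimeters) are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Joint:
    center: tuple[float, float, float]  # joint position, in mm (hypothetical units)
    limb_radius: float                  # local radius of the body around the joint, in mm


def size_joints(joints, wall=1.0, clearance=0.5, r_min=1.5, r_max=6.0):
    """Greedy sketch: pick a ball-joint radius per joint, then shrink colliding neighbors.

    Returns (radii, feasible); feasible is False if any joint ends up too small
    to print robustly.
    """
    # Step 1: largest ball that fits inside the limb with a solid wall around it.
    radii = [max(0.0, min(j.limb_radius - wall, r_max)) for j in joints]
    # Step 2: walk along the chain and shrink neighboring joints that would collide.
    for i in range(len(joints) - 1):
        available = math.dist(joints[i].center, joints[i + 1].center) - clearance
        total = radii[i] + radii[i + 1]
        if total > 0 and total > available:
            scale = max(available, 0.0) / total
            radii[i] *= scale
            radii[i + 1] *= scale
    feasible = all(r >= r_min for r in radii)
    return radii, feasible
```

Shrinking only ever reduces radii, so a single front-to-back pass keeps every earlier pair collision-free. A spindly arm shows up as `feasible == False` because even the largest ball that fits is below `r_min`; a real system would instead search over joint positions and sizes together.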

The software uses a series of optimization techniques to generate the best possible model, incorporating both hinges and ball-and-socket joints. It also builds some friction into the joint surfaces so that the printed figure can hold its poses.
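Whether a printed joint holds a pose comes down to a torque balance: the friction in the joint must resist the torque from the weight of the limb it supports. The sketch below uses a deliberately simplified friction model (friction torque of about μ × N × r for a joint of radius r pressed together with normal force N); the model, the function names, and the example numbers are assumptions for illustration, not values from the research.

```python
G = 9.81  # gravitational acceleration, m/s^2


def gravity_torque(limb_mass, com_horizontal_offset):
    """Torque (N*m) the limb's weight exerts about the joint.

    com_horizontal_offset is the horizontal distance (m) from the joint to the
    limb's center of mass; it is largest when the limb is held out sideways.
    """
    return limb_mass * G * com_horizontal_offset


def friction_torque(mu, normal_force, joint_radius):
    """Simplified friction torque (N*m): mu * N * r."""
    return mu * normal_force * joint_radius


def holds_pose(limb_mass, com_horizontal_offset, mu, normal_force, joint_radius):
    """True if joint friction can resist the limb's weight in this pose."""
    return friction_torque(mu, normal_force, joint_radius) >= gravity_torque(
        limb_mass, com_horizontal_offset
    )


def required_normal_force(limb_mass, com_horizontal_offset, mu, joint_radius):
    """Smallest normal force (N) that keeps the limb from drooping."""
    return gravity_torque(limb_mass, com_horizontal_offset) / (mu * joint_radius)
```

Under these assumptions, a 10 g limb whose center of mass sits 3 cm from a 4 mm joint with μ = 0.3 needs a normal force of about 2.45 N; a tighter fit holds the pose, while a looser one lets the limb sag.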

The tool also perfects the model’s skin texture. Procedurally animated characters tend to have a roughly defined, low-resolution skin so that they can be rendered in real time. Details and textures are typically added through a kind of virtual optical illusion: manipulating the surface normals, stored in a normal map, that determine how light reflects off the surface. To make these details show up in the 3-D print, the software analyzes the normal map and translates it into a realistic surface texture.
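One simple way to turn a normal map into geometry is to treat each normal as a slope and integrate the slopes into a height field, which can then displace the printed surface. The source does not say how the software performs this step, so the following Python/NumPy sketch (the function `normals_to_height` and its tangent-space convention are assumptions) shows only basic path integration. Real normal maps are rarely perfectly consistent, so a least-squares method such as Frankot–Chellappa integration is usually more robust.

```python
import numpy as np


def normals_to_height(normals, pixel_size=1.0):
    """
    normals: (H, W, 3) unit tangent-space normals (nx, ny, nz) with nz > 0
    pixel_size: surface distance covered by one pixel
    Returns an (H, W) height field with h[0, 0] = 0.
    """
    nx, ny, nz = normals[..., 0], normals[..., 1], normals[..., 2]
    # For a surface z = h(x, y) the normal is proportional to (-dh/dx, -dh/dy, 1),
    # so each normal encodes the slope; scale it to a height change per pixel.
    step_x = -nx / nz * pixel_size
    step_y = -ny / nz * pixel_size
    h = np.zeros(nx.shape)
    # Integrate along the first row (x direction)...
    h[0, 1:] = np.cumsum(step_x[0, :-1])
    # ...then down every column (y direction), starting from that row.
    h[1:, :] = h[0, :] + np.cumsum(step_y[:-1, :], axis=0)
    return h
```

Because the integration runs along one row and then down each column, any error in the input normals accumulates along those paths; that is the main weakness of this approach and the reason least-squares integration is often preferred.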

Then the 3-D printer sets to work, and out comes a fully assembled, robust, articulated action figure, bringing the virtual world to life.

“With an animation, you always have to view it on a two-dimensional screen, but this allows you to just print it and take an actual look at it in 3-D,” says Bächer. “I think that’s helpful to the artists and animators, to see how it actually feels in reality and get some feedback. Right now, perhaps they can print a static scene, just a character in one stance, but they can’t see how it really moves. If you print one of these articulated figures, you can experiment with different stances and movements in a natural way, as with an artist’s mannequin.”

Bächer’s model does not allow deformation anywhere except at the joints, so squishy, stretchable bodies are not yet captured in this process. That type of printed character might become possible, however, by incorporating other existing techniques.

For instance, in 2010, Pfister, Bächer, and Bickel were part of a group of researchers who replicated an entire flip-flop sandal using a multimaterial 3-D printer. The printed sandal mimicked the elasticity of the original foam rubber and cloth. With some more development, a later iteration of the “3-D-print” button could include this capability.

“Perhaps in the future someone will invent a 3-D printer that prints the body and the electronics in one piece,” Bächer muses. “Then you could create the complete animated character at the push of a button and have it run around on your desk.”

Harvard’s Office of Technology Development has filed a patent application and is working with the Pfister lab to commercialize the new technology, either by licensing it to an existing company or by forming a startup. Near-term areas of interest include cloud-based services for creating highly customized, user-generated products such as toys, as well as enhancements that add these capabilities to existing animation and 3-D printer software.

The research was supported by the National Science Foundation, Pixar, and the John Simon Guggenheim Memorial Foundation.